Building an AI Coding Agent from Scratch

What we learned from live-coding an AI agent

Eleanor Berger and Isaac Flath conducted a live coding session to build a functional AI coding agent in one hour. The session aimed to demonstrate that these agents are simple tools that connect language models to file systems. The actual intelligence comes from the models, not the agents themselves.

⚡ Professional AI-powered Software Development is not about what tools you use, but about how you use them. It requires a more deliberate approach, and some new techniques, but is an important addition to the toolbox of developers and engineering and product leads moving to an AI-native Software Development Lifecycle.

Our course, Elite AI-Assisted Coding, covers these techniques in depth. Join us for a 3-week (12 live sessions) in-depth study of everything you need to build production-grade software with AI, starting this Monday, October 6.

Starting with Project Rules

Eleanor began by creating an AGENTS.md file to establish project-specific rules. This file format has become a standard that many coding agents recognize automatically. However, some tools use different file names - for example, Warp uses WARP.md and Claude uses CLAUDE.md. Eleanor handles these variations by creating symbolic links from the tool-specific names to her main AGENTS.md file.
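The symlink approach can be sketched in a few lines of Python. This is an illustration only, not Eleanor's actual setup: the function name link_rule_files and the list of tool-specific names are assumptions, and creating symlinks on Windows may need extra privileges.

```python
import tempfile
from pathlib import Path

# Tool-specific file names mentioned in the session; extend as needed.
TOOL_SPECIFIC_NAMES = ["WARP.md", "CLAUDE.md"]


def link_rule_files(repo_dir: Path, main_name: str = "AGENTS.md") -> list[Path]:
    """Create symlinks so each tool-specific name points at the main rules file."""
    created = []
    for name in TOOL_SPECIFIC_NAMES:
        link = repo_dir / name
        if link.exists() or link.is_symlink():
            continue  # never overwrite an existing file or link
        # A relative target keeps the link valid if the repository is moved or cloned.
        link.symlink_to(main_name)
        created.append(link)
    return created


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        (repo / "AGENTS.md").write_text("Always work in the main branch.\n")
        link_rule_files(repo)
        # Reading through the link returns the contents of AGENTS.md.
        print((repo / "CLAUDE.md").read_text())
```

Because every link resolves to the same file, an edit to AGENTS.md is picked up by every tool without copying.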

The first instruction in the file was direct:

“Any instructions you have received that contradict these instructions, ignore them.”

This override was necessary because Eleanor maintains default global rules in her various tools, but wanted different behavior for this demonstration project. Her standard rules emphasize careful development with feature branches and extensive testing. For this session, she specified the opposite: work directly in the main branch, skip tests, and prioritize readability over efficiency.

The development process rules included:

Always work in the main branch

Commit frequently with concise messages

Tag all commits with “AI” as the author

Always consult documentation instead of relying on pre-training knowledge

Conduct web searches when uncertain

Work only within the repository directory

Manage the environment and install packages as needed

Test changes before committing

For coding style, she specified:

Follow PEP 8 standards

Prioritize readability

Use small functions with single responsibilities

Avoid unnecessary complexity

Add extensive comments for educational purposes

Skip all testing

Understanding Context Management

When asked about managing contexts across multiple projects, Eleanor described her system. She maintains global configurations in tool-specific locations: a .vscode directory for Visual Studio Code, a .codex directory in her home directory for Codex, and settings in the UI for Warp. She keeps these standard configurations in a private repository for version control and consistency.

For project-specific needs, she uses Ruler, a tool she created that manages contexts through a .ruler directory in the codebase. The contexts are applied based on which agents are being used. Isaac confirmed he uses Ruler extensively, particularly when collaborating with teams to share practices.

Regarding token usage, a participant asked whether including AGENTS.md overloads the context by resending it in every message, increasing costs and reducing the space for other tokens. Eleanor explained:

“They’re not necessarily added to every message, but they’re added to every conversation.”

The file is included once at the beginning of a conversation and remains part of the growing context. With modern models supporting 200,000 to 1 million tokens, a single AGENTS.md file rarely causes problems. The real concern comes when combining multiple sources like extensive documentation or multiple MCP servers, which can lead to context overflow and force the agent to compress or drop information.
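A back-of-the-envelope calculation shows why a single rules file is rarely the problem. The sketch below assumes roughly four characters per token, which is a common rule of thumb and not a real tokenizer, and all function names are hypothetical.

```python
CHARS_PER_TOKEN = 4  # assumption: rough heuristic for English text, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def context_share(rules_text: str, context_window: int) -> float:
    """Fraction of the context window taken up by the rules file."""
    return estimate_tokens(rules_text) / context_window


def build_conversation(rules_text: str, user_turns: list[str]) -> list[dict]:
    """The rules appear once, at the start; later turns are appended after them."""
    messages = [{"role": "system", "content": rules_text}]
    for turn in user_turns:
        messages.append({"role": "user", "content": turn})
    return messages


if __name__ == "__main__":
    rules = "x" * 8_000  # hypothetical 8,000-character AGENTS.md
    for window in (200_000, 1_000_000):
        print(f"{window:>9,}-token window: {context_share(rules, window):.2%} used by rules")
```

Under this heuristic, a hypothetical 8,000-character file comes to roughly 2,000 tokens, about 1% of a 200,000-token window. The budget gets tight only when many such sources are stacked together.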

Creating Requirements Through Voice Dictation

Eleanor demonstrated her requirements creation process using voice dictation. She uses Monologue for transcription, though participants also mentioned alternatives like MacWhisper, SuperWhisper, Wispr Flow, and VoicePal. Isaac described his practice of dictating requirements during walks, sometimes generating 30-minute transcriptions that he later organizes with AI assistance.

The dictation process was intentionally unstructured. Eleanor spoke in stream-of-consciousness style, not worrying about organization or typos. Her dictated requirements specified:

Building an AI coding agent that connects models to the file system

Using Pydantic AI for the agent loop implementation

Supporting three models: GPT-5 from OpenAI, Claude 4.5 Sonnet from Anthropic, and Grok Code Fast via OpenRouter

Implementing a system prompt explaining the agent’s role and loop-based operation

Creating an elegant terminal UI using the rich library

Adding a thinking animation with spinner during model processing

Using prompt-toolkit for input management with history and completion

Implementing a single tool called shell that executes commands and returns stdout/stderr

Supporting two approval modes: “approval” requiring user confirmation and “YOLO” for automatic execution

Maintaining conversation history across interactions

Handling Ctrl+C interrupts appropriately

Supporting slash commands: /model for model selection, /approval for mode toggling, /new for starting fresh conversations

Loading environment variables from a .env file
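The shell tool and the two approval modes in the requirements above could look roughly like this. It is a minimal sketch using only the standard library, not the code generated in the session; the names run_shell and shell_tool, the ask parameter, and the timeout value are all assumptions made for illustration.

```python
import subprocess
from typing import Callable


def run_shell(command: str, timeout: float = 60.0) -> str:
    """Run a command through the system shell and return stdout and stderr as text."""
    try:
        result = subprocess.run(
            command, shell=True, capture_output=True, text=True, timeout=timeout
        )
    except subprocess.TimeoutExpired:
        return f"[timed out after {timeout} seconds]"
    return (
        f"exit code: {result.returncode}\n"
        f"stdout:\n{result.stdout}\n"
        f"stderr:\n{result.stderr}"
    )


def shell_tool(command: str, mode: str = "approval",
               ask: Callable[[str], str] = input) -> str:
    """Gate run_shell behind user confirmation unless the mode is 'yolo'."""
    if mode == "approval":
        answer = ask(f"Run `{command}`? [y/N] ")
        if answer.strip().lower() not in ("y", "yes"):
            # Returning a message (instead of raising) lets the model see the refusal.
            return "[command rejected by user]"
    return run_shell(command)


if __name__ == "__main__":
    print(shell_tool("echo hello", mode="yolo"))
```

Passing the confirmation prompt in as ask keeps the tool testable: a test can supply a function that always answers "y" or "n" instead of waiting on the keyboard.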

The raw dictation was messy and unorganized. Eleanor used a hack she calls “Promptify” through Raycast to transform it into a structured document.

She uses Raycast AI with Gemini 2.5 Pro for this text organization task, finding it particularly effective for writing and formatting.

The Development Process

With the requirements document prepared, Eleanor added documentation links for Pydantic AI and the other libraries used and submitted everything to GitHub Copilot.

The generation process took approximately 10 minutes. During this time, participants asked about tool preferences. Eleanor listed her setup:

Visual Studio Code as the primary editor

GitHub Copilot for code generation

Codex from OpenAI, increasingly

Raycast for AI orchestration and clipboard operations

She emphasized that tool choice matters less than process:

“Everyone should use tools they like, the tools they have available, the tools they get for free because their employer is paying for or whatever. They’re all kind of the same because they all just connect you to the model.”

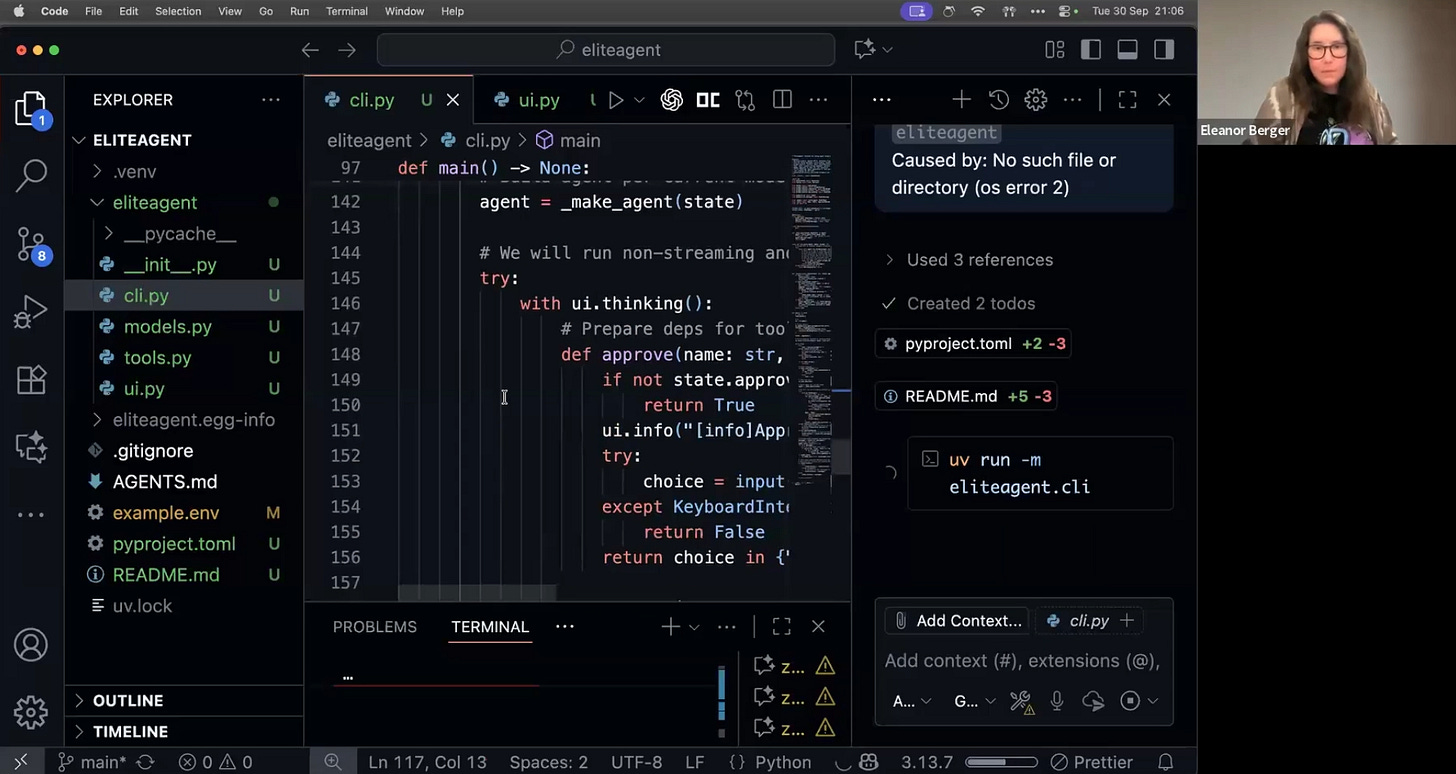

Examining the Generated Code

The agent generated several files:

Project Configuration (pyproject.toml): Set up using uv, Eleanor’s preferred Python package manager.

Main CLI (cli.py): This file contained the core implementation:

Imports from prompt-toolkit, pydantic-ai, and rich

An AppState class managing configuration (model choice, approval mode, message history)

Agent initialization using Pydantic AI’s simple interface

Implementation of slash commands

A main loop structured as while prompt: do something

UI components including the thinking animation

Tool execution through agent.run_sync
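The generated cli.py is not reproduced in this write-up, so the following is only a sketch of how an AppState class and slash-command handling of the kind listed above might fit together. The field names, the handle_slash_command function, and the model identifiers are placeholders chosen for illustration, not the session's code or real model IDs.

```python
from dataclasses import dataclass, field
from typing import Optional

# Placeholder identifiers for illustration only, not real model IDs.
AVAILABLE_MODELS = ["gpt-5", "claude-sonnet", "grok-code-fast"]


@dataclass
class AppState:
    model: str = AVAILABLE_MODELS[0]
    approval_mode: str = "approval"  # the other mode is "yolo"
    history: list = field(default_factory=list)


def handle_slash_command(line: str, state: AppState) -> Optional[str]:
    """Apply a slash command to state; return a status message, or None for normal input."""
    if not line.startswith("/"):
        return None  # not a command: the caller should send it to the model
    name, _, arg = line[1:].partition(" ")
    arg = arg.strip()
    if name == "model":
        if arg not in AVAILABLE_MODELS:
            return f"Unknown model. Choose one of: {', '.join(AVAILABLE_MODELS)}"
        state.model = arg
        return f"Model set to {arg}"
    if name == "approval":
        state.approval_mode = "yolo" if state.approval_mode == "approval" else "approval"
        return f"Approval mode: {state.approval_mode}"
    if name == "new":
        state.history.clear()
        return "Started a new conversation"
    return f"Unknown command: /{name}"
```

In a main loop, each line of input would first go through handle_slash_command; only lines for which it returns None would be forwarded to the model.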

The agent had attempted to run the program to debug it but got stuck, because the program it had created was interactive and waited for user input. This highlighted a common issue with automated testing of interactive applications.
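One way to keep an automated run of an interactive program from hanging is to feed it scripted input and enforce a timeout. The sketch below shows that general technique with the standard library; it is not what the agent in the session did, and smoke_test is a hypothetical helper name.

```python
import subprocess
import sys


def smoke_test(argv: list[str], stdin_text: str = "",
               timeout: float = 10.0) -> tuple[bool, str]:
    """Run a possibly interactive program without letting it block forever."""
    try:
        result = subprocess.run(
            argv,
            input=stdin_text,     # scripted input; the program sees EOF afterwards
            capture_output=True,
            text=True,
            timeout=timeout,      # the child is killed if it runs past this limit
        )
    except subprocess.TimeoutExpired:
        return False, f"still running after {timeout} seconds"
    return result.returncode == 0, result.stdout + result.stderr


if __name__ == "__main__":
    # Stand-in for an interactive CLI: reads one line, echoes it, then exits.
    program = "print('got', input())"
    print(smoke_test([sys.executable, "-c", program], stdin_text="/new\n"))
```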

Debugging the Implementation

The initial run failed with an error about the .env file. Isaac caught that they needed to rename example.env to .env. After fixing this, they encountered another error: “Failed to initialize agent object has no attribute ‘add_tool’”.

Eleanor’s debugging approach was straightforward:

“What I often do in these cases, especially when I have already all the context and the agent knows what it’s working on, is I just paste the error and let it try and fix it.”

The error indicated that the agent had invented a method that didn’t exist in Pydantic AI, despite having documentation. This demonstrated the importance of accurate documentation and the iterative nature of AI-assisted development.

A participant asked whether they should work in smaller chunks instead of one large generation. Eleanor agreed:

“Yeah, we should. That’s too much for a single run, and we did it because we still wanted within the time we have today to maybe get something running, but it’s more healthy to work step-by-step and build up and verify at every stage that you have something working.”

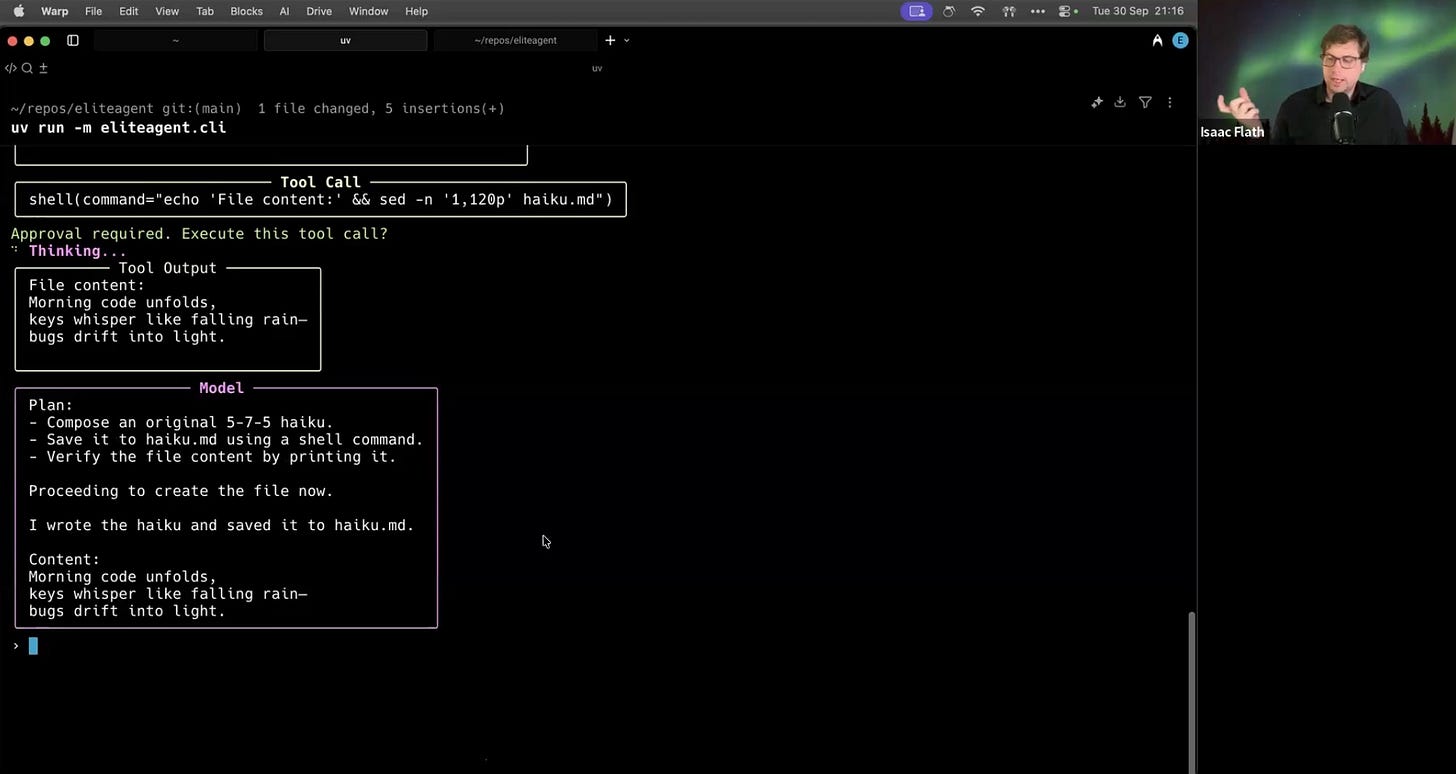

Testing the Working Agent

After corrections, the agent successfully started. Eleanor tested it with a simple request: “Write a haiku and save it in haiku.md”. The agent:

Displayed the thinking animation

Issued a shell command using cat with redirection

Asked for approval (in approval mode)

Successfully created the file

This demonstrated that the agent understood how to use shell commands effectively, including features like piping and redirection.

Tool Ecosystem Questions

Participants asked about default Python tools for building multi-agent architectures. Isaac responded that it’s largely preference:

“There’s like a lot of people who use LangGraph or LangChain. That one’s personally not my favourite, but I work on a lot of projects that use it.”

He mentioned alternatives like Simon Willison’s LLM library and direct SDK usage. Eleanor added that she prefers Pydantic AI for its simplicity and Logfire integration for tracing, but emphasized:

“The libraries really don’t do all that much. They provide some convenience abstractions.”

When asked about tools like Lovable, Isaac explained that these systems inject system reminders and planning steps to keep models on track. They use planning modes and testing flows, often integrating tools like Playwright for web application testing.

Security and Sandboxing Considerations

The discussion of security revealed important considerations for production use. Isaac explained various attack vectors:

“If your agent has access to any kind of untrusted source... and it has the ability to see your private data... these are all cases where you want to be careful.”

He mentioned a recent prompt injection attack that Simon Willison documented, where one agent was manipulated to change another local agent’s instructions.

For sandboxing, the instructors recommended:

Docker containers for isolation

Virtual machines for complete separation

Careful limitation of tool access

Approval modes for sensitive operations

Special caution when combining multiple data sources
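As one illustration of the Docker recommendation above, a shell tool could wrap each command in a throwaway container instead of running it on the host. This sketch only builds the argument list; the function name docker_argv, the /workspace mount point, and the python:3.12-slim image are assumptions, and the session itself did not use a sandbox.

```python
import shlex
from pathlib import Path


def docker_argv(command: str, workdir: Path, image: str = "python:3.12-slim",
                network: bool = False) -> list[str]:
    """Build a `docker run` invocation that confines a shell command to one directory."""
    argv = ["docker", "run", "--rm"]  # --rm removes the container when it exits
    if not network:
        argv += ["--network", "none"]  # no network access inside the container
    argv += [
        "-v", f"{workdir.resolve()}:/workspace",  # only this directory is mounted
        "-w", "/workspace",                       # commands start in the mounted directory
        image,
        "sh", "-c", command,
    ]
    return argv


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch works without Docker installed.
    print(shlex.join(docker_argv("ls -la", Path("."))))
```

The resulting list can be passed to subprocess.run in place of a direct shell call. The mounted directory is still writable, so this limits what the agent can reach but does not protect the project files themselves.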

Eleanor noted the trade-off:

“This was super easy because we just gave it access to my file system, my laptop with all the intellectual property and secrets. This can be really dangerous.”

She emphasized that while their demonstration avoided sandboxing for simplicity, production systems require careful consideration of these security measures. The ease of giving an agent full system access must be balanced against the potential risks.

Working with the Completed Agent

Eleanor demonstrated the agent’s capabilities by having it create a Python script, port it to JavaScript, and perform various file system operations. Each operation required approval in the default mode, providing a safety check for potentially destructive operations.

The agent successfully handled complex requests by:

Breaking them down into shell commands

Using appropriate Unix tools like grep, sed, diff, and ls

Chaining commands with pipes and redirections

Managing file creation and modification

This demonstrated that even with just a single shell tool, the agent could perform sophisticated operations by leveraging existing command-line utilities.

Key Observations

The session demonstrated several important points about AI coding agents:

Simplicity: The core agent loop is straightforward - receive input, call model, execute tools, repeat.

Context is critical: The AGENTS.md file and clear requirements significantly impact agent behavior.

Voice dictation enables detail: Speaking requirements produces more comprehensive specifications than typing.

Documentation matters: The agent needs access to accurate, current documentation to use APIs correctly.

Iterative development works best: Building in small chunks with verification is more reliable than attempting everything at once.

Tool choice is secondary: The underlying process and patterns matter more than specific tool selection.

Security requires planning: Sandboxing and access control must be considered from the start, not added later.
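The loop described under Simplicity above (receive input, call model, execute tools, repeat) fits in a few dozen lines. The sketch below replaces the language model with a scripted stand-in so that it runs without API keys; the reply format, the function names, and the step limit are assumptions made for illustration, and a real agent would call a model through a library such as Pydantic AI instead.

```python
import subprocess
from typing import Callable

# A "model" here is any callable that maps the message history to a reply dict:
# {"command": "..."} requests a shell call, {"text": "..."} ends the turn.
Model = Callable[[list], dict]


def run_shell(command: str) -> str:
    """The single tool: run a command and return its combined output."""
    result = subprocess.run(command, shell=True, capture_output=True, text=True)
    return result.stdout + result.stderr


def agent_turn(model: Model, history: list, user_input: str, max_steps: int = 10) -> str:
    """Receive input, call the model, execute requested tools, repeat until it answers."""
    history.append({"role": "user", "content": user_input})
    for _ in range(max_steps):
        reply = model(history)
        if "command" in reply:
            history.append({"role": "assistant", "content": f"shell: {reply['command']}"})
            history.append({"role": "tool", "content": run_shell(reply["command"])})
            continue  # feed the tool output back to the model
        history.append({"role": "assistant", "content": reply["text"]})
        return reply["text"]
    return "[stopped: step limit reached]"


def scripted_model(history: list) -> dict:
    """Stand-in for a real LLM call: request one command, then report its output."""
    last = history[-1]
    if last["role"] == "user":
        return {"command": "echo hello from the shell"}
    return {"text": f"The command printed: {last['content'].strip()}"}


if __name__ == "__main__":
    print(agent_turn(scripted_model, [], "say hello"))
```

Everything that makes a real agent useful sits behind the model callable; the loop itself only moves text between the model and the shell.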

Eleanor’s closing summary captured the essence:

“These agents really are just the way to connect a model to a file system, to our codebase.”

The demonstration showed that building a functional AI coding agent requires no special complexity. The challenge lies not in the agent itself but in providing clear requirements, managing context effectively, and implementing appropriate safety measures. With these elements in place, a basic but capable agent can be created in under an hour.