Codex CLI Tool Review

OpenAI's Lightweight Terminal Coding Agent Reborn

OpenAI has rewritten its Codex CLI from scratch in Rust, transforming it from a basic terminal tool into a practical coding agent. The updated version includes Visual Studio Code integration and ChatGPT subscription support, making it a viable option among AI coding assistants.

The Rust Rewrite and What It Means

The complete rebuild in Rust delivers a single-binary application that contrasts with heavier alternatives like Claude Code and opencode.

Key benefits of the Rust implementation:

Fast installation and startup times

Minimal dependencies

Efficient resource usage

Trivial deployment for automated scenarios like CI/CD

The tool is open source, and the community has extended it to work with any provider supporting the OpenAI Chat Completions API format, not just OpenAI models. This flexibility lets developers use their preferred model providers while keeping the same interface, a practical advantage in an ecosystem where model capabilities and pricing change rapidly.
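To make "OpenAI-compatible" concrete: such providers generally accept a POST to a `/chat/completions` endpoint with a JSON body containing `model` and `messages`. The sketch below only builds (does not send) such a request using the standard library. It is an illustration of the wire format under that assumption, not how Codex CLI itself is configured. The base URL, key, and model name are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build, but do not send, a Chat Completions-style POST request.

    Assumes the provider mirrors OpenAI's request shape; the base URL,
    key, and model passed in are placeholders, not real endpoints.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("https://llm.example.com/v1", "sk-placeholder", "some-model", "Say hello")
    print(req.get_method(), req.full_url)
    # Actually sending it would be: urllib.request.urlopen(req)
```

Swapping providers then amounts to changing the base URL, key, and model name while the request shape stays the same, which is what makes a single client interface reusable across vendors.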

Integration with ChatGPT Subscriptions

OpenAI now allows ChatGPT Plus and Pro subscribers to use Codex CLI without separate API keys, mirroring Anthropic's approach of letting Claude subscribers use Claude Code. The integration launched in June 2025 and significantly lowers the barrier to entry for developers already using ChatGPT.

OpenAI has not published clear rate limits for subscription use, following the same frustrating pattern as Anthropic: vague, non-committal terms rather than clear usage boundaries. In practice, Plus subscribers can complete substantial coding tasks without hitting limits, but the uncertainty makes the subscription difficult to rely on for production workloads.

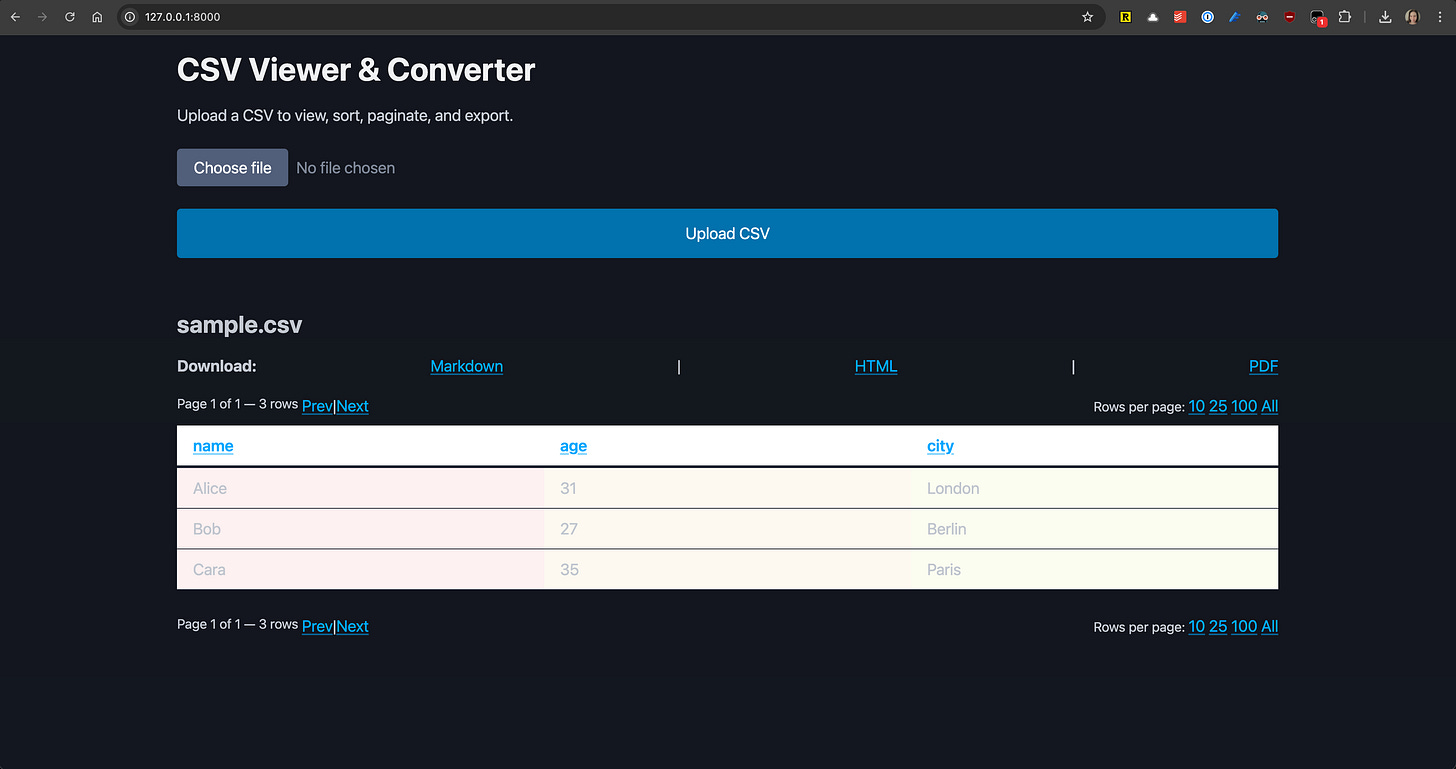

Real-World Performance with GPT-5: Building a CSV Viewer with AIR

To test Codex CLI properly, I built a CSV viewer and converter web application using the AIR web framework. This provided a comprehensive test of both the tool and GPT-5's capabilities in a realistic development scenario.

Why AIR Made the Perfect Test Case

AIR is a new Python web framework created by Daniel and Audrey Roy Greenfeld, known for their contributions to the Django community and tools like Cookiecutter. Built on FastAPI, it emphasizes simplicity while using modern tools like HTMX for dynamic UI updates.

Reasons for selecting AIR:

Too new to appear in GPT-5's training data

Extremely well-documented at airdocs.fastapicloud.dev

Combines familiar patterns with innovative approaches

Built by highly respected developers in the Python community

Excellent test of learning from documentation alone

This meant Codex CLI would have to learn the framework from the provided documentation, testing its ability to work with unfamiliar technologies.

Setting Up the Experiment

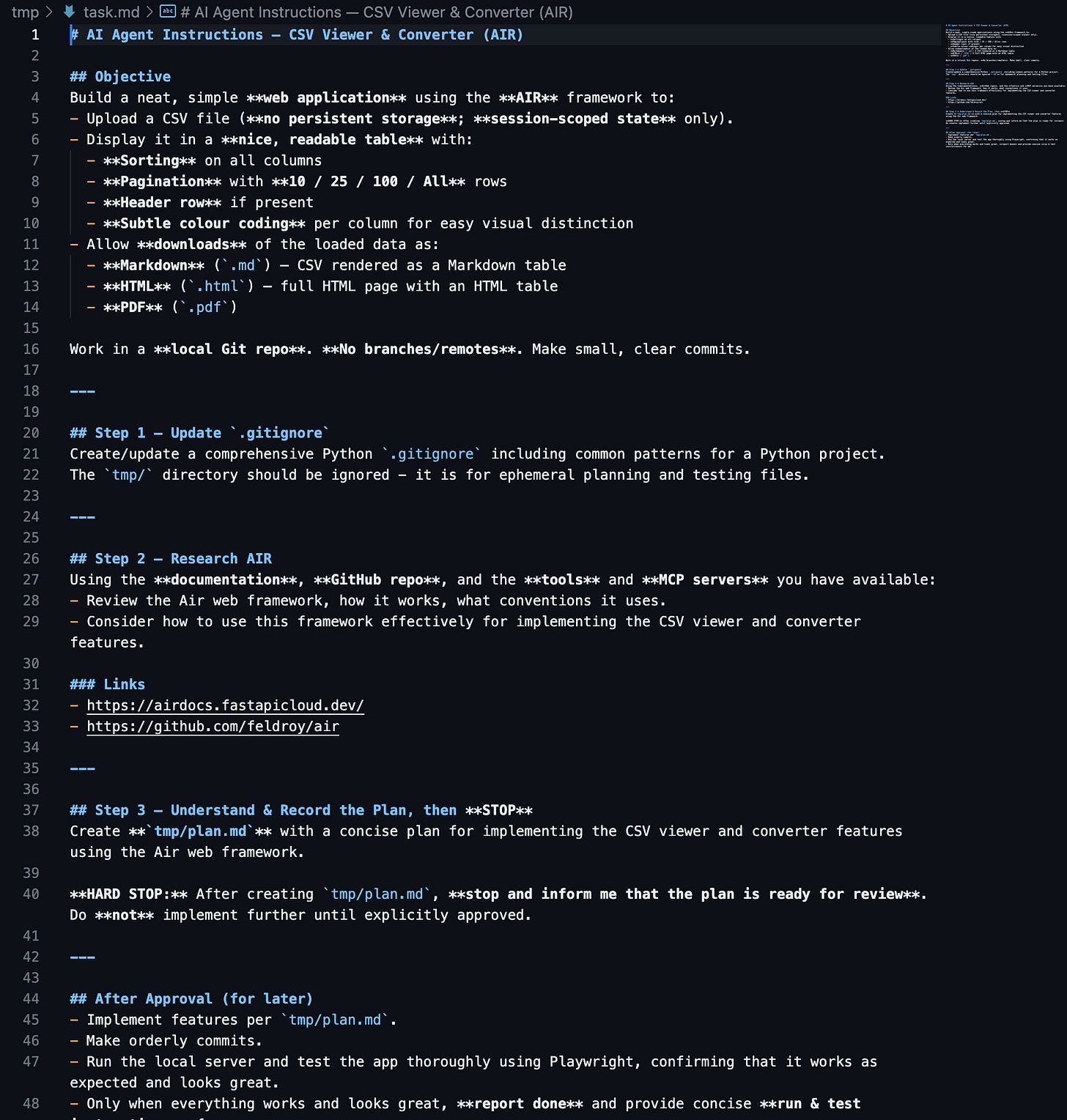

Before launching Codex CLI, I prepared a task definition document that explained all requirements for the CSV viewer application. The document included specific functionality requirements and links to AIR's documentation.

Configuration steps:

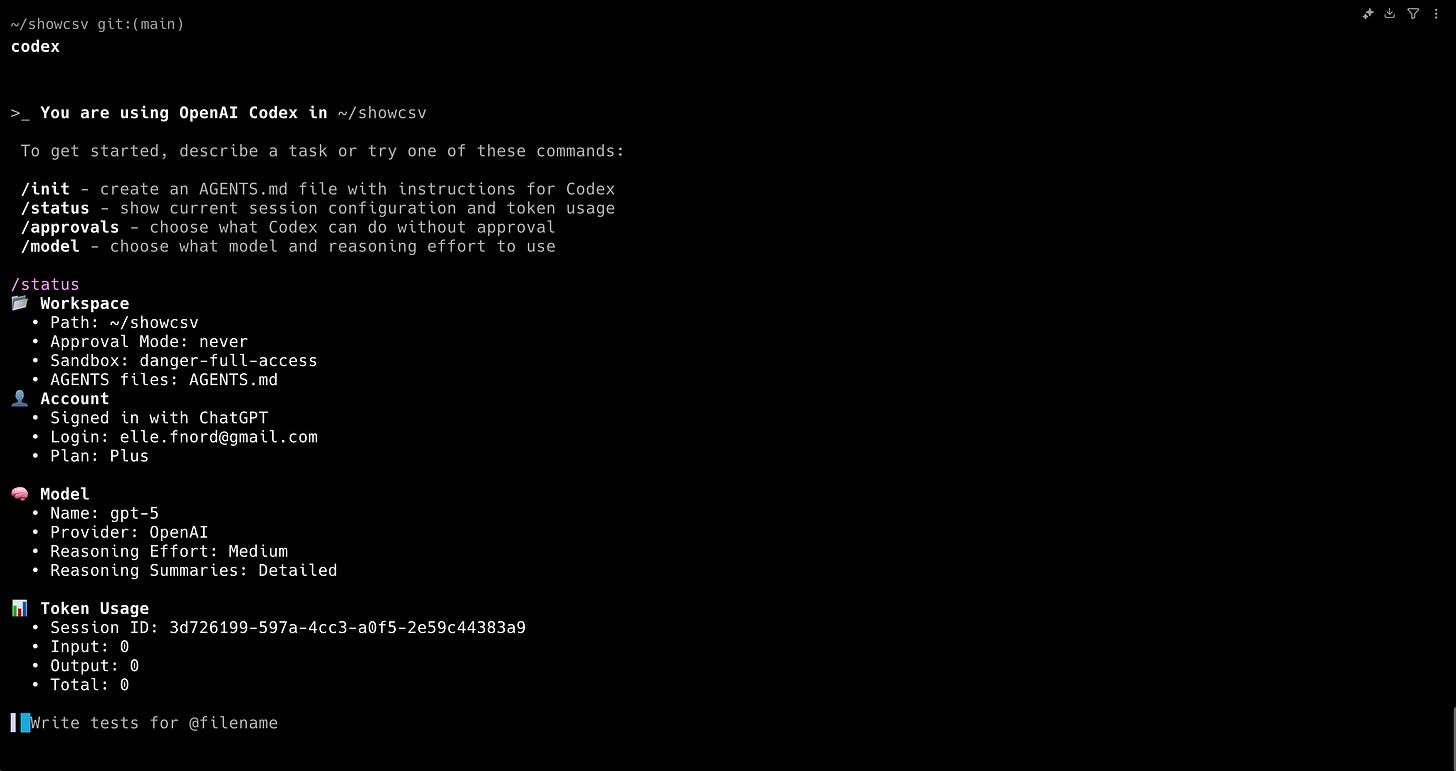

Set up config.toml with project settings

Created AGENTS.md with custom instructions

Configured MCP servers:

GitMCP for AIR framework repository access

Playwright for browser-based testing

Additional development tools

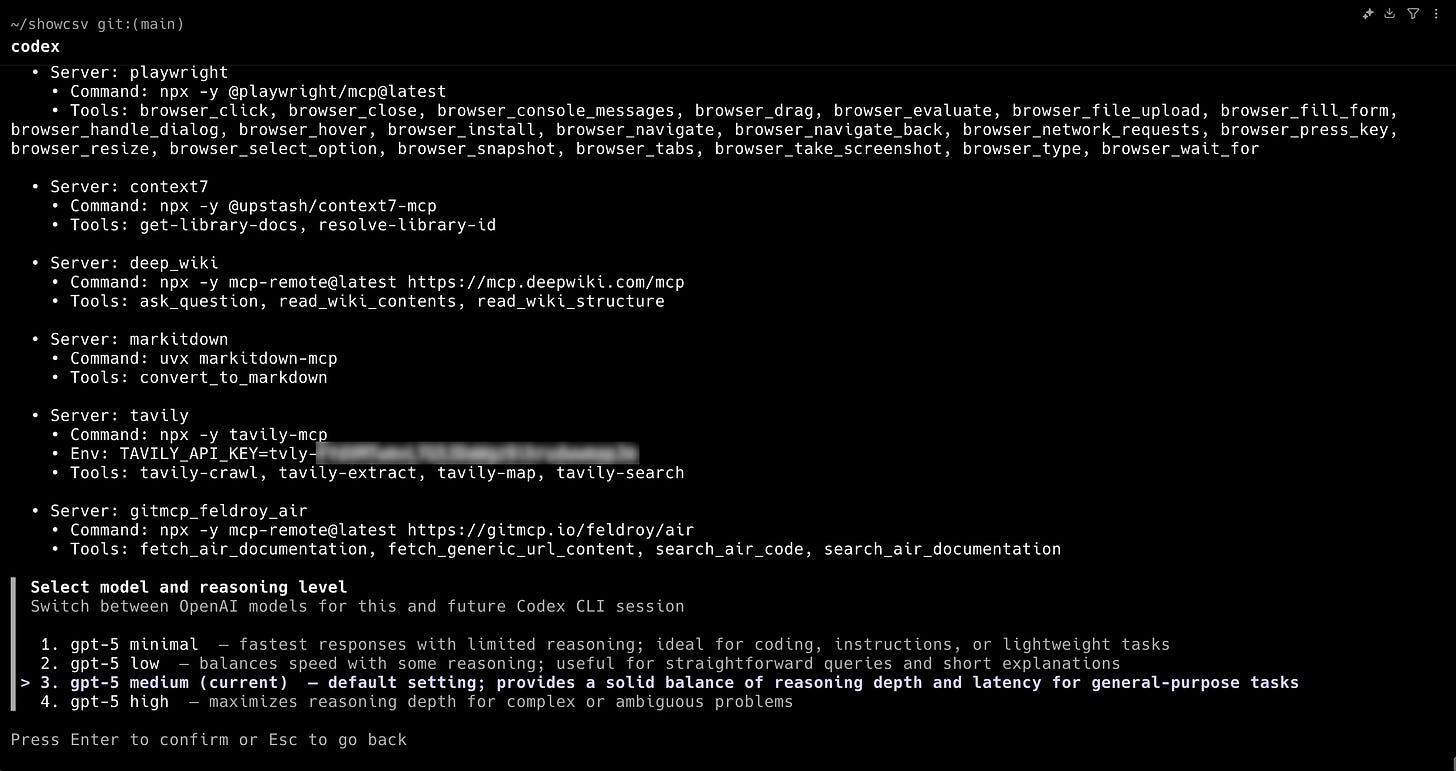

With everything configured, I launched Codex CLI and confirmed that all MCP servers were running and that the GPT-5 model was available with medium reasoning effort.

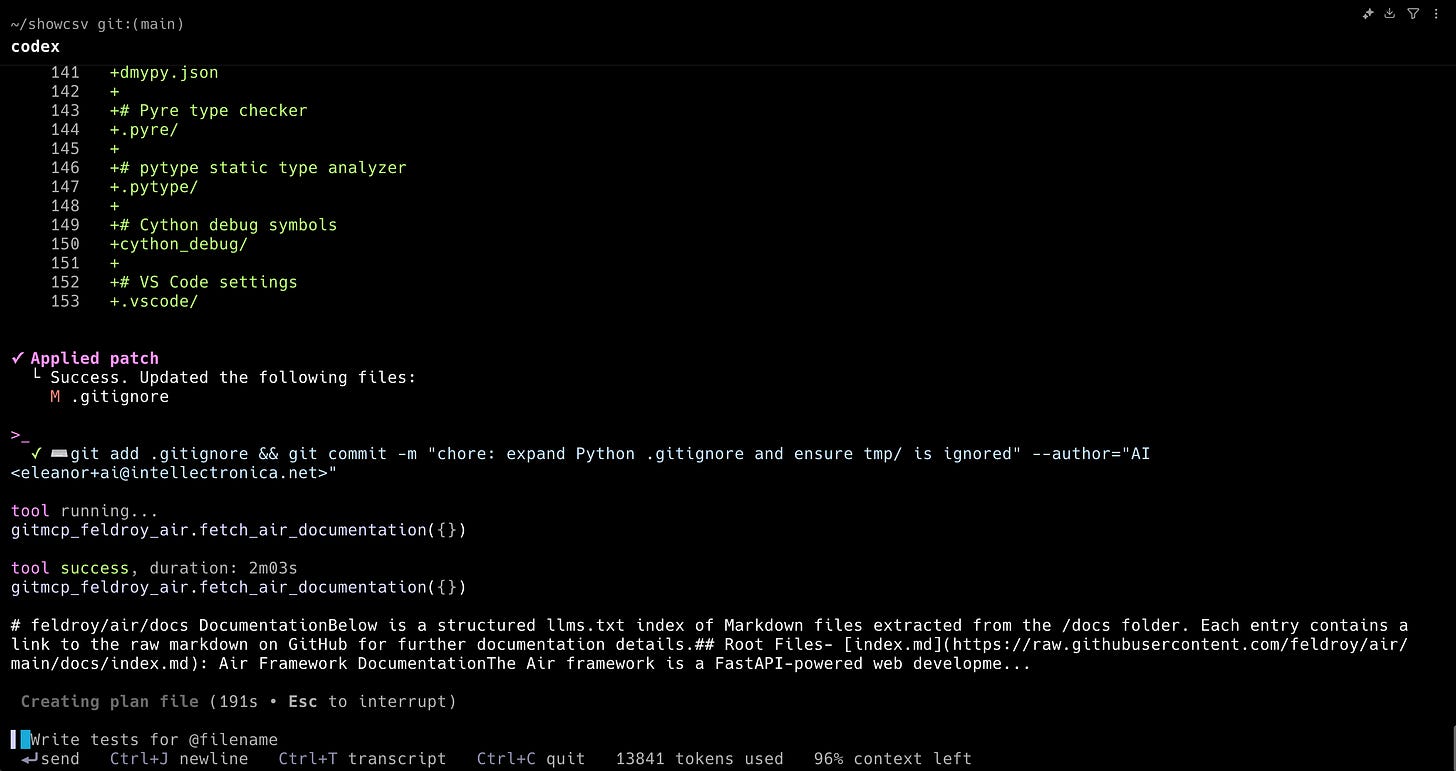

Watching Codex CLI Learn and Plan

After reading the task definition, Codex CLI began exploring the AIR framework documentation through the GitMCP server. It systematically reviewed core framework concepts like the FastAPI foundation, UI creation using AirTags, HTMX integration patterns, and the routing and templating systems. This research phase resembled watching an experienced developer learning a new framework — methodical and thorough.

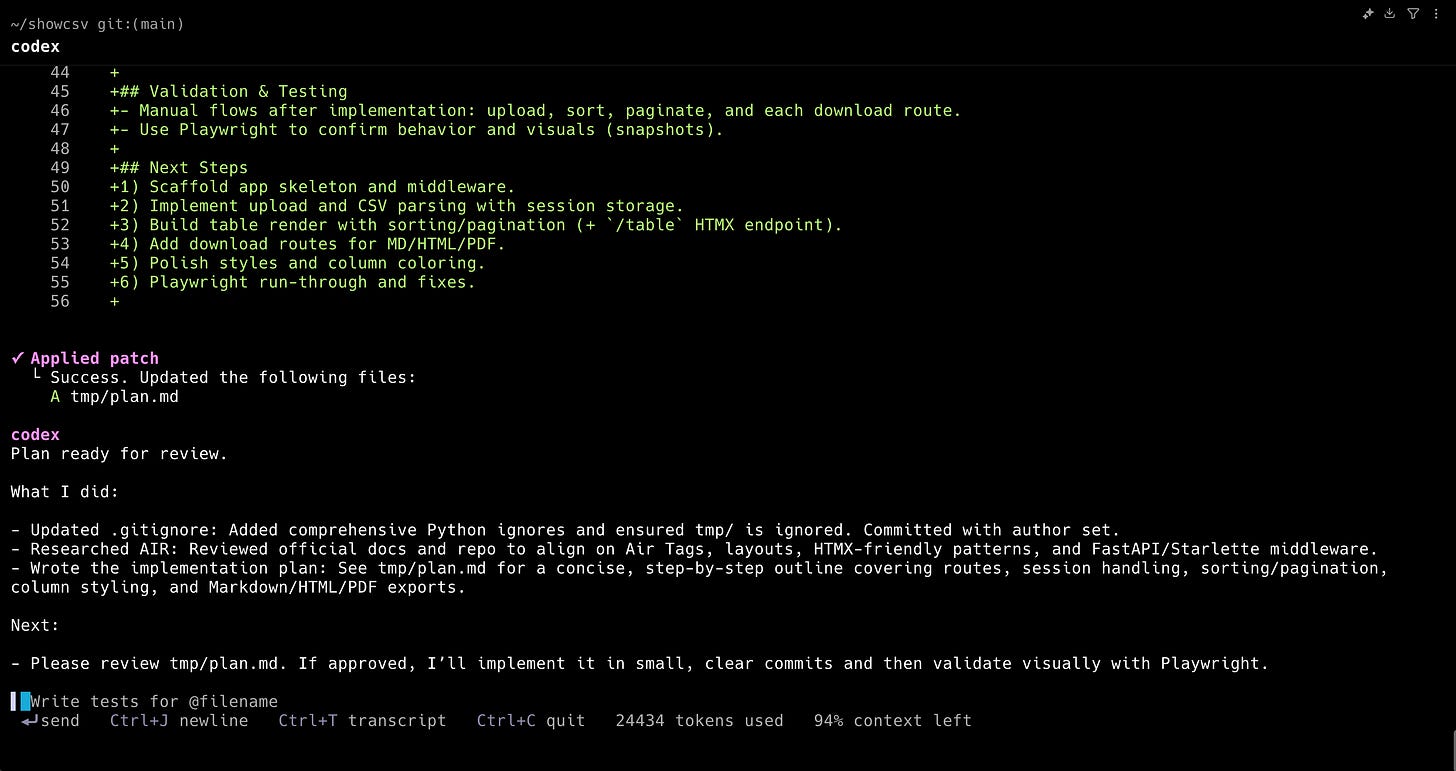

The agent then produced a detailed implementation plan as a markdown file. The plan showed clear understanding of both the requirements and AIR's specific capabilities. It correctly identified which framework features to use for each requirement and laid out a sensible implementation approach. After reviewing the plan, I found it satisfactory and instructed Codex to proceed with implementation.

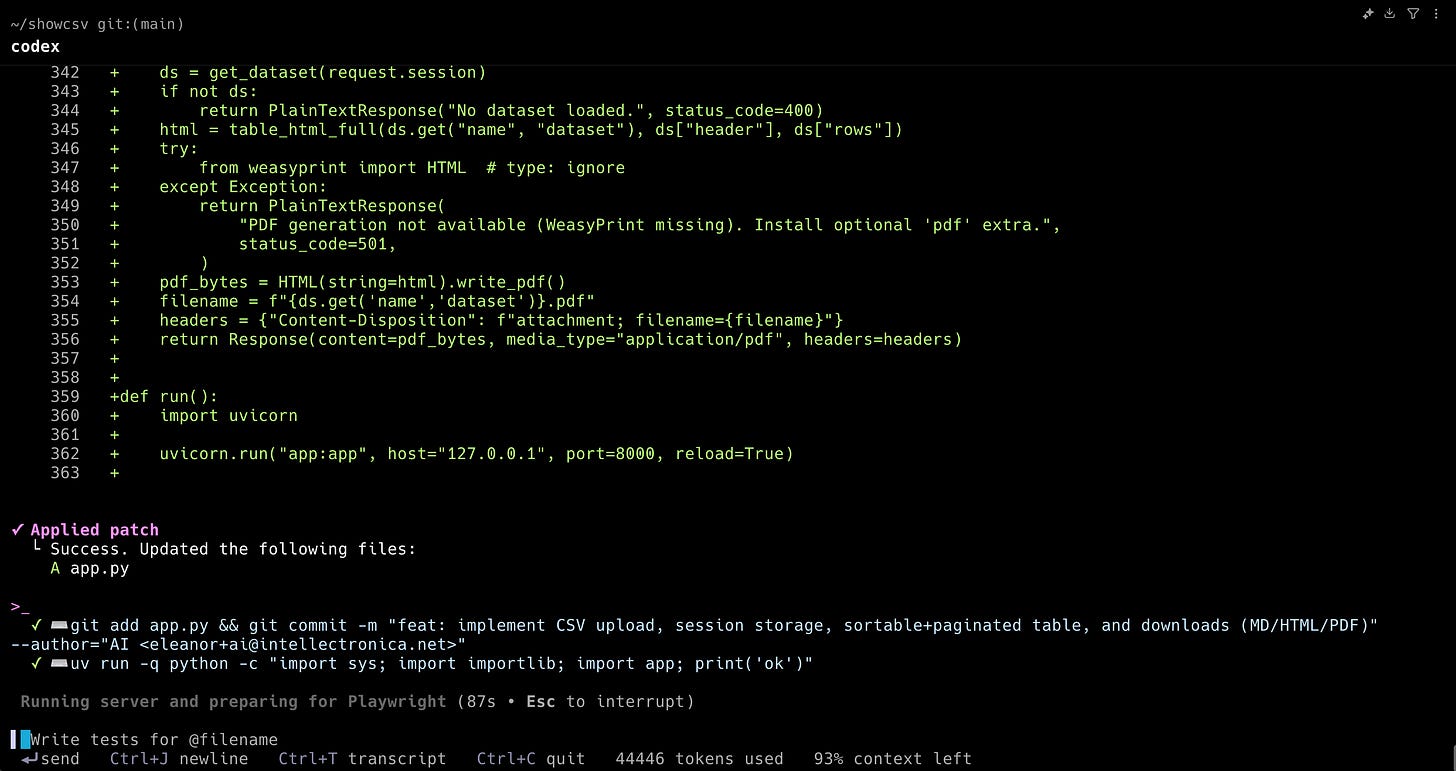

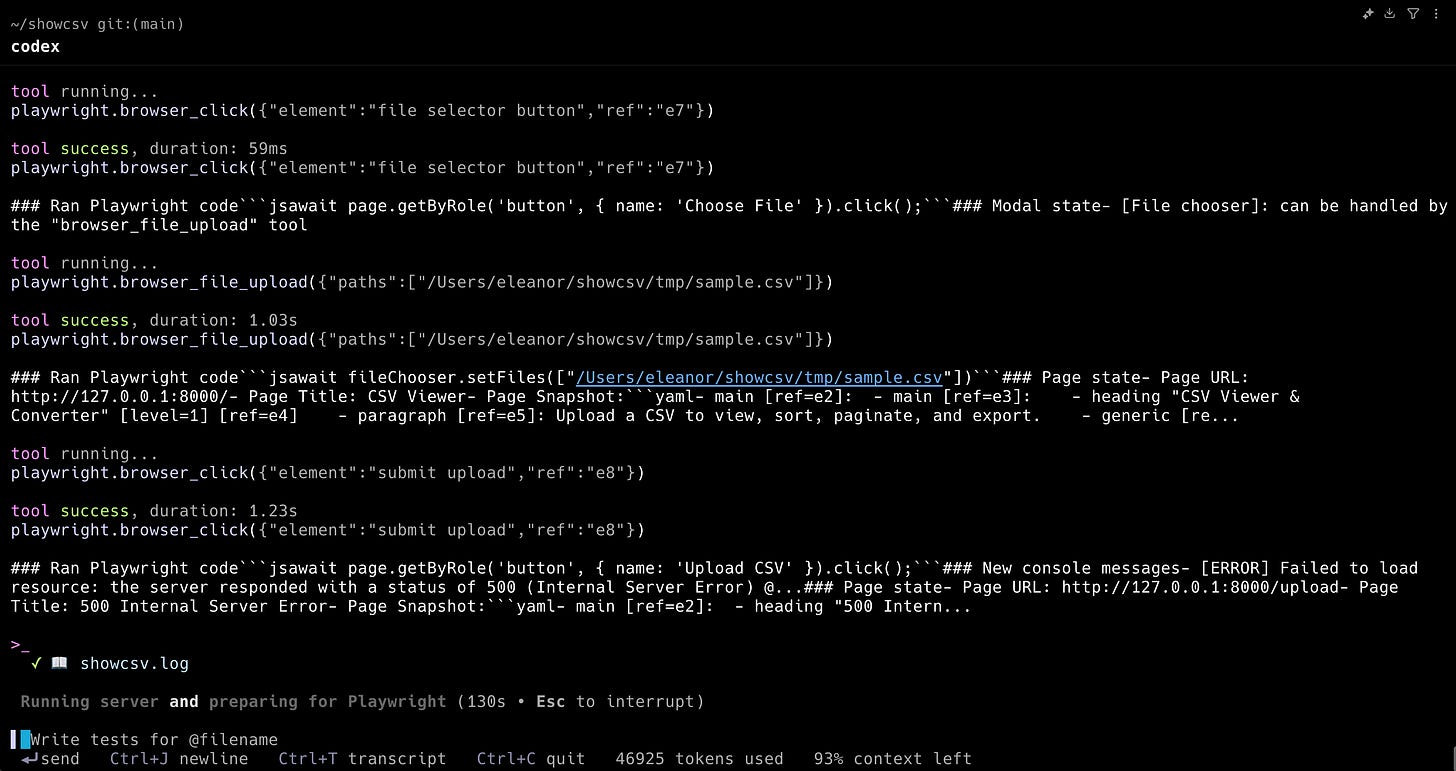

Implementation and Self-Correction

During implementation, the updated CLI interface displayed its progress effectively. While not as polished as some competitors, it clearly showed code patches being applied, reasoning steps for decisions, tool calls to MCP servers, and progress through the implementation plan. The generated code was appropriate for an AIR application, with correct route setup, template structure, and CSV processing logic. The code quality was high — clean, idiomatic Python that followed AIR's conventions properly.
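The review does not reproduce the generated code. As a rough illustration of the CSV processing logic a viewer and converter needs, here is a hypothetical, framework-agnostic sketch (not Codex's output, and not AIR-specific): parse uploaded CSV text, render it as an escaped HTML table for viewing, and convert it to JSON. All function names are illustrative.

```python
import csv
import html
import io
import json


def parse_csv(text: str) -> tuple[list[str], list[list[str]]]:
    """Split CSV text into a header row and data rows."""
    # newline="" lets the csv module handle line breaks inside quoted fields.
    rows = list(csv.reader(io.StringIO(text, newline="")))
    if not rows:
        return [], []
    return rows[0], rows[1:]


def to_html_table(header: list[str], rows: list[list[str]]) -> str:
    """Render rows as an HTML table, escaping every cell."""
    head = "".join(f"<th>{html.escape(h)}</th>" for h in header)
    body = "".join(
        "<tr>" + "".join(f"<td>{html.escape(cell)}</td>" for cell in row) + "</tr>"
        for row in rows
    )
    return f"<table><thead><tr>{head}</tr></thead><tbody>{body}</tbody></table>"


def to_json(header: list[str], rows: list[list[str]]) -> str:
    """Convert rows to a JSON array of objects keyed by the header."""
    return json.dumps([dict(zip(header, row)) for row in rows])


if __name__ == "__main__":
    header, rows = parse_csv('name,qty\nApple,3\n"Pear, green",5\n')
    print(to_html_table(header, rows))
    print(to_json(header, rows))
```

Escaping every cell matters because uploaded CSV content is untrusted input that ends up in the page.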

The testing phase revealed impressive capabilities:

Codex used Playwright to launch the app locally

Automatically tested functionality through browser automation

Discovered errors in the application and resolved them

Found and fixed UI rendering issues independently

Re-tested until everything worked correctly

No human intervention required for debugging
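Codex drove a real browser through the Playwright MCP server. The standard-library sketch below is a deliberately simpler stand-in: an HTTP-level smoke check that starts an app on a free local port, fetches a page, and reports which expected fragments are missing. It does not run JavaScript or render anything, so it only illustrates the launch, check, report shape of that loop. `DemoHandler` is a hypothetical placeholder for the app under test.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the application being tested."""

    def do_GET(self) -> None:
        body = b"<html><body><table><tr><td>ok</td></tr></table></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging


def smoke_check(url: str, must_contain: list[str]) -> list[str]:
    """Fetch url and return the expected fragments that are missing."""
    # An empty ProxyHandler keeps localhost traffic away from any configured proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=5) as resp:
        page = resp.read().decode("utf-8")
    return [frag for frag in must_contain if frag not in page]


def run_smoke_test(must_contain: list[str]) -> list[str]:
    """Start the app on a free port, check it, and always shut it down."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        return smoke_check(f"http://127.0.0.1:{port}/", must_contain)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    missing = run_smoke_test(["<table>", "ok"])
    print("all checks passed" if not missing else f"missing: {missing}")
```

An empty result means the page passed; a non-empty list is the kind of signal an agent would act on before re-testing.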

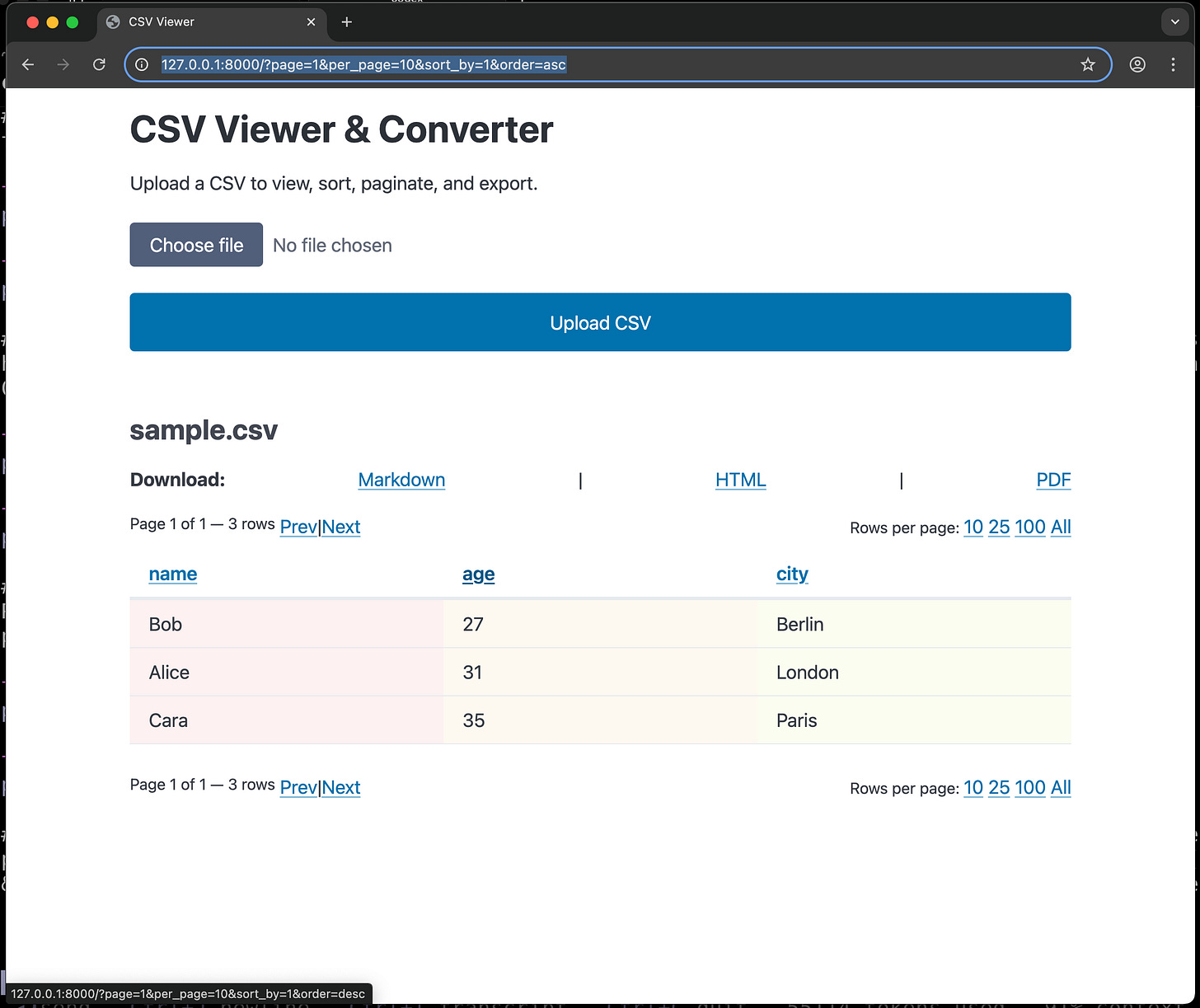

This self-correction loop demonstrated genuine autonomous problem-solving rather than simple code generation. Watching Codex exercise the application through Playwright and iterate on solutions felt like working with a thorough colleague who catches and fixes their own mistakes.

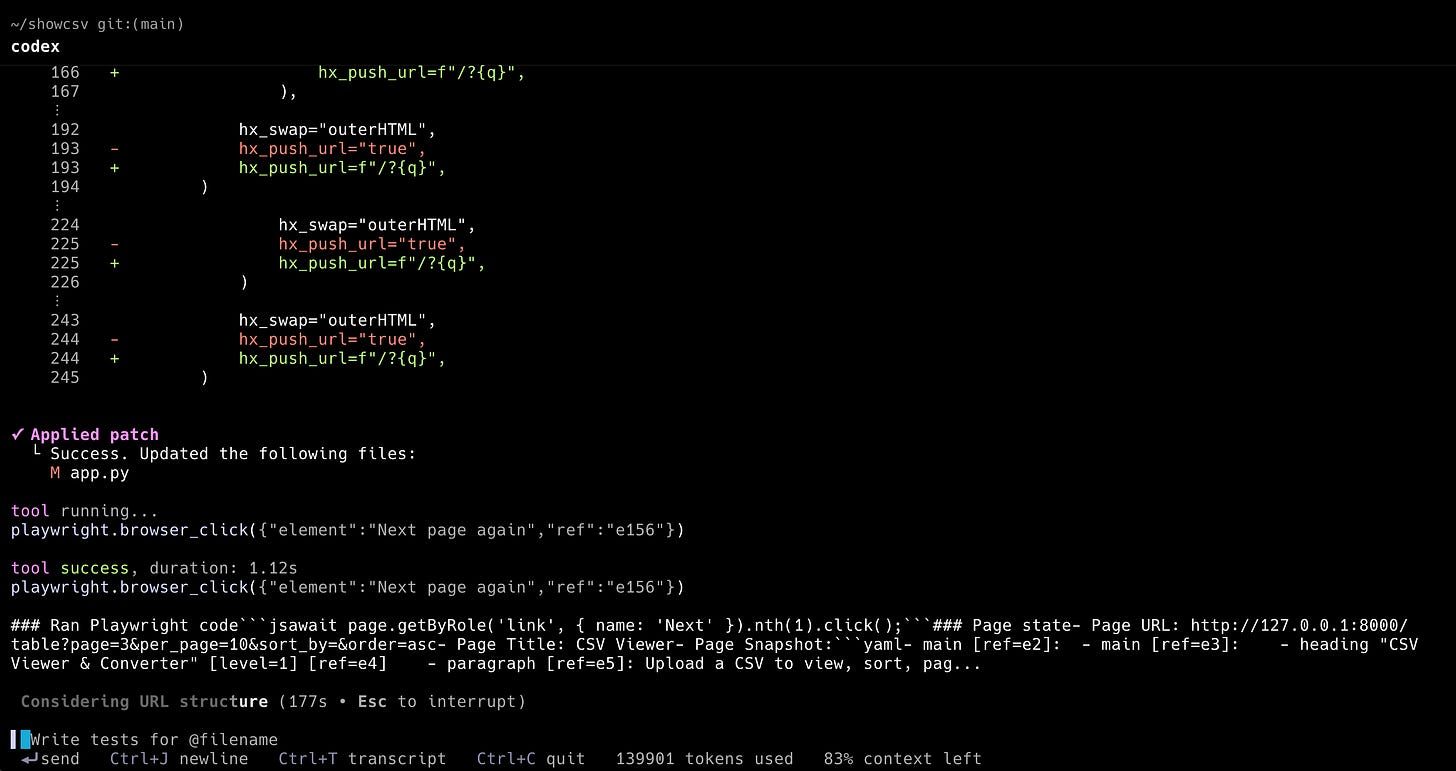

The HTMX Enhancement

After completing the basic application, Codex made an unsolicited suggestion: it could enhance the UI by implementing HTMX for inline replacement of dynamic elements. This wasn't in my original requirements, but Codex had learned from the AIR documentation that HTMX integration was one of the framework's key features.

I accepted the suggestion, and Codex quickly implemented the enhancements. The resulting application now featured smooth, in-page navigation with HTMX fragments replacing full page reloads. The implementation was correct and followed AIR's conventions — exactly the kind of modern, responsive UI that the framework was designed to facilitate.
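The review does not show how the HTMX enhancement was wired into AIR, so this is a framework-agnostic sketch of the general pattern. htmx marks the requests it issues with an `HX-Request: true` header, and the server answers those with an HTML fragment rather than a full page, which htmx swaps into the target element. The `/rows` route, the `handle_rows` helper, and the live-search input are hypothetical.

```python
import html
from collections.abc import Mapping


def is_htmx_request(headers: Mapping[str, str]) -> bool:
    # htmx sends "HX-Request: true" with every request it issues;
    # HTTP header names are case-insensitive, so normalise before comparing.
    return any(k.lower() == "hx-request" and v.strip().lower() == "true" for k, v in headers.items())


def rows_fragment(rows: list[list[str]]) -> str:
    """Render <tr> elements only: the piece htmx swaps into the page."""
    return "".join(
        "<tr>" + "".join(f"<td>{html.escape(cell)}</td>" for cell in row) + "</tr>"
        for row in rows
    )


def handle_rows(headers: Mapping[str, str], rows: list[list[str]], query: str = "") -> str:
    """Hypothetical /rows handler: fragment for htmx, full page otherwise."""
    matching = [row for row in rows if query.lower() in " ".join(row).lower()]
    fragment = rows_fragment(matching)
    if is_htmx_request(headers):
        return fragment
    # Full page (assumed to load htmx): as the user types, the input re-requests
    # /rows?q=... and htmx replaces only the contents of #rows.
    return (
        "<html><body>"
        '<input name="q" hx-get="/rows" hx-trigger="input changed delay:300ms" '
        'hx-target="#rows" hx-swap="innerHTML">'
        f'<table><tbody id="rows">{fragment}</tbody></table>'
        "</body></html>"
    )
```

For example, `handle_rows({"HX-Request": "true"}, [["Apple", "3"], ["Pear", "5"]], "pear")` returns just the matching `<tr>`, while the same call without the header returns the whole page. Serving both from one handler keeps the first page load working without JavaScript.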

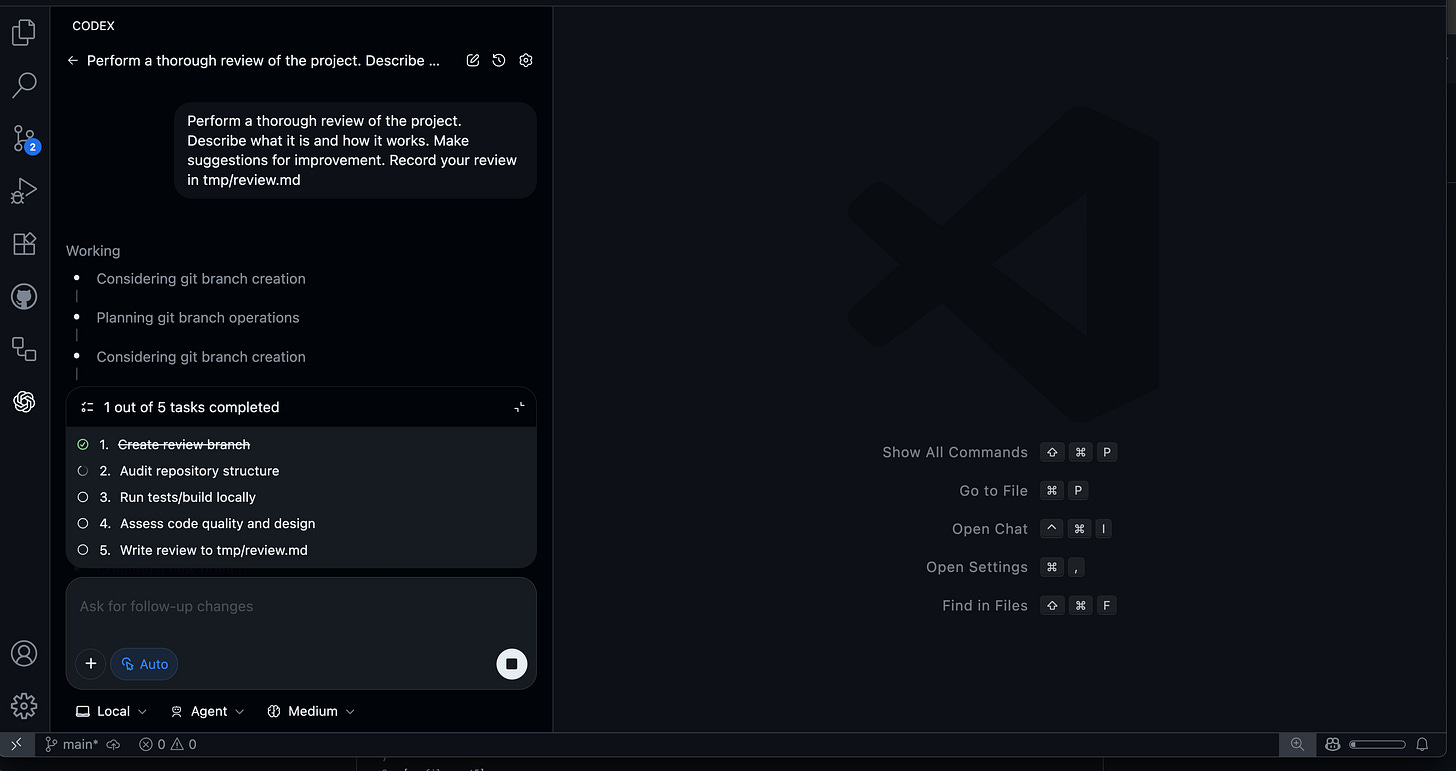

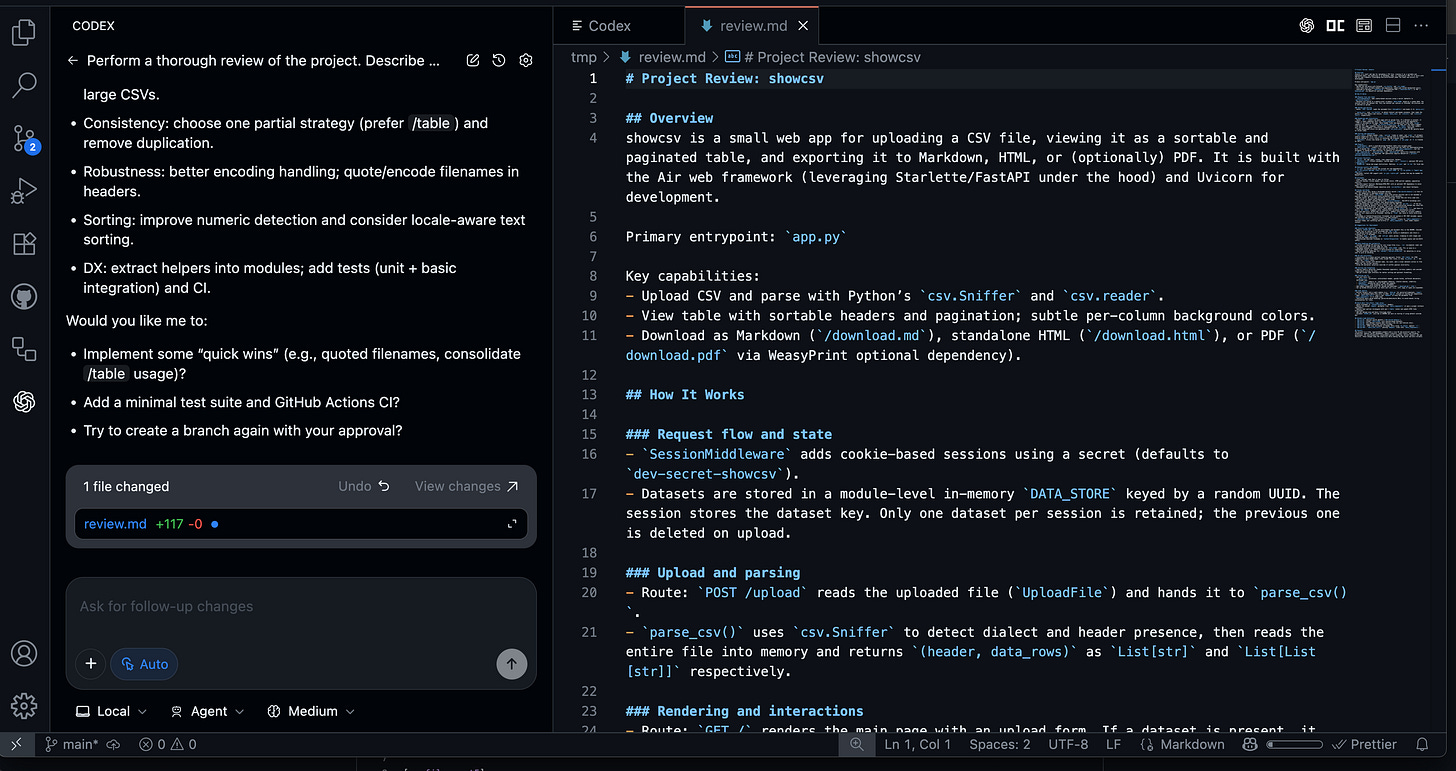

The Visual Studio Code Extension Experience

After the successful terminal session, I tested the new Visual Studio Code extension. Installation was straightforward, and I launched it in the same project directory, asking Codex to perform a comprehensive code review and document its findings.

Extension observations:

Clean presentation of workflow steps

Clear tracking of file changes

Native VS Code integration feel

Some UI rough edges indicating early development

Summary and Conclusions

Codex CLI performed well throughout testing, successfully completing a complex task involving an unfamiliar framework. GPT-5's capabilities were the key differentiator: the model followed instructions precisely, learned from documentation effectively, and applied that knowledge appropriately. Every requirement from the task definition was addressed, and every custom instruction was followed without deviation.

The Rust implementation proved its worth through fast execution and responsive performance throughout the session. The simplicity of the interface, while less polished than competitors, meant there was less to go wrong and fewer distractions from the core task of driving the agent.

Key takeaways from the experience:

ChatGPT Plus integration worked without hitting rate limits

Vague subscription terms remain concerning for production use

Open-source nature provides flexibility and longevity

Community contributions enable non-OpenAI model support

Simple interface reduces complexity while maintaining functionality

The test with the AIR framework proved particularly instructive about the current state of AI coding assistants. Codex CLI successfully learned a new framework from documentation alone, understood its idioms, and applied that knowledge to build a functional application with appropriate enhancements. This ability to work with unfamiliar technologies using only provided documentation represents a significant capability for real-world development scenarios.

For developers who prefer simple, efficient tools over feature-rich interfaces, Codex CLI offers a practical solution. While it may not have the visual polish of established competitors, it delivers on its core promise: helping developers write code more effectively through AI assistance. The combination of GPT-5's impressive capabilities, the lightweight Rust implementation, and the flexibility of open source creates a tool that deserves serious consideration for any developer's toolkit.
