You Can Just Do Things

Peter Steinberger's AI Coding Workflow

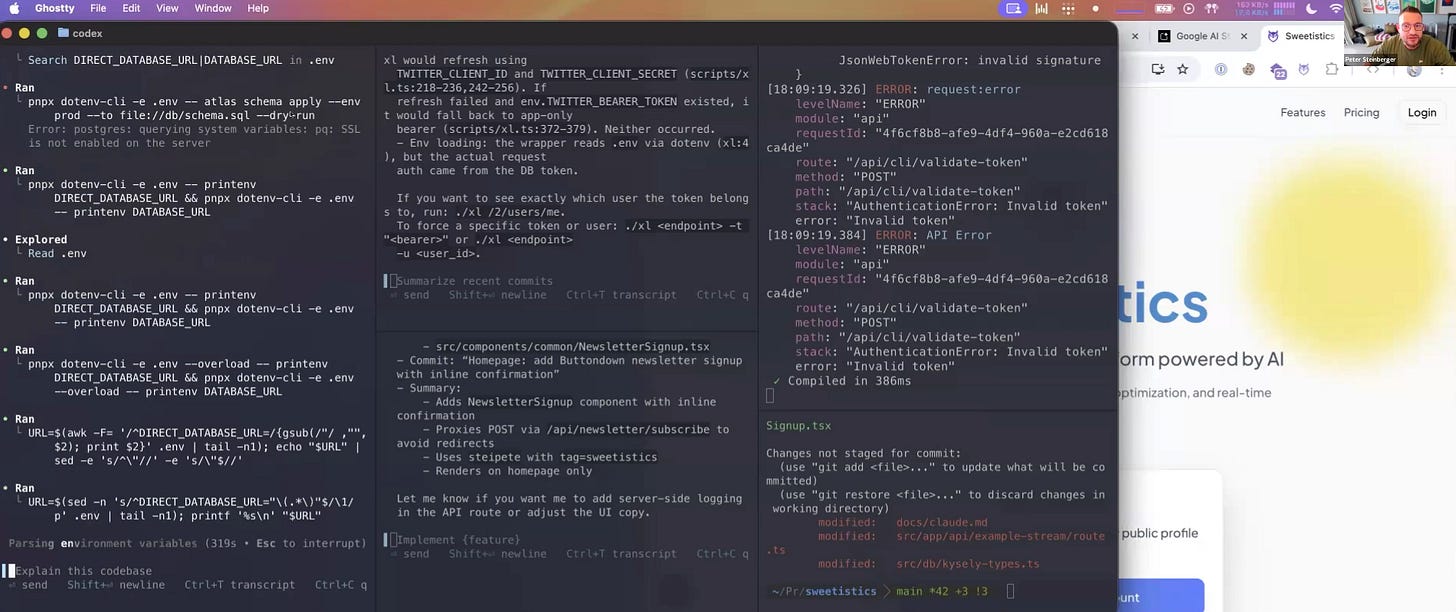

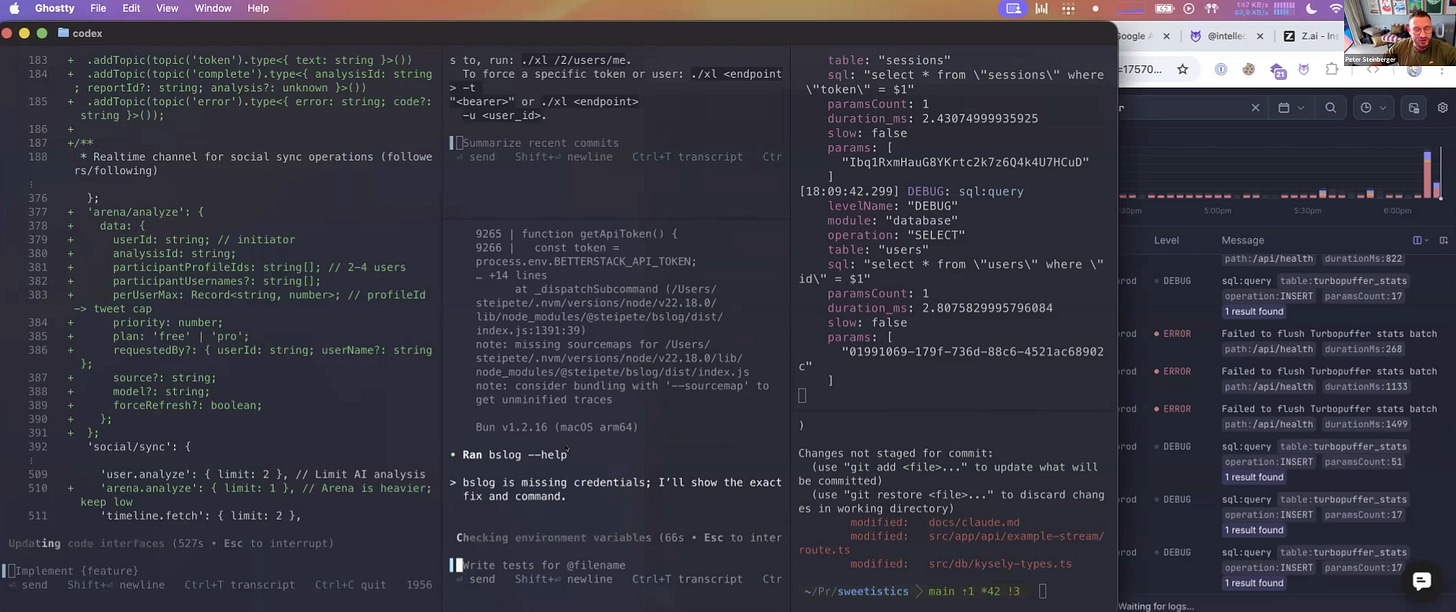

Peter Steinberger demonstrated his wild but highly effective AI-assisted development workflow in a live coding session. The session showcased how he builds features rapidly by embracing what he calls "chaos engineering" — running multiple AI agents simultaneously on the main branch without traditional safeguards.

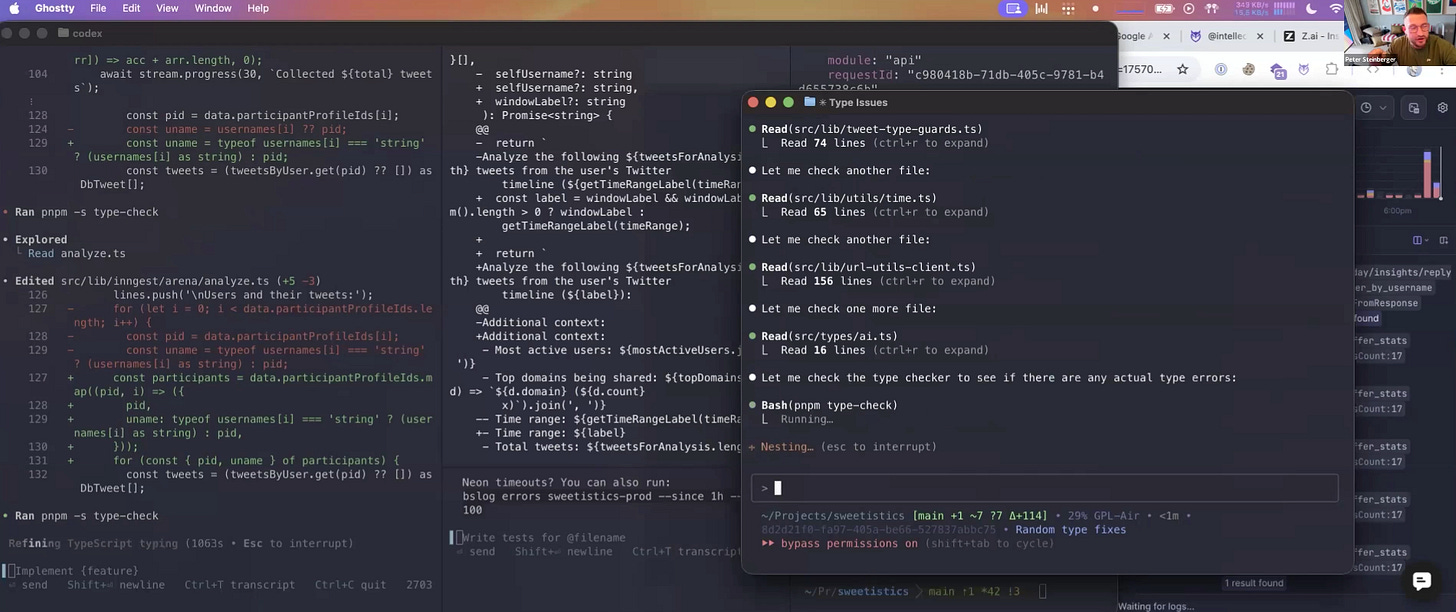

From Claude to Codex

Peter recently made a significant switch in his AI tooling, moving from Anthropic's Claude Code to OpenAI's Codex after running into Claude's tighter usage limits:

"I used to love Claude Code. I still love it, but since I guess this week, I almost fully moved to Codex... the whole move was motivated because there's a little bit harder limits on Claude and that led me to explore other things."

The key advantage he found with Codex is its eagerness to read the existing codebase:

"It turns out I like Codex a lot more... it is much more eager to actually read your code base where Claude often just tries random stuff. So you really have to be very diligent with Claude and like plan it first and tell it to please look here and please read this. Whereas GPT is much more eager to read all the files and then usually just have the right thing."

The Main Branch Philosophy

One of Peter's most controversial practices is working directly on the main branch with multiple agents simultaneously. When asked about using git worktrees, he explained:

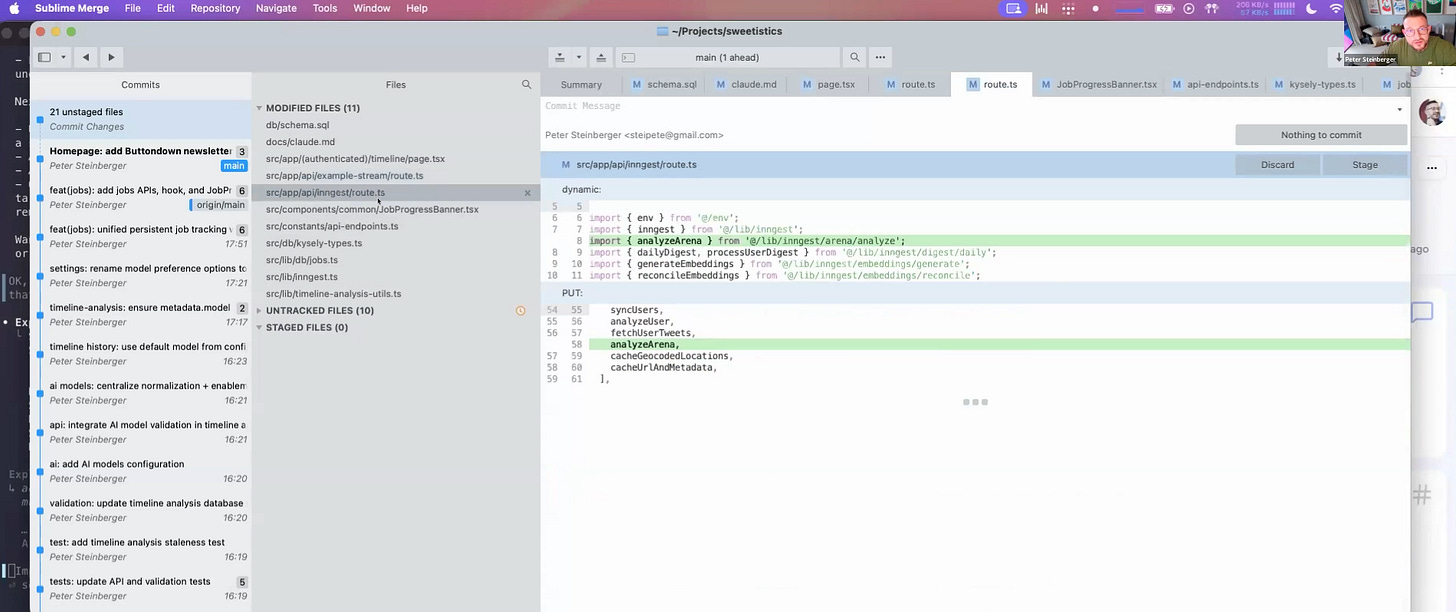

"I tried the whole worktree approach, but you know, obviously it just slowed me down. I had to deal with merge conflicts... You should just work on one, two, three features at the same time in the main repository. If you're clever about it, if you work on different things, agent interference will be minimal and you can still do nice separated commits."

His terminal setup reflects this approach — he uses Ghostty with multiple split screens, each running different agents. During the demo, he had agents working on different aspects of the same feature simultaneously.

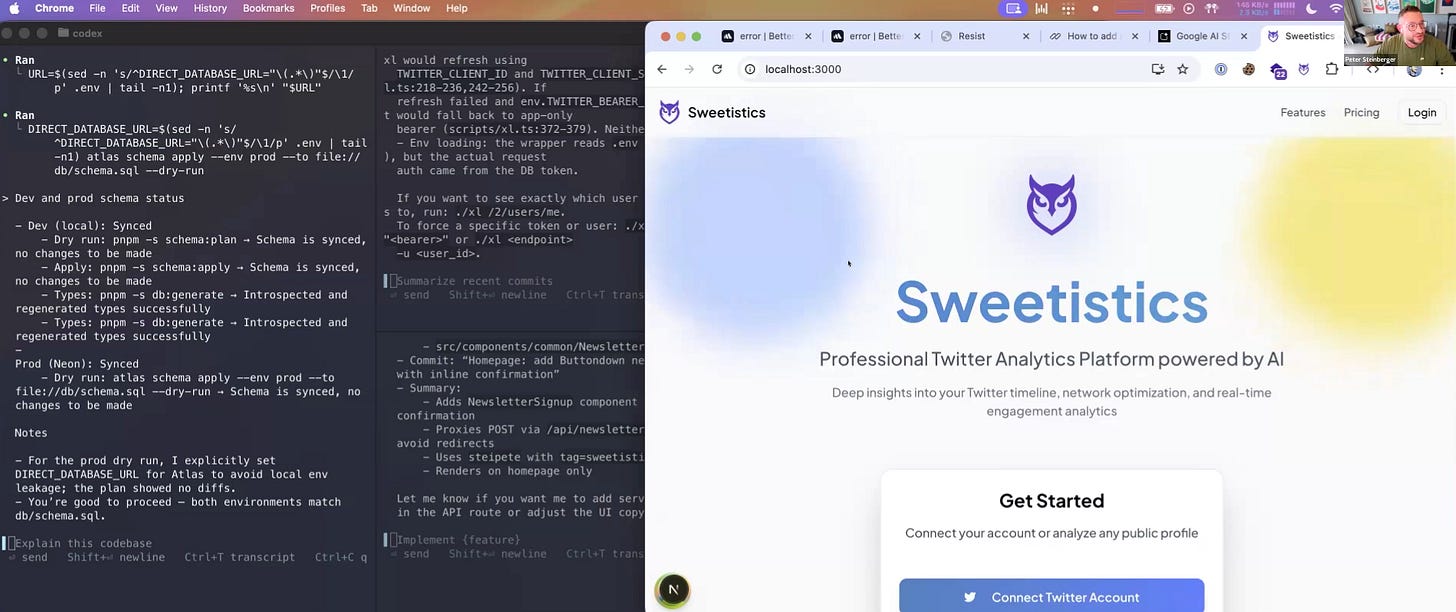

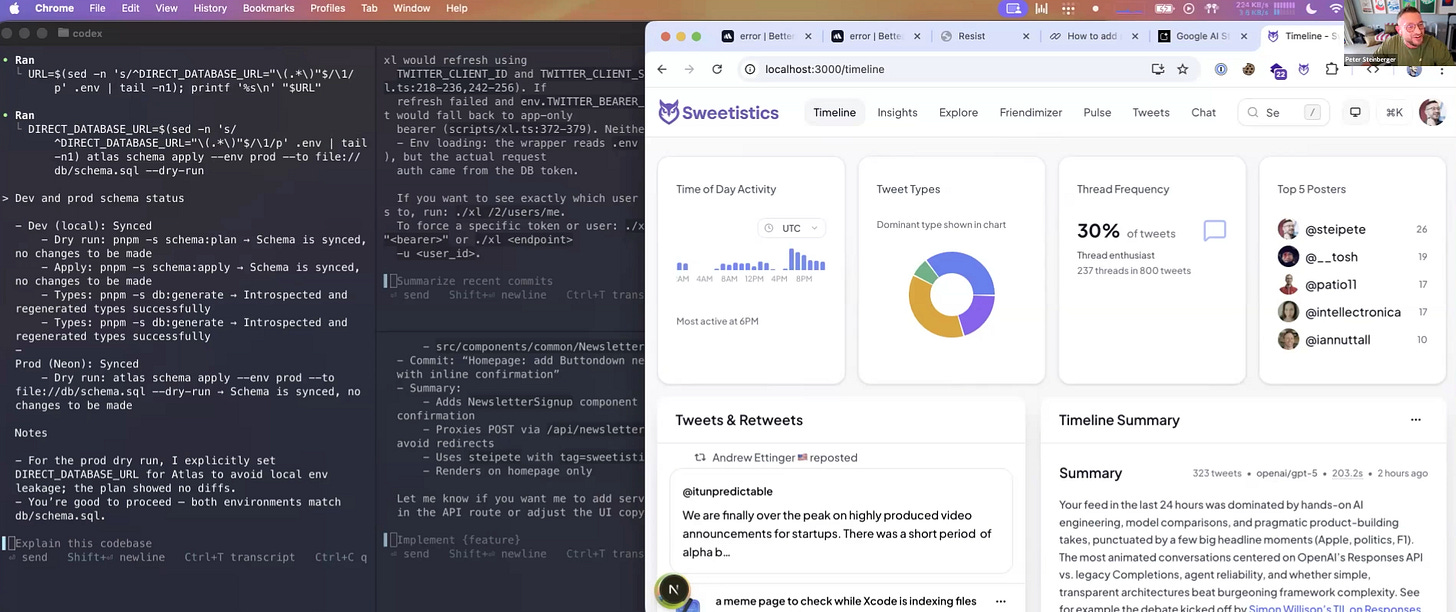

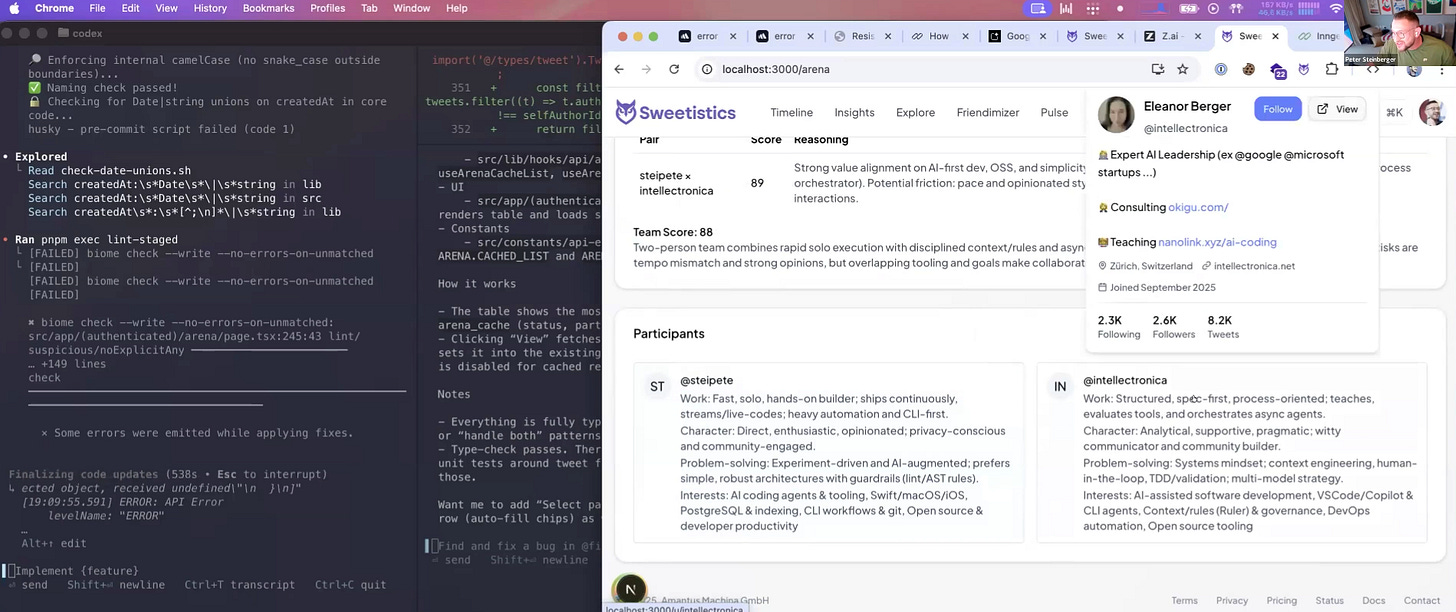
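Peter's point about keeping commits separated even while several agents edit the same checkout can be made concrete with a small helper. This is a minimal sketch, not something shown in the session: it assumes each feature lives under its own directory prefix (the `src/arena/` path is hypothetical), parses `git status --porcelain` output, and stages and commits only the files belonging to one feature, so other agents' in-progress changes stay out of that commit.

```python
import subprocess


def changed_paths(porcelain: str) -> list[str]:
    """Parse `git status --porcelain` (v1) output into a list of paths.

    Assumes no path needs quoting by git (no spaces or unusual characters).
    """
    paths = []
    for line in porcelain.splitlines():
        if len(line) < 4:
            continue
        path = line[3:]  # two status characters plus a space precede the path
        if " -> " in path:  # rename entries look like "R  old -> new"
            path = path.split(" -> ", 1)[1]
        paths.append(path)
    return paths


def paths_for_feature(paths: list[str], prefixes: list[str]) -> list[str]:
    """Keep only paths under the directories one feature owns (hypothetical ownership rule)."""
    return [p for p in paths if any(p.startswith(pre) for pre in prefixes)]


def commit_feature(prefixes: list[str], message: str) -> None:
    """Stage and commit one feature's files, leaving other agents' work untouched."""
    status = subprocess.run(
        ["git", "status", "--porcelain"], capture_output=True, text=True, check=True
    ).stdout
    files = paths_for_feature(changed_paths(status), prefixes)
    if not files:
        return
    subprocess.run(["git", "add", "--", *files], check=True)
    # Passing paths to `git commit` limits the commit to those files only.
    subprocess.run(["git", "commit", "-m", message, "--", *files], check=True)
```

For example, `commit_feature(["src/arena/"], "Add Arena page")` would commit only the Arena files. Files that two agents touch at the same time still need a human look before committing.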

Building the Arena Feature

The live demonstration involved building a new "Arena" feature for his Twitter analytics website. The feature would compare multiple Twitter users to determine their compatibility as potential co-founders or team members. Peter described his approach:

"We're going to build a new feature. We call it Arena. What I want to do here is I want to have the user picker and I want to be able to pick up to four users. And then I want to have a button where we press and then we will fetch tweets from each user."

The feature would analyze up to 1,000 tweets total, evaluate each person's character traits and problem-solving approach, then calculate compatibility scores.
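The session doesn't show how the tweet budget is split or how the scores are computed. The sketch below only illustrates the bookkeeping around that analysis, under loudly stated assumptions: the 1,000-tweet total is divided as evenly as possible across the picked users, each user has already been reduced to trait scores between 0 and 1 (trait names are hypothetical), and compatibility is a simple similarity measure chosen purely for illustration.

```python
from itertools import combinations

MAX_USERS = 4
TOTAL_TWEET_BUDGET = 1000


def tweets_per_user(usernames: list[str], budget: int = TOTAL_TWEET_BUDGET) -> dict[str, int]:
    """Split the total tweet budget as evenly as possible across the picked users."""
    if not 1 <= len(usernames) <= MAX_USERS:
        raise ValueError(f"pick between 1 and {MAX_USERS} users")
    base, extra = divmod(budget, len(usernames))
    # Give the remainder to the first users so the total equals the budget exactly.
    return {u: base + (1 if i < extra else 0) for i, u in enumerate(usernames)}


def compatibility(a: dict[str, float], b: dict[str, float]) -> float:
    """Illustrative score in [0, 1]: 1 minus the mean absolute trait difference."""
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0
    return 1 - sum(abs(a[t] - b[t]) for t in shared) / len(shared)


def pairwise_scores(traits: dict[str, dict[str, float]]) -> dict[tuple[str, str], float]:
    """Score every pair of picked users, rounded to two decimals."""
    return {
        (x, y): round(compatibility(traits[x], traits[y]), 2)
        for x, y in combinations(traits, 2)
    }
```

With four users each gets 250 tweets; with three, the split is 334/333/333. Similarity is only one possible choice: co-founder fit might just as well reward complementary traits, which would mean inverting or reweighting some dimensions.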

Context Management Without MCPs

A key insight from Peter's workflow is his rejection of MCP servers in favor of custom CLIs and direct context management:

"I don't use any MCPs. If you follow me on Twitter, you kind of know my sentiment towards that. I think keeping your context as clean as possible is important. So if you add MCPs, you just bloat up the context."

Instead, he built custom CLIs for specific needs. For example, he created bslog for fetching logs from Better Stack and xl as a wrapper around curl for Twitter API calls. He explained the efficiency of this approach:

"The beauty is all you need is like one line in your CLAUDE file or two that explain that the CLI exists. And then the model will eventually try some random shit. It will fail. It will print the help message that pulls in all the instructions, how the thing has to be used. And then the model knows how to use the CLI."


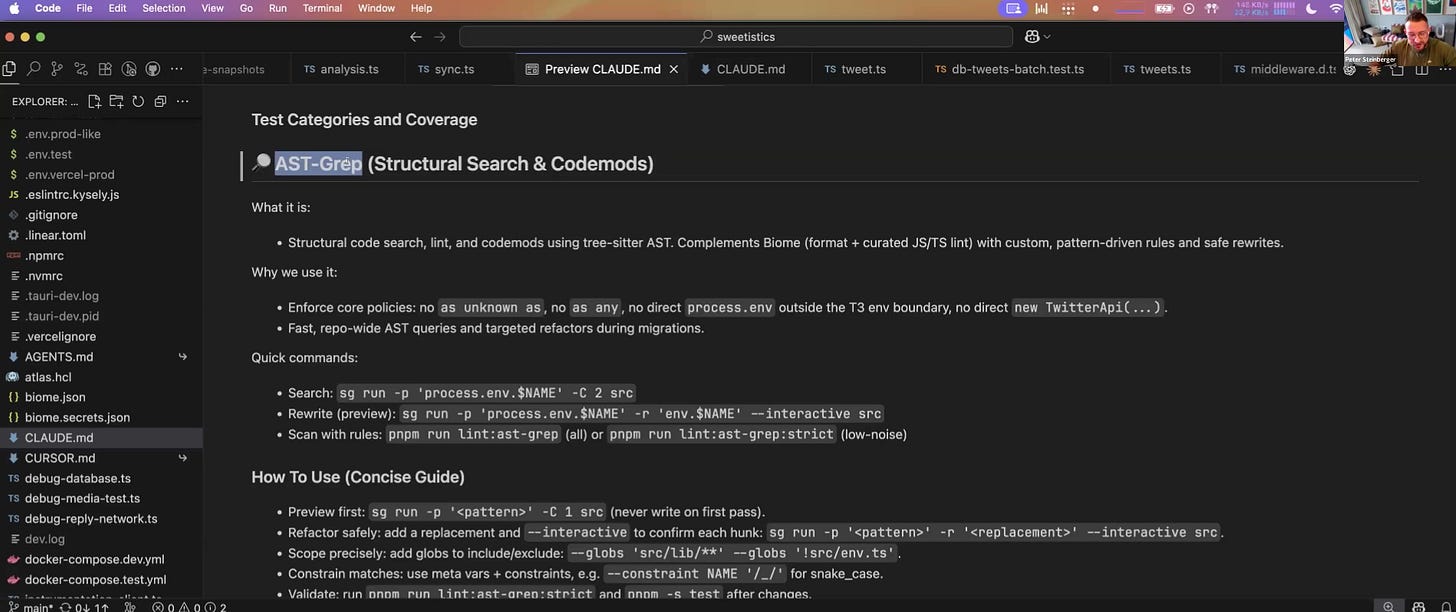

The CLAUDE.md Living Document

Peter maintains a CLAUDE.md file that serves as a living document of instructions and context for the AI agents. He allows agents to write to it and treats it as an evolving resource:

"See this as a living document, add stuff, or just tell the model to add stuff. And then at some point you can also tell the model to find redundancies and remove stuff."

The file contains style guides, database schema management instructions, CLI documentation, and refactoring notes. He emphasized keeping it concise:

"I think the word concise is important. Otherwise they're going to write a paragraph. And again, you want to be token-efficient, especially because the CLAUDE file gets messy eventually."

Voice-First Development

Peter uses WisprFlow for voice-to-text input, which adds another layer of efficiency to his workflow. He noted how the tool corrects his intent:

"I think the crucial thing that not a lot of other apps do is it also corrects my intent. So let's say I say, implement feature A, oh, actually I meant feature B. It just says implement feature B... with the semantic parsing, corrects what you say into the correct thing that you actually want to say."

YOLO Philosophy

When asked about manual approvals for agent actions, Peter was unequivocal:

"Nothing bad ever happened. Just be smart about it. You don't ask the model to delete stuff unless it's a very simple thing... otherwise everything is in git, backups run every night."

He argued that manual approval defeats the purpose of moving fast:

"To actually be super diligent you would have to be very attentive which kind of defeats the point of moving fast. So I think yes, YOLO is the only way of running agents."

Testing Strategy

Rather than following test-driven development (TDD), Peter writes tests after the implementation:

"No, I don't do TDD ... I write tests afterwards. It's the best way to get the model to correct itself."

He emphasized the importance of asking models to write tests before context runs out:

"Always ask, write tests. I mean, models in general are not very good at writing good tests, but it's usually good enough to find issues at least of the feature that you're building."
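As a hedged illustration of writing tests after the implementation, here is a hypothetical helper for the Arena user picker followed by the kind of tests an agent might be asked to add once the feature works. The function name and its rules (strip `@`, case-insensitive de-duplication, a four-user cap) are assumptions for the example, not code from the session.

```python
import unittest

MAX_ARENA_USERS = 4


def normalize_picks(handles: list[str]) -> list[str]:
    """Clean user-picker input: strip whitespace and '@', drop blanks and
    case-insensitive duplicates (keeping first-seen order), cap at four users."""
    seen: set[str] = set()
    picks: list[str] = []
    for raw in handles:
        handle = raw.strip().lstrip("@")
        key = handle.lower()
        if handle and key not in seen:
            seen.add(key)
            picks.append(handle)
    if len(picks) > MAX_ARENA_USERS:
        raise ValueError(f"Arena supports at most {MAX_ARENA_USERS} users, got {len(picks)}")
    return picks


class NormalizePicksTests(unittest.TestCase):
    """Tests written after the implementation, to catch edge cases the first pass missed."""

    def test_strips_at_signs_and_whitespace(self):
        self.assertEqual(normalize_picks([" @example_user ", "@Alice"]), ["example_user", "Alice"])

    def test_duplicates_are_case_insensitive(self):
        self.assertEqual(normalize_picks(["alice", "ALICE", "@alice"]), ["alice"])

    def test_blank_entries_are_ignored(self):
        self.assertEqual(normalize_picks(["", "  ", "@", "bob"]), ["bob"])

    def test_more_than_four_users_is_rejected(self):
        with self.assertRaises(ValueError):
            normalize_picks(["a", "b", "c", "d", "e"])
```

Run the file with `python -m unittest`; a failing test gives the agent a concrete error message to fix against, which is the self-correction loop Peter describes.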

Results and Takeaways

By the end of the session, Peter successfully built the Arena feature that could compare Twitter users' compatibility. The feature analyzed users' tweets and provided compatibility scores with detailed analysis of working styles and potential friction points.

Eleanor summarized a key lesson from the session:

"If there's one thing I'm taking from the session today, it's that people will try to scare you that you have to use sandboxing, you have to use branches, you have to do it this way... but like the title of the session, you can also just do things."

Peter's approach demonstrates that with the right setup and confidence, AI-assisted development can be remarkably fast and effective without many of the traditional safeguards developers typically rely on. His workflow prioritizes speed and iteration over caution, relying on git for safety and multiple agents for parallel progress.
