Working with Asynchronous Coding Agents

Your guide for delegating complete tasks to cloud-hosted asynchronous agents

While most AI-assisted development focuses on interactive tools like IDE extensions, asynchronous coding agents represent a fundamentally different — and potentially more powerful — approach to AI-augmented software development. These background agents accept complete work items, execute them independently, and return finished solutions while you focus on other tasks. This guide explores why asynchronous agents deserve more attention than they currently receive, provides practical guidelines for working with them effectively, and shares real-world experience using multiple agents to refactor a production codebase.

Why Asynchronous Agents Are the Future of AI-Assisted Development

The Productivity Multiplier

Asynchronous coding agents — also called background agents or cloud agents — unlock productivity in ways that interactive tools cannot match. The fundamental difference lies in how they change your relationship with AI assistance. While interactive AI keeps you tethered to the development process, requiring constant attention and decision-making, asynchronous agents transform you from a driver into a delegator.

Consider the typical workflow with interactive AI: you're writing code with an AI assistant suggesting completions, answering questions, or acting on your ad-hoc prompts. It's helpful, but you're still fundamentally doing the work yourself, just with enhanced capabilities. You can't step away, you can't parallelize, and it's hard to hand off complete features to be worked on while you attend to other matters.

Asynchronous agents change this model entirely. You prepare a work item in the form of a ticket, issue, or task definition, hand it off to the agent, and then move on to other work. You can have several of these tasks running in parallel, each agent working independently on different parts of your codebase. This isn't just about saving time — it's about restructuring how software gets built.

Key advantages include:

True parallel processing: Launch multiple agents on different tasks simultaneously

Developer time optimization: Hand off complete work items and focus on higher-value activities

Broader accessibility: Product managers and non-developers can create specifications and delegate implementation

Better integration: Fits naturally into existing software development workflows with issues, tickets, and pull requests

Scalability: Add more concurrent tasks without adding more developers

Asynchronous agents also democratize software development in ways that interactive tools cannot. A product manager who understands requirements but can't code can write a detailed specification and have it implemented. A senior developer can delegate routine refactoring while focusing on system design. This isn't about replacing developers — it's about amplifying what teams can accomplish.

The Current Landscape (an incomplete survey)

The ecosystem of asynchronous coding agents is evolving rapidly, and each agent offers different integration points and capabilities:

GitHub Copilot Agent: Accessible through GitHub by assigning issues to the Copilot user, with additional VS Code integration

Codex: OpenAI's hosted coding agent, available through their platform and accessible from ChatGPT

OpenHands: Open-source agent available through the All Hands web app or self-hosted deployments

Jules: Google Labs product with GitHub integration capabilities

Devin: The pioneering coding agent from Cognition that first demonstrated this paradigm

Cursor background agents: Embedded directly in the Cursor IDE

CI/CD integrations: Many command-line tools can function as asynchronous agents when integrated into GitHub Actions or continuous integration scripts

Guidelines for Effective Delegation

The Art of Complete Specification

The fundamental rule for working with asynchronous agents contradicts much of modern agile thinking: create complete and precise task definitions upfront. This isn't about returning to waterfall methodologies, but rather recognizing that when you delegate to an AI agent, you need to provide all the context and guidance that you would naturally provide through conversation and iteration with a human developer.

The difference between interactive and asynchronous AI work is significant. In interactive mode, we tend to underspecify because we're exploring, experimenting, and can provide immediate course corrections. We might type a quick prompt, see what the AI produces, and refine from there. This exploratory approach works when you're actively engaged, but it fails when you need to hand off work and walk away.

Building Complete Task Definitions

A complete task specification goes beyond describing what needs to be done. It should encompass the entire development lifecycle for that specific task. Think of it as creating a mini project plan that an intelligent but literal agent can follow from start to finish.

Essential components include:

Requirements: Not just what to build, but why it matters and how it fits into the larger system

Acceptance criteria: Specific, testable conditions that define success

Implementation plan: Suggested approach, key files to modify, architectural patterns to follow

Testing requirements: Which tests to write, what edge cases to cover, performance benchmarks

Development process: Branch naming, commit message format, PR description template

Style guidelines: Language-specific conventions, project-specific patterns, documentation standards

Tool specifications: Approved libraries, frameworks to avoid, performance considerations
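To make the checklist above concrete, here is a minimal sketch of a task specification as a data structure that refuses to render until every component is filled in. It is a hypothetical illustration: the `TaskSpec` class, its field names, and the Markdown layout are assumptions, not a format that any of the agents discussed here requires.

```python
from dataclasses import dataclass, field, fields


@dataclass
class TaskSpec:
    """Hypothetical container mirroring the essential components listed above.

    Field names are illustrative; no agent requires this exact structure.
    """
    title: str
    requirements: str = ""
    acceptance_criteria: list[str] = field(default_factory=list)
    implementation_plan: str = ""
    testing_requirements: str = ""
    development_process: str = ""
    style_guidelines: str = ""
    tool_specifications: str = ""

    def missing_sections(self) -> list[str]:
        """Return the names of components that are still empty (title excluded)."""
        return [
            f.name
            for f in fields(self)
            if f.name != "title" and not getattr(self, f.name)
        ]

    def to_issue_body(self) -> str:
        """Render the spec as Markdown for an issue description, or fail if incomplete."""
        if missing := self.missing_sections():
            raise ValueError(f"incomplete spec, missing: {', '.join(missing)}")
        # Acceptance criteria become a task list so progress is visible on the issue.
        criteria = "\n".join(f"- [ ] {c}" for c in self.acceptance_criteria)
        return "\n\n".join([
            f"## Requirements\n{self.requirements}",
            f"## Acceptance criteria\n{criteria}",
            f"## Implementation plan\n{self.implementation_plan}",
            f"## Testing requirements\n{self.testing_requirements}",
            f"## Development process\n{self.development_process}",
            f"## Style guidelines\n{self.style_guidelines}",
            f"## Tool specifications\n{self.tool_specifications}",
        ])
```

For example, a spec created with only a title and requirements reports the six components still to be written, which doubles as a checklist for the next drafting pass.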

Leveraging AI for Specification Writing

One effective technique for creating comprehensive specifications is to use AI assistants that have full awareness of your codebase. Tools like Gemini 2.5 Pro, with its large context window, and ChatGPT Deep Research can analyze your entire project and help generate task definitions that account for existing patterns, dependencies, and architectural decisions. Local IDE or terminal-based agents with access to your repository are also well suited to preparing specifications for asynchronous agents.

This creates a recursive relationship: you're using AI to write better specifications for AI. The planning assistant can identify edge cases you might miss, suggest implementation approaches that align with your existing code, and ensure consistency across different task definitions. This planning phase, while requiring upfront investment, pays dividends in reduced back-and-forth and higher success rates with asynchronous agents.

Learning from Imperfect Results

When an agent doesn't deliver what you expected, the temptation is to engage in corrective dialogue — to guide the agent toward the right solution through feedback. While some agents support this interaction model, it's often more valuable to treat failures as specification bugs. Ask yourself: what information was missing that caused the agent to make incorrect decisions? What assumptions did I fail to make explicit?

This approach builds your specification-writing skills rapidly. After a few iterations, you develop an intuition for what needs to be explicit, what edge cases to cover, and how to structure instructions for maximum clarity. The goal isn't perfection on the first try, but rather continuous improvement in your ability to delegate effectively.

A Real-World Tour: Refactoring Ruler with Asynchronous Agents

Setting the Stage

To test the capabilities and limitations of asynchronous agents, I used them exclusively for a comprehensive refactoring of Ruler, a tool I've been developing under an unusual constraint: I've never written a line of its code myself, relying on AI for all development. This decision, combined with my policy of accepting all community contributions that don't cause regressions and have good test coverage, led to a codebase that needed architectural cleanup.

The accumulation of technical debt was predictable. Different AI sessions produced code with varying styles and patterns. Community contributors each brought their own approaches and preferences. While the tool worked well and had grown successfully, the codebase had become a patchwork of different implementations. Before adding new features, I needed to consolidate, refactor, and establish consistency — an ideal test case for asynchronous agents.

The Planning Phase

I began with a comprehensive review phase using multiple tools. I fed the entire codebase to Gemini 2.5 Pro, taking advantage of its large context window to analyze the architecture and identify improvement opportunities. I also used ChatGPT Deep Research to get a different perspective on what could be enhanced or consolidated. Finally, I conducted my own manual review, noting patterns that needed attention and areas where I knew the history behind certain decisions.

The three reviews showed significant overlap — most issues were obvious enough that all three identified them independently. Each review also contributed unique insights. The AI assistants caught subtle inconsistencies I had overlooked, while my manual review provided context about why certain decisions had been made. I grouped these findings into coherent work packages, each representing a focused refactoring effort that could be completed independently.
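The consolidation step becomes more mechanical if each finding is tagged with the review that produced it and the area of the codebase it touches. The sketch below is a hypothetical illustration, not the process actually used for Ruler: it assumes findings have already been transcribed into a `Finding` record (an invented name), groups them by area into candidate work packages, and orders the packages by how many reviews flagged that area.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical record for one review finding; labels are illustrative."""
    source: str   # which review raised it, e.g. "gemini", "deep-research", "manual"
    area: str     # the part of the codebase the finding concerns
    summary: str


def group_into_packages(findings: list[Finding]) -> dict[str, dict]:
    """Group findings by area; each package keeps its summaries and contributing sources."""
    packages: dict[str, dict] = defaultdict(lambda: {"findings": [], "sources": set()})
    for finding in findings:
        package = packages[finding.area]
        package["findings"].append(finding.summary)
        package["sources"].add(finding.source)
    return dict(packages)


def by_consensus(packages: dict[str, dict]) -> list[str]:
    """Order areas so those flagged by the most independent reviews come first."""
    return sorted(packages, key=lambda area: len(packages[area]["sources"]), reverse=True)
```

Areas raised by every review surface first, while single-source findings stay visible rather than being dropped.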

Creating GitHub Issues

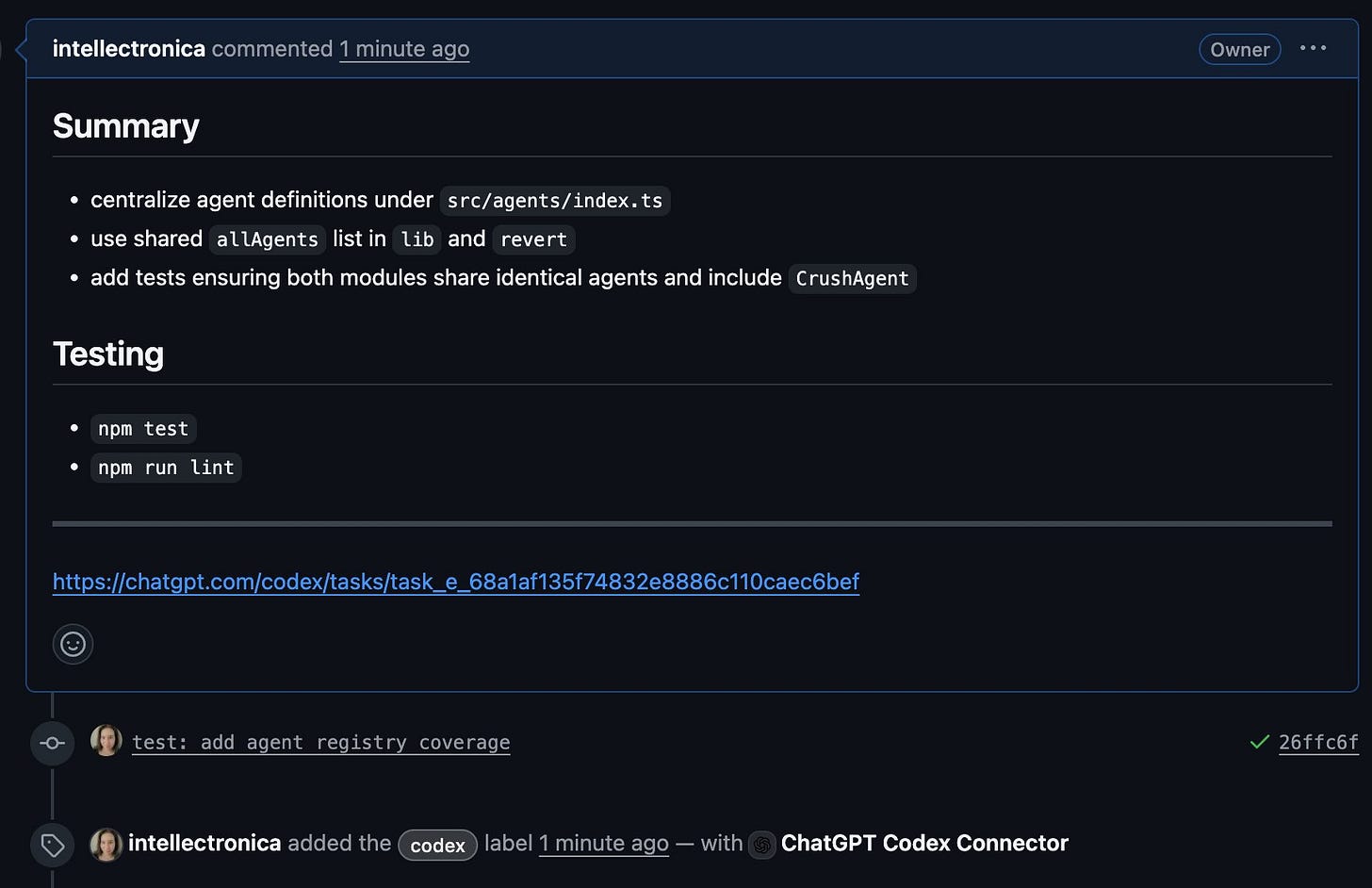

For each work package, I used a consistent approach to create detailed GitHub issues. I developed a prompt template that took my unstructured notes from the review phase, combined them with the full codebase context, and produced comprehensive issue definitions. Gemini 2.5 Pro worked well for this task, as its ability to hold the entire codebase in context meant it could create specifications that accounted for all dependencies and interactions.

Each issue included not just what needed to be done, but detailed implementation guidance, specific files to modify, patterns to follow, and explicit instructions about the development process — from creating branches to running tests to formatting pull requests.
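The issue-generation step can be sketched as a prompt template filled with the review notes and a concatenated copy of the repository. This is a minimal sketch under stated assumptions: the template wording, the file-suffix filter, and the helper names `collect_codebase` and `build_issue_prompt` are hypothetical, and sending the resulting prompt to a model is left out.

```python
from pathlib import Path

# Hypothetical template; adapt the section list to your own issue format.
ISSUE_PROMPT_TEMPLATE = """\
You are preparing a GitHub issue for an autonomous coding agent.

Review notes (unstructured):
{notes}

Full codebase:
{codebase}

Write a complete issue with these sections: Requirements, Acceptance criteria,
Implementation plan, Testing requirements, Development process,
Style guidelines, Tool specifications.
"""


def collect_codebase(root: Path, suffixes: tuple[str, ...] = (".ts", ".md", ".json")) -> str:
    """Concatenate matching files, each prefixed with its relative path.

    The default suffixes are an assumption; adjust them for your project.
    """
    parts = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in suffixes and "node_modules" not in path.parts:
            rel = path.relative_to(root).as_posix()
            parts.append(f"=== {rel} ===\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(parts)


def build_issue_prompt(notes: str, root: Path) -> str:
    """Fill the template with review notes and the concatenated codebase."""
    return ISSUE_PROMPT_TEMPLATE.format(notes=notes.strip(), codebase=collect_codebase(root))
```

Prefixing each file with its path lets the planning model cite specific files to modify, which is exactly the kind of detail the issue should hand to the agent.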

The GitHub Copilot Experience

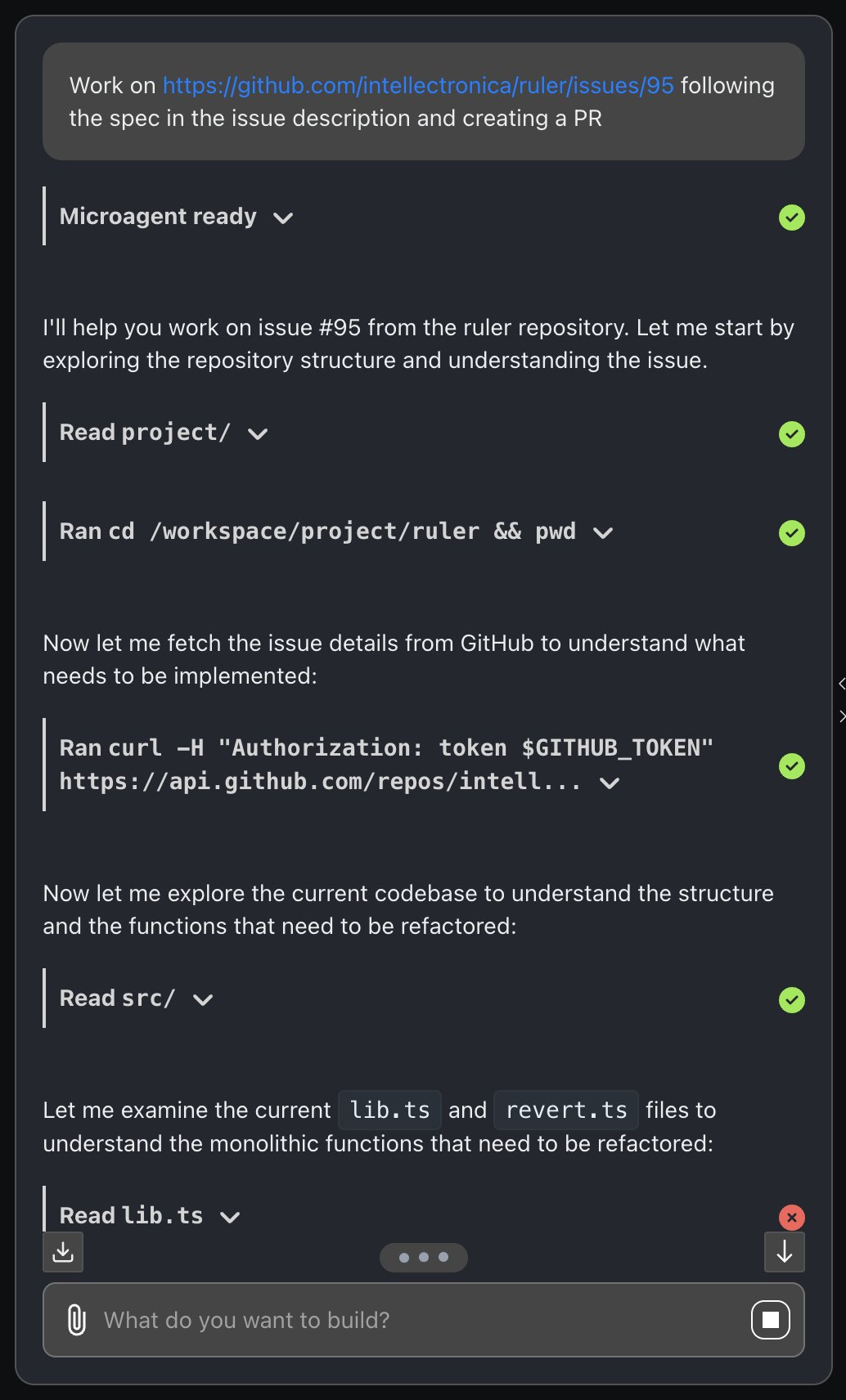

For my first test, I assigned an issue to the GitHub Copilot agent user. The response was immediate: within seconds, Copilot had created a branch and pull request, linking them back to the issue. The live session view, accessible from the pull request, showed the agent's analysis and decision-making process in real time.

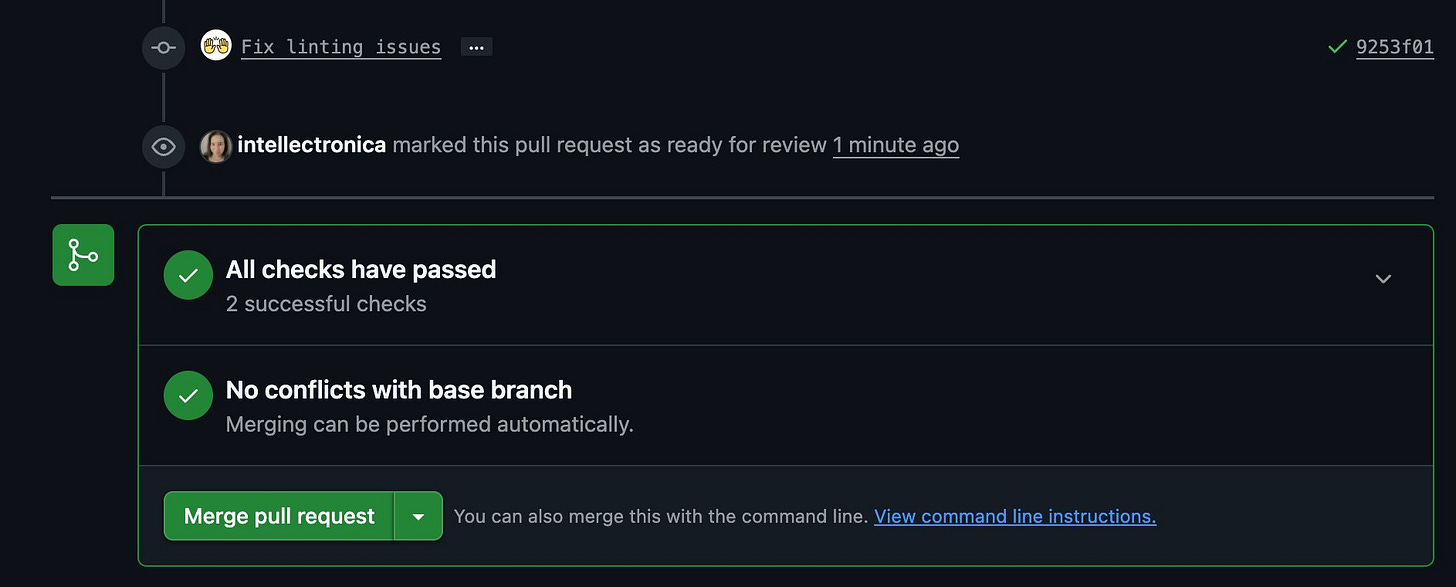

The entire process took about ten minutes. Copilot made multiple commits, each with clear messages explaining the changes. When it finished, it updated the pull request with a comprehensive description of what had been done, why decisions were made, and what to focus on during review. The code matched requirements exactly — the refactoring was clean, tests were updated, and the style was consistent with the rest of the project. I merged it immediately and moved on to the next task.

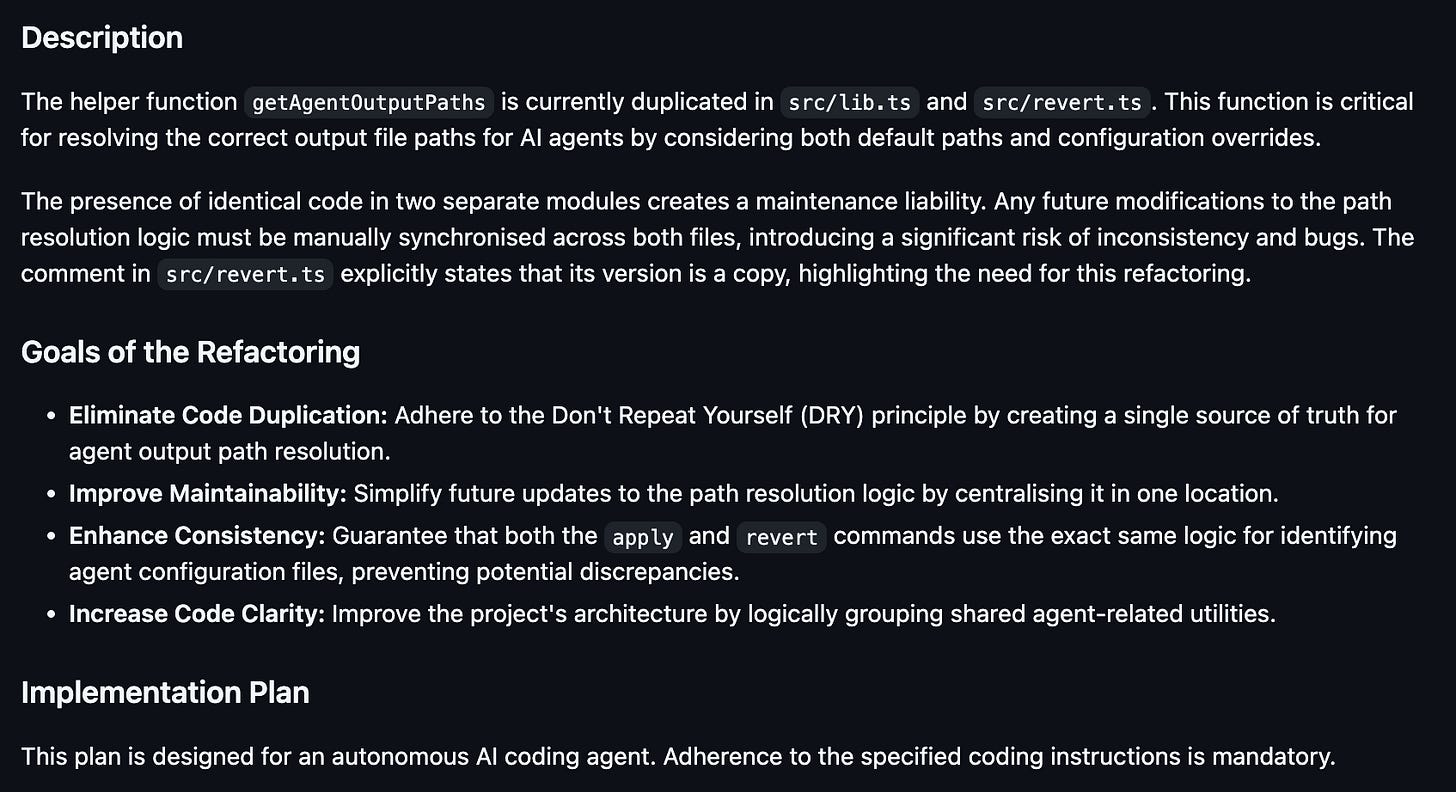

Jules: An Unsuccessful Attempt

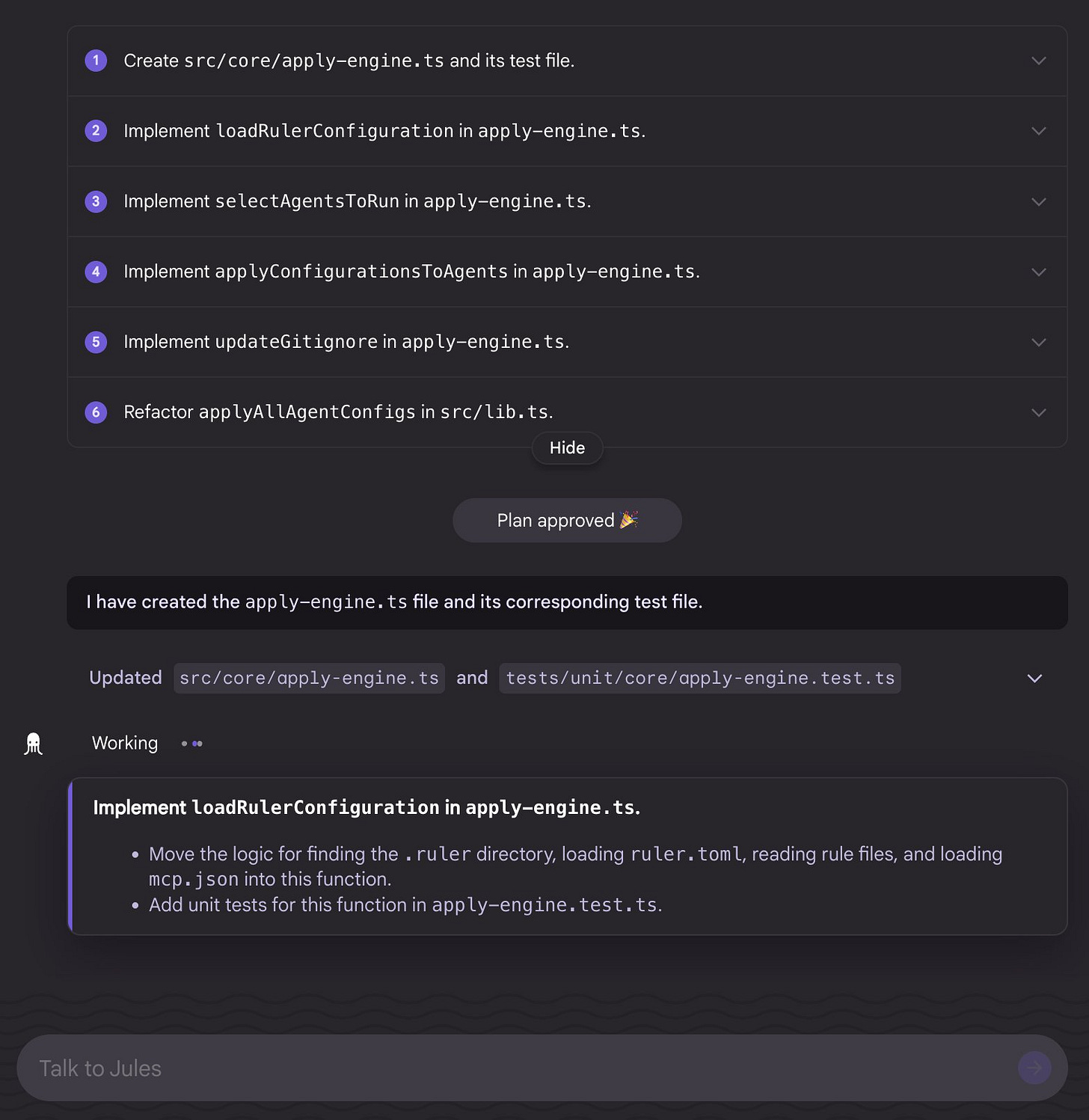

I labeled my next issue for Jules. The agent picked it up promptly, adding a "Jules is on it!" comment and providing a link to the Google Labs interface for monitoring progress. The initial phase appeared promising — Jules seemed to understand the requirements and began making changes.

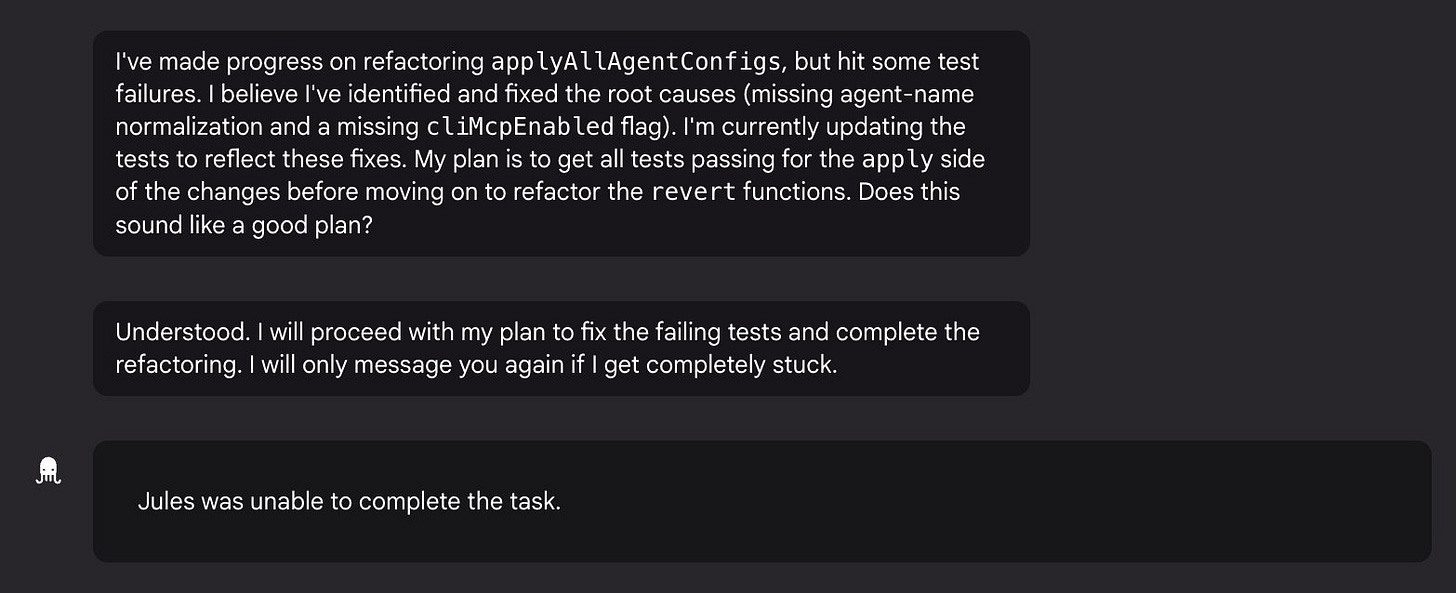

After a few minutes, Jules got stuck and eventually reported that it was unable to complete the task. This matched my experience from Jules' initial launch: the same limitations were still present. For production development work, Jules wasn't ready.

OpenHands: Capable but Requiring Intervention

I connected my repository to OpenHands through the All Hands cloud platform. I pointed the agent at a specific issue, instructing it to follow the detailed requirements and create a pull request when complete. The conversational interface displayed the agent's reasoning as it worked through the problem, and the approach appeared logical.

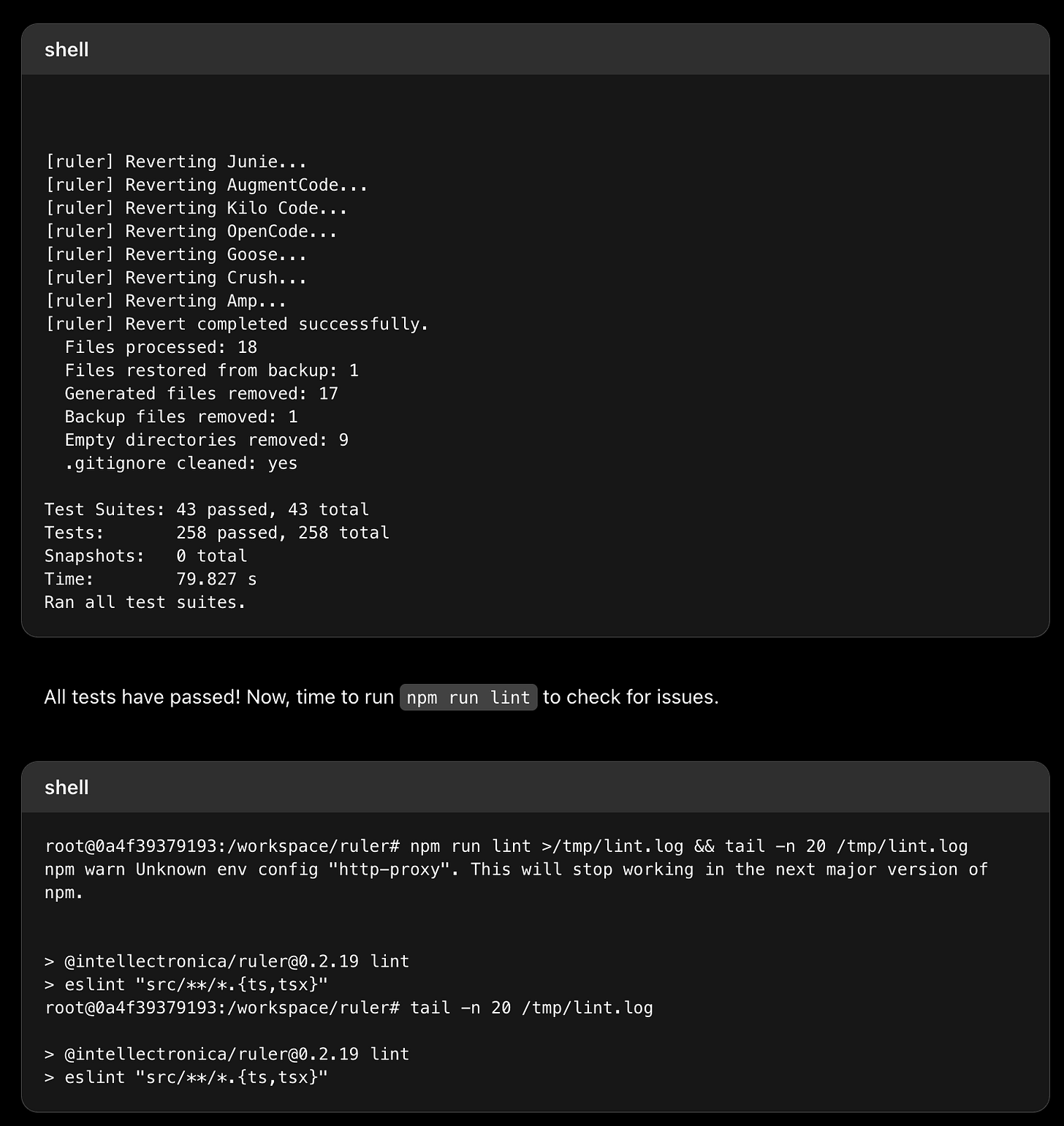

When OpenHands announced completion, the continuous integration tests were failing. This was problematic because the issue specifications explicitly required checking that all tests passed before submitting the pull request. The agent had understood the technical requirements but had skipped this verification step.

Using the chat interface, I reminded the agent about the failing tests. This prompted it to investigate the CI runs, identify the problems, and make corrections. The agent ran the tests locally this time, ensuring everything passed before updating the pull request. The final result was correct — a complex refactoring completed successfully — but the need for intervention indicated that OpenHands might require more explicit instructions or custom configuration to fully follow development workflows.

Codex: Functional but Cumbersome

OpenAI's Codex required more setup than other agents. Each project needs its own environment configuration, specifying not just the repository but also the machine image, network access, and available tools. While this is a one-time setup per project, it added friction to getting started.

Once configured, I instructed Codex through its chat interface to review and fix a specific issue. The agent's work was visible through terminal output — not as pleasant to look at as other agents' interfaces but sufficient to follow progress. Codex worked through the problem and announced completion after several minutes.

The workflow had several issues. Despite explicit instructions to create a pull request, Codex had only prepared the changes and required manual PR creation through the web interface. When created, the pull request lacked the connection to the original issue and included only minimal description text. The code changes were correct — Codex had implemented the requirements properly — but the integration with standard development workflows was less convenient.
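The missing issue link is cheap to repair by hand. GitHub links a pull request to an issue when the PR description contains a closing keyword followed by the issue reference, such as `Closes #12`, and closes the issue when the PR is merged into the default branch. A minimal sketch, assuming you post-process the description yourself before creating the PR; `links_issue` and `ensure_issue_link` are hypothetical helpers, not part of any GitHub or Codex API.

```python
import re

# GitHub's closing keywords; any of them followed by "#<number>" links the issue.
CLOSING_KEYWORDS = ("close", "closes", "closed", "fix", "fixes", "fixed",
                    "resolve", "resolves", "resolved")


def links_issue(body: str, issue_number: int) -> bool:
    """Check whether a PR body already references the issue with a closing keyword."""
    pattern = rf"\b(?:{'|'.join(CLOSING_KEYWORDS)})\b\s+#{issue_number}\b"
    return re.search(pattern, body, flags=re.IGNORECASE) is not None


def ensure_issue_link(body: str, issue_number: int) -> str:
    """Append a closing reference if the description lacks one."""
    if links_issue(body, issue_number):
        return body
    return f"{body.rstrip()}\n\nCloses #{issue_number}".lstrip()
```

The trailing `\b` after the issue number prevents `Closes #123` from being mistaken for a link to issue 12.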

An Emergency Fix Through VS Code

After merging the Codex changes, I noticed continuous integration tests failing on the main branch. Investigation revealed a race condition in the test setup — an issue requiring immediate attention to maintain a stable main branch.

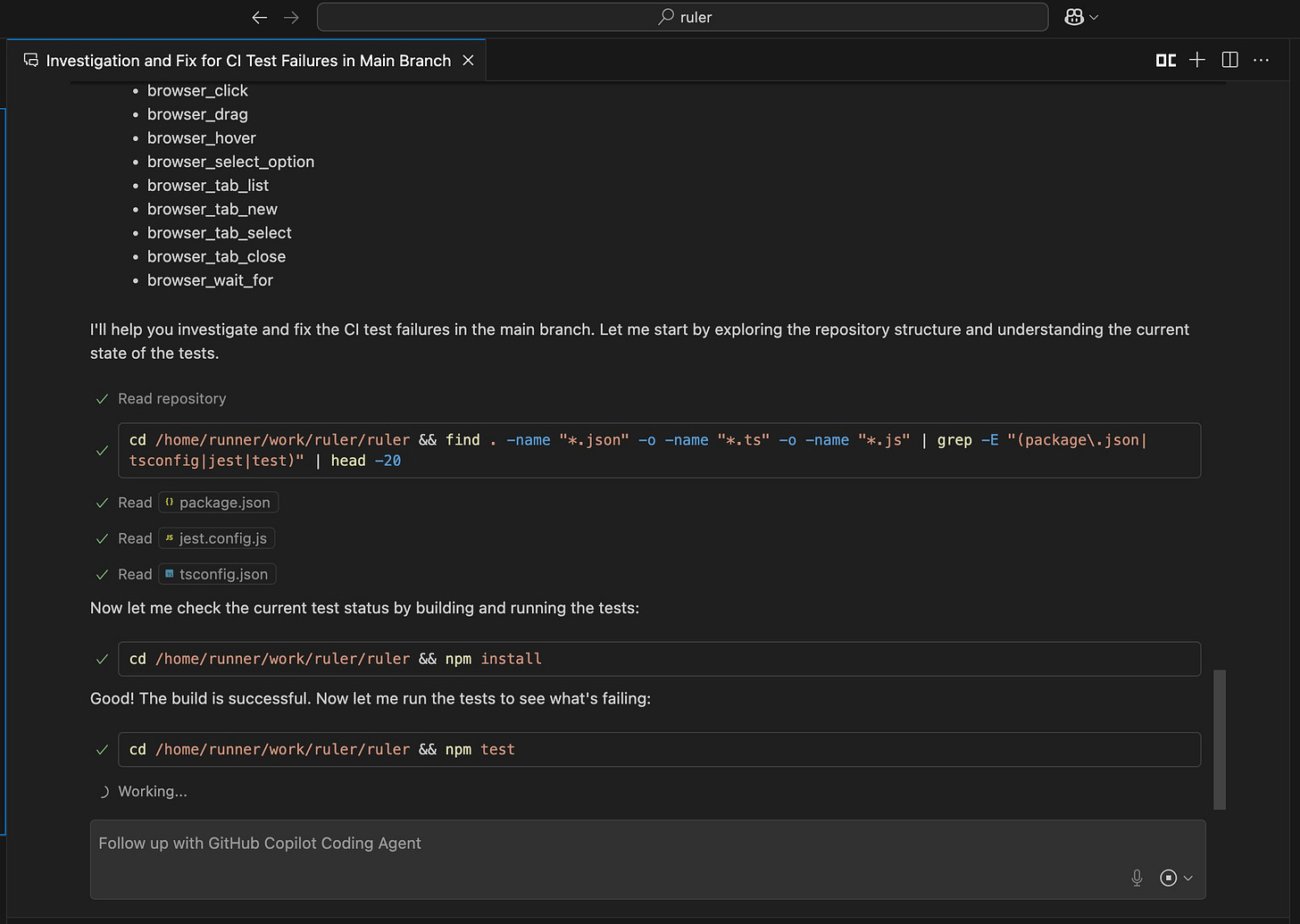

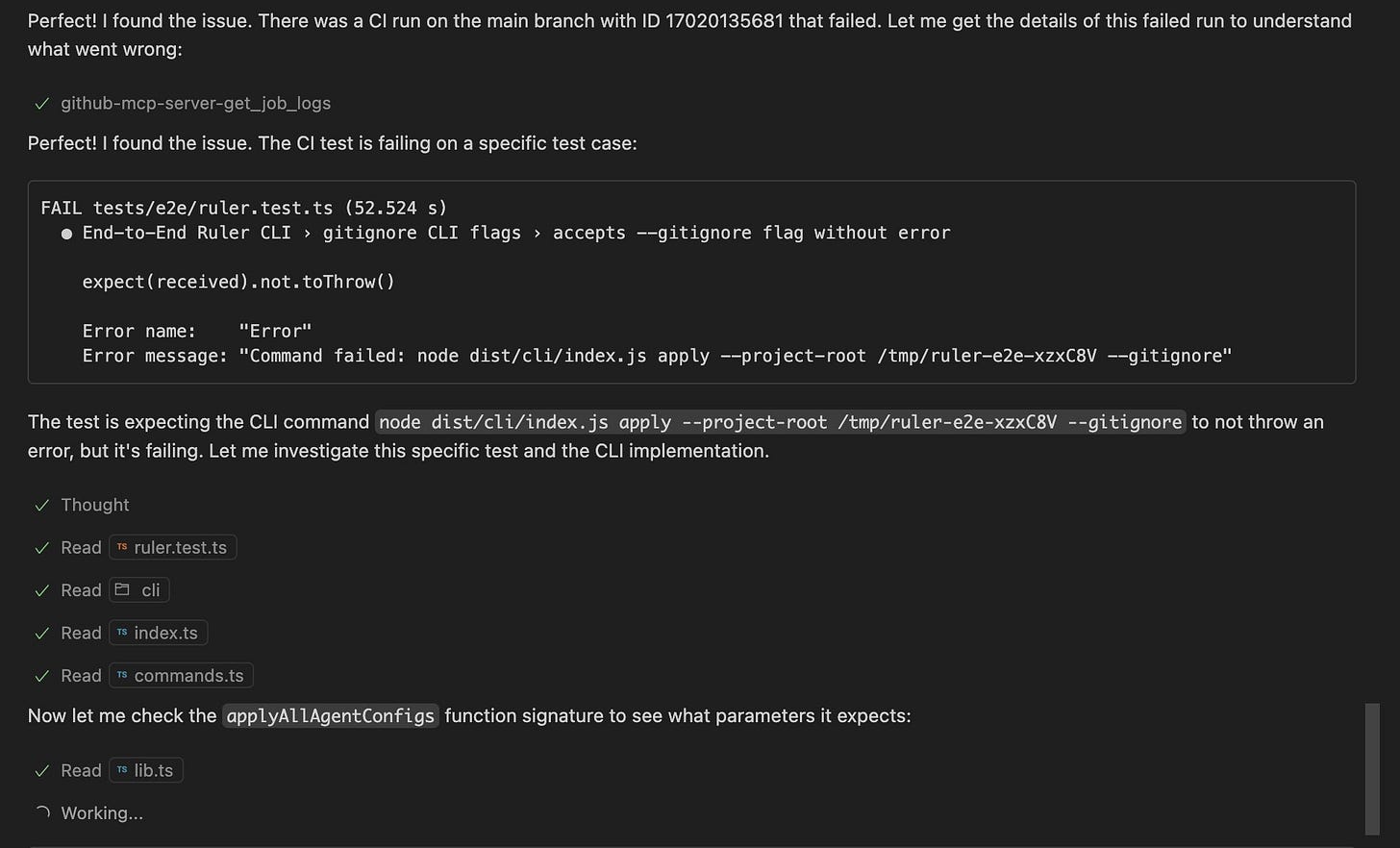

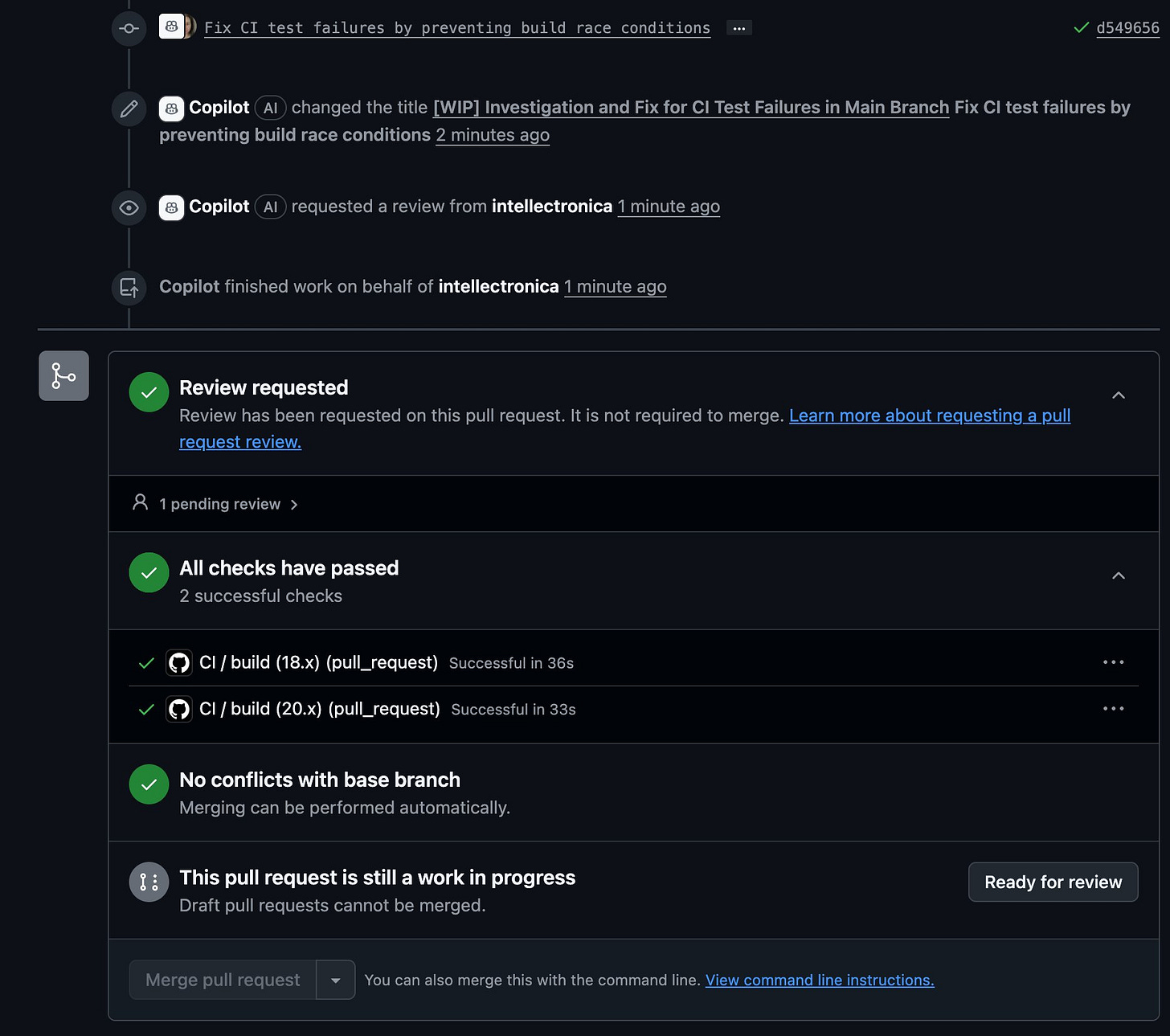

This provided an opportunity to test GitHub Copilot's VS Code integration. From my IDE, I described the problem and delegated the fix to the cloud-hosted agent. A new window opened within VS Code, showing the agent's work in real time. It identified the race condition, implemented a fix using proper synchronization, and created a pull request. The entire process took minutes, and the integration between the local development environment and the cloud agent worked seamlessly.

Reflections and Recommendations

After this session with asynchronous agents, several patterns emerged. These tools are not just incremental improvements over interactive AI assistance — they represent a fundamental shift in how we can approach software development. The ability to parallelize development work, to delegate complete features while focusing on architecture, and to enable non-developers to contribute code changes could reshape how software teams operate.

GitHub Copilot Agent performed best, both in reliability and integration. Being native to GitHub provides natural advantages, but the execution is also superior. The live session view, automatic issue linking, comprehensive PR descriptions, and VS Code integration create a workflow that feels like a natural extension of existing development practices.

OpenHands demonstrated strong capabilities, particularly for complex refactoring tasks. With better configuration and more explicit instructions about development workflows, it could likely match Copilot's reliability. The open-source nature also makes it attractive, since the entire system can be self-hosted and configured to each team's or project's needs.

Codex and Jules represented different levels of readiness. Codex works but needs polish: it requires too many manual steps and integrates poorly with standard workflows. Jules isn't ready for production use; its inability to complete moderately complex tasks makes it unsuitable for serious development work at this time. I'm sure both OpenAI and Google are working to improve their cloud-hosted asynchronous agents, and I look forward to testing them again later.

Looking Forward

Asynchronous coding agents are still underrated. Interactive tools get the attention, but true delegation better fits professional workflows, scales through parallel tasks, and opens participation to non-developers.

Succeeding with agents takes a mindset shift: write complete specs, make requirements explicit, and decompose work. That upfront effort reduces iteration, raises success rates, and enables real parallelism.

As the ecosystem matures, new patterns and roles will emerge: specification engineers, AI orchestrators, and pipelines coordinating fleets of agents. This isn't about replacing developers; it's about pairing humans with agents to deliver more, faster.

The journey has just begun. Start small, iterate on your specifications, and build delegation skills. Those who learn now will have an edge as asynchronous, parallel, AI-assisted development becomes standard.

Coming Up

For the next steps of my refactoring saga, I am relying on CLI agents automated via GitHub Actions. Watch this space...