The 3 Architectures for Reusable LLM Tools

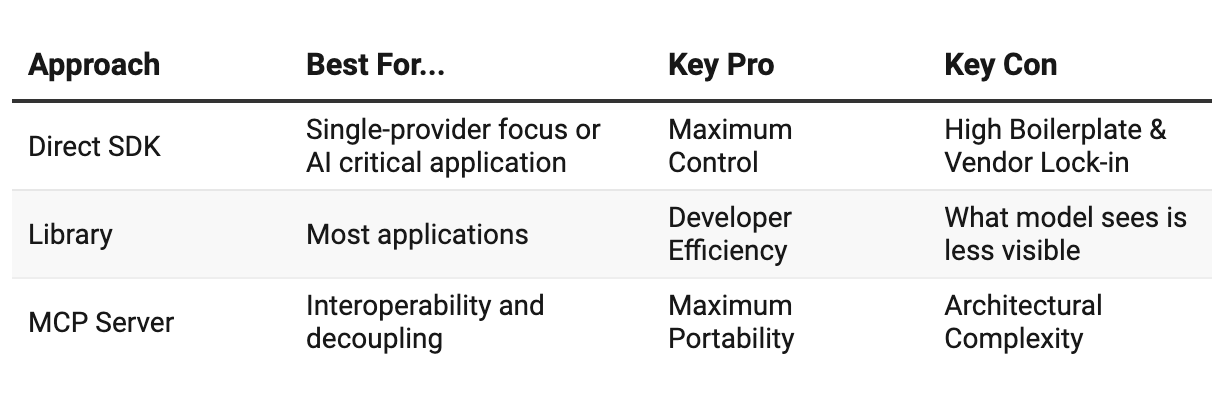

How you define tools is an architectural choice with trade-offs between control, development speed, and portability.

To make an LLM truly useful, you need to give it tools: ways to interact with APIs, databases, and the real world.

The three main paths:

Direct SDKs: The low-level, high-control route.

Higher-Level Libraries: The abstracted, developer-friendly route.

MCP Servers: The decoupled, maximum-portability route.

1. Direct SDK: Maximum Control

Import a model provider's SDK (OpenAI, Gemini, etc.) and write the tool definitions in that provider's native syntax.
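As a rough illustration of what "native syntax" means, here is a minimal sketch of one tool written in the dictionary shape that OpenAI's Chat Completions API accepts in its `tools` parameter. The `get_weather` tool, its stubbed return value, and the `run_tool_call` helper are hypothetical names chosen for this example, and the model name in the comment is only a placeholder.

```python
import json

# Tool definition in the shape OpenAI's Chat Completions API expects.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Paris"},
            },
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> str:
    # Hypothetical stub; a real tool would call a weather API here.
    return f"It is 21 C in {city}."


# Maps the tool name the model asks for to the Python function that runs it.
TOOL_FUNCTIONS = {"get_weather": get_weather}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute one tool call; the model supplies arguments as a JSON string."""
    args = json.loads(arguments_json)
    return TOOL_FUNCTIONS[name](**args)


# With the SDK installed, the definition would be passed roughly like this:
#   from openai import OpenAI
#   client = OpenAI()
#   response = client.chat.completions.create(
#       model="gpt-4o-mini", messages=messages, tools=[get_weather_tool])
```

Everything the model sees about the tool is in that dictionary, which is where both the control and the boilerplate come from.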

Pros:

Control: You can tweak every parameter and know exactly what the model receives.

Features: You get features instantly without waiting for library support.

No Extra Dependencies: You're only relying on the provider's official SDK.

Cons:

High Boilerplate: Tool definitions require verbose code and deeply nested dictionary structures.

Vendor Lock-in: Each SDK has a different syntax. Supporting multiple models means writing and maintaining multiple versions of your tool definitions.

Tightly Coupled: Your tools are bound to your application code. Using them elsewhere means copy-pasting or refactoring into a separate library.
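To make the lock-in point concrete, the sketch below renders one tool in two provider shapes: the `tools` entry used by OpenAI's Chat Completions API and the one used by Anthropic's Messages API. The neutral `TOOL` dictionary and the two converter functions are hypothetical helpers for illustration. Only the request side is shown; parsing each provider's tool-call response differs as well.

```python
# Provider-neutral description of one tool (hypothetical structure).
TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_openai(tool: dict) -> dict:
    """Shape used by OpenAI's Chat Completions `tools` parameter."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["schema"],
        },
    }


def to_anthropic(tool: dict) -> dict:
    """Shape used by Anthropic's Messages API `tools` parameter."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["schema"],
    }
```

The JSON Schema for the arguments is identical in both; the wrapper around it is what you end up maintaining per provider.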

When to use it:

When you need the latest, most granular features and maximum control. Most commercial applications do not require this.

When you will be doing extensive work and want to build your own abstractions that you can control and understand.

When seeing exactly what is sent to the model is critical because your product is predominantly an AI product.

2. Higher-Level Libraries: Maximum Efficiency

Libraries such as Simon Willison's `llm`, among others, provide a simplified interface for defining tools that work across different models.
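The core idea behind these libraries can be sketched with the standard library alone: derive the tool schema from a plain function's signature and docstring. This is not the API of `llm` or any other specific library; `tool_schema`, `JSON_TYPES`, and `multiply` are hypothetical names, and the type mapping is a simplifying assumption.

```python
import inspect
from typing import Callable, get_type_hints

# Assumed mapping from Python annotations to JSON Schema types.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_schema(fn: Callable) -> dict:
    """Derive a provider-neutral tool description from a plain function."""
    hints = get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def multiply(a: int, b: int = 1) -> int:
    """Multiply two integers."""
    return a * b


schema = tool_schema(multiply)
```

A library built this way can then translate the neutral schema into each provider's format, so the tool author only ever writes the function.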

Pros:

Simple: Tool definitions are concise, often just plain Python functions.

Provider Agnostic: One tool definition for OpenAI, Google, Claude, etc.

Less Boilerplate: The library generates the schema and dispatch code you would otherwise write by hand.

Cons:

Abstraction Lag: You might have to wait for the library to support a new provider feature.

Less Control: The abstraction hides some low-level details, which can be critical.

Added Dependency: Dependencies always have a cost.

When to use it: For most application development. You give up a little control in exchange for development speed and simpler code. Choosing a simple, lightweight library (like `llm`) mitigates much of the risk of low-level details being hidden.

3. The MCP Server Approach: Maximum Portability

With MCP (Model Context Protocol), you build a small server that exposes your tools over a standardized protocol. Your LLM application (or any other MCP-capable application) can then call this server to use its tools.
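MCP messages are JSON-RPC 2.0, and the two tool-related methods are `tools/list` and `tools/call`. The sketch below handles just those two methods in-process to show the message shapes. It is not a complete MCP server: the initialization handshake and the transport (stdio or HTTP) are omitted, and in practice you would likely rely on an official MCP SDK for those. The `add` tool and the `handle` function are hypothetical names for this example.

```python
import json


def add(a: int, b: int) -> int:
    return a + b


# Registry of exposed tools: implementation plus the metadata clients see.
TOOLS = {
    "add": {
        "fn": add,
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
    }
}


def handle(request_json: str) -> str:
    """Handle one JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(request_json)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"],
             "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        value = tool["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}],
                  "isError": False}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})
```

Because the client only sees names, descriptions, and schemas over the wire, the tool implementation behind `handle` could be swapped or rewritten in another language without the client noticing.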

Pros:

Decoupling: Your tools live separately from your application, so you can update, test, and deploy them without touching your main app.

Plug-and-Play: Any agent that speaks MCP can use your tools. This is useful for two reasons:

Clients or beta testers can use your tools in their preferred environment.

You can try your tools in other interfaces (Cursor, Claude Code, etc.) to experiment.

Language Agnostic: Your main application might be in Python, but your data processing tool could be written in Go or Rust.

Cons:

Architectural Complexity: A separate service means more to deploy, monitor, and secure.

Network Latency: Calls that cross a network boundary usually add some latency.

Protocol Adherence: You must conform to a specific protocol standard.

When to use it: When tools need to be shared across teams and applications.

Which Path Should You Choose?

The "right" choice depends entirely on your project's needs.

From Theory to Production

Choosing the right tool integration strategy is more than a preference: it is an architecture decision. If you're ready to move beyond basic tool-calling and learn how to build reliable, customized AI workflows that scale, check out our 3-week, cohort-based course, Elite AI Assisted Coding. We dive deep into designing and shipping these exact systems with instruction from AI engineering leaders from companies like Google and Microsoft.