Mastering AI Context

A Deep Dive into Repo Prompt with its Founder, Eric

I was thrilled to host Eric, the founder of Repo Prompt, for a recent talk in our Elite AI Assisted Coding Course. Our community has been deep in conversation about an often-overlooked aspect of working with AI agents: context. How do you give an agent enough information to have a real chance at success without overwhelming it?

Too little context, and the agent hallucinates or produces irrelevant code. Too much, and it gets lost, distracted, or burns through its usable context window.

This is exactly the problem Eric set out to solve with Repo Prompt, a tool designed for “building optimal prompts to solve problems.” [00:38] His insights were fantastic, and I want to share the key takeaways from his session.

The Origin: Solving a Real-World Problem

Like many great tools, Repo Prompt was born from a personal need. Eric was working on a game for the Apple Vision Pro and kept hitting the same wall: getting the right context into the AI model. [01:35] Early agents were clumsy, and tools like Cursor had strict context limits. He needed a better way to build rich, informative prompts that made the most of the available context window.

The Core Workflow: From Vague Idea to Actionable Plan

Eric’s process centers on a powerful idea: separate the “thinking” from the “doing.” You use a smart model to plan and a fast agent to implement.

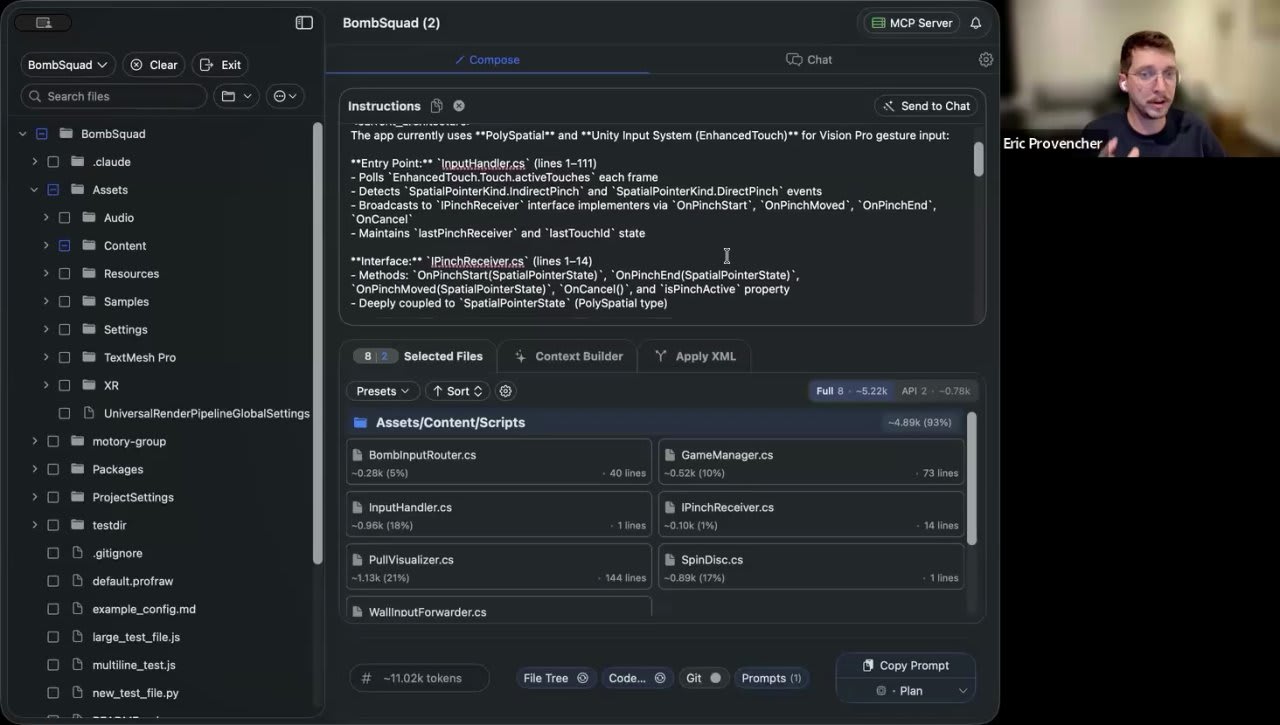

It starts with the Context Builder. [04:31] You begin with a simple, high-level task. In his demo, Eric wanted to refactor his game’s input system to handle touchscreen input for the Vision Pro.

He types a simple instruction and selects a few potentially relevant files.

Then, he hits “Run Discovery.” [05:56] This is where the magic starts. An AI agent, powered by a model of your choice (like Claude Haiku for speed or a more powerful model for complexity), scans your codebase. It uses a few clever techniques to build a comprehensive understanding of the task.

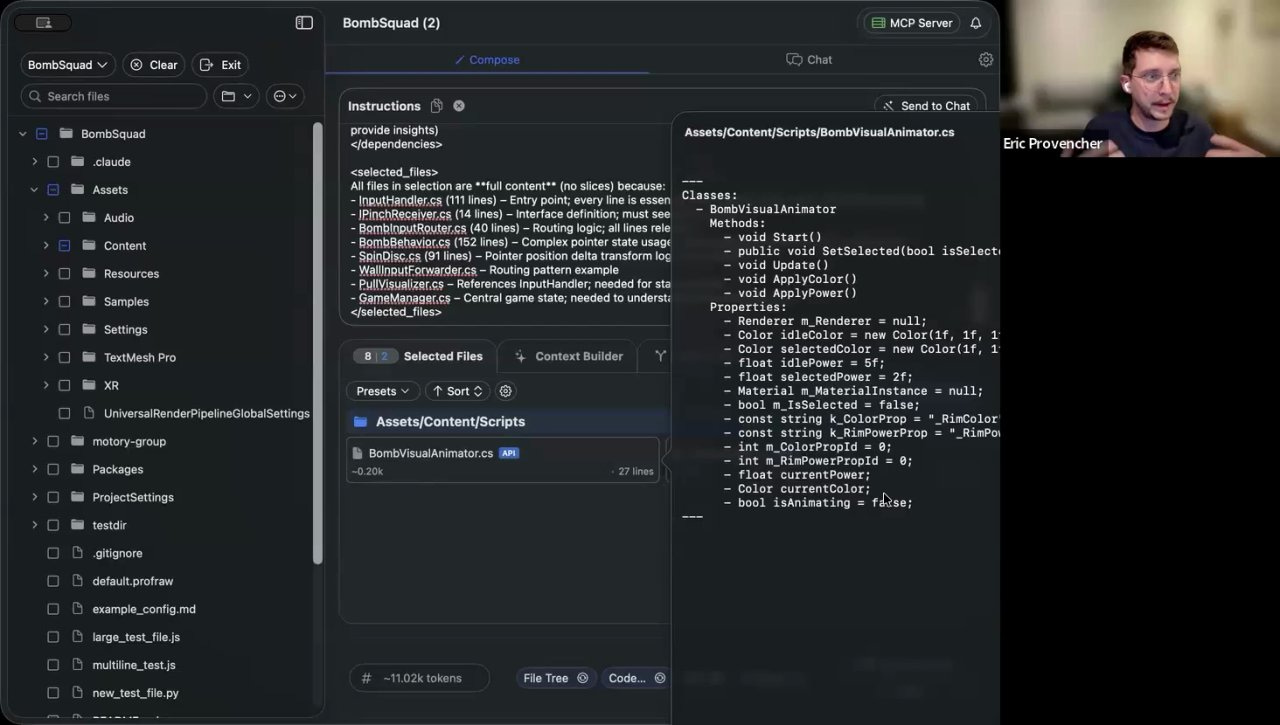

Code Maps: The AI’s Table of Contents

One of Repo Prompt’s key features is Code Maps. [06:17] Eric describes these as being like C++ header files. Instead of feeding the full source code of a file into the prompt, a Code Map provides a compressed summary, essentially just the class and function signatures. [06:58]

This gives the agent a bird’s-eye view of the codebase, allowing it to understand relationships and dependencies without wasting precious tokens on implementation details it doesn’t need yet. It’s an incredibly efficient way to provide broad context.
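To make the header-file analogy concrete, here is a minimal sketch of the idea in Python, using the standard library's ast module to keep signatures and drop bodies. This is an illustration only, not Repo Prompt's implementation; the function name code_map and the sample class are made up for the example.

```python
import ast


def code_map(source: str) -> str:
    """Return only class and function signatures, dropping all bodies.

    Hypothetical helper for illustration; not Repo Prompt's actual code.
    """
    lines: list[str] = []

    def visit(node: ast.AST, indent: int = 0) -> None:
        pad = "    " * indent
        for child in ast.iter_child_nodes(node):
            if isinstance(child, ast.ClassDef):
                lines.append(f"{pad}class {child.name}:")
                visit(child, indent + 1)  # descend to list the methods
            elif isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef)):
                keyword = "async def" if isinstance(child, ast.AsyncFunctionDef) else "def"
                args = ast.unparse(child.args)
                returns = f" -> {ast.unparse(child.returns)}" if child.returns else ""
                # Function bodies are deliberately not visited: that is the saving.
                lines.append(f"{pad}{keyword} {child.name}({args}){returns}")

    visit(ast.parse(source))
    return "\n".join(lines)


SAMPLE = '''
class InputRouter:
    def handle(self, event: str) -> bool:
        return True

def main():
    pass
'''

if __name__ == "__main__":
    print(code_map(SAMPLE))
```

The output keeps the shape of the file (which classes exist, what each method accepts and returns) and costs far fewer tokens than the full source.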

The Enhanced Prompt: Your Seed for Success

After the discovery process finishes, Repo Prompt transforms the initial vague request into a detailed, structured “seed prompt.” This isn’t just a slightly better version of your original text; it’s a comprehensive brief, contextualized to your specific codebase and task.

The generated prompt includes:

A Reframed Task: A clearer, more specific goal.

Current Architecture: An overview of how the relevant parts of the code are currently set up.

Data Flow: How information moves through the system.

Key Decision Points: Crucial questions the model needs to consider, highlighting areas of ambiguity.

Dependencies & Selected Files: The full text of the most relevant files and code maps.

The resulting 11,000-token prompt is ready for the next step. The hard work of gathering and structuring the context is already done.
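One way to picture the structure of that brief is as a small data type with one field per section listed above. The sketch below is an assumption about shape only: the SeedPrompt class, its field names, and the heading format are invented for illustration and do not reflect Repo Prompt's real output format.

```python
from dataclasses import dataclass, field


@dataclass
class SeedPrompt:
    """Hypothetical container mirroring the sections described in the text."""

    reframed_task: str
    current_architecture: str
    data_flow: str
    key_decision_points: list[str] = field(default_factory=list)
    # Maps a file path to either its full text or its code map.
    selected_files: dict[str, str] = field(default_factory=dict)

    def render(self) -> str:
        """Join the sections into one prompt string, in a fixed order."""
        decisions = "\n".join(f"- {q}" for q in self.key_decision_points)
        files = "\n\n".join(
            f"### {path}\n{text}" for path, text in self.selected_files.items()
        )
        sections = [
            ("Reframed Task", self.reframed_task),
            ("Current Architecture", self.current_architecture),
            ("Data Flow", self.data_flow),
            ("Key Decision Points", decisions),
            ("Dependencies & Selected Files", files),
        ]
        return "\n\n".join(f"## {title}\n{body}" for title, body in sections)
```

Keeping the decision points as an explicit list is the useful part: it gives the planning step a checklist of ambiguities to resolve.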

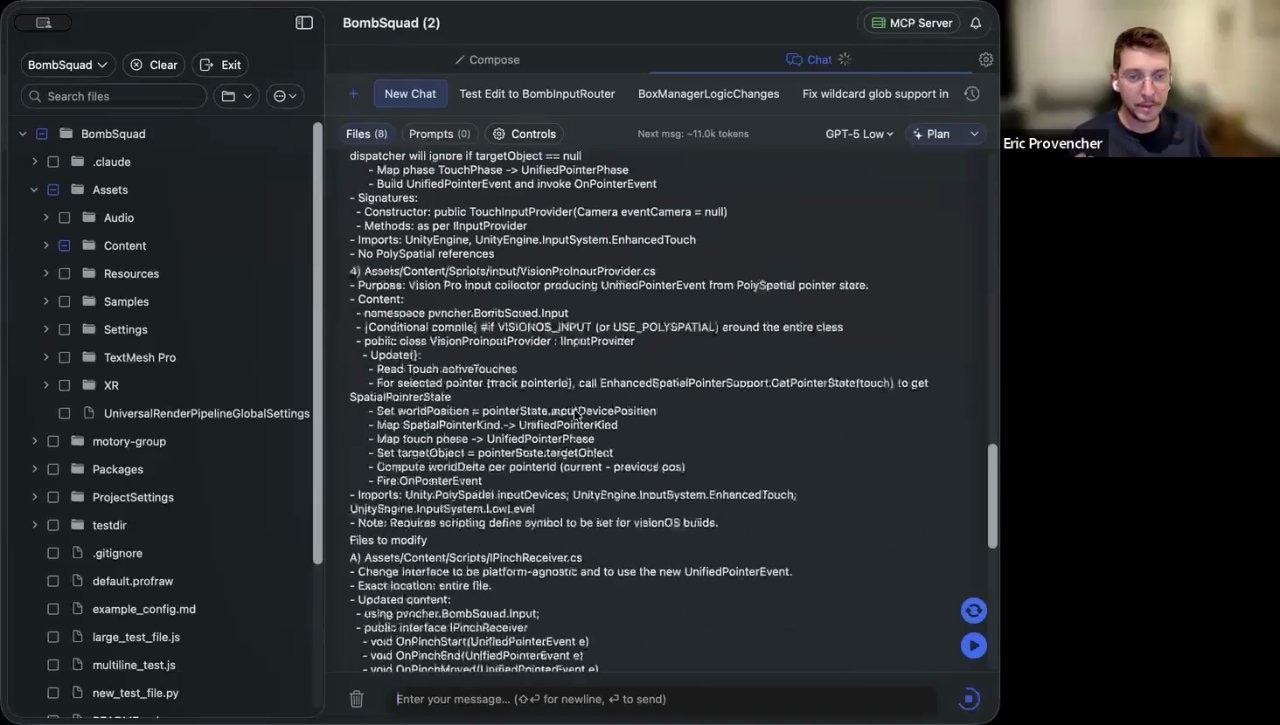

The Two-Step Process: Plan, then Implement

With this high-quality seed prompt, you can now move to the planning phase.

Plan with a Reasoning Model: Paste the entire context into a powerful reasoning model like GPT-4 or use Repo Prompt’s built-in chat. [12:08] The goal here is to resolve all the “Key Decision Points” and create a detailed, unambiguous architectural plan. The model isn’t writing code yet; it’s thinking about the best way to solve the problem.

Implement with an Agent: Once you have a clear plan, you give only the plan to an execution-focused agent like Claude Code or Codex. [12:20] The agent’s job is now simple: follow the instructions. All the complex reasoning and architectural decisions have already been made.

Eric noted this separation of concerns is crucial. “You don’t want them to both think really hard and implement well, because if they do both, you’re going to get average results on both sides.” [31:40]
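The hand-off between the two steps can be sketched in a few lines. In this hedged example, planner and implementer are hypothetical stand-ins for whatever reasoning model and coding agent you use (any function that takes a prompt string and returns text); the instruction wording is also made up. The point it illustrates is that the implementer receives only the plan, never the full seed prompt.

```python
from typing import Callable

# Hypothetical stand-in for a model or agent call: prompt in, text out.
Model = Callable[[str], str]


def plan_then_implement(
    seed_prompt: str, planner: Model, implementer: Model
) -> tuple[str, str]:
    """Run the two-step workflow and return (plan, implementation result)."""
    # Step 1: the reasoning model sees the full context and only plans.
    plan = planner(
        "Resolve every key decision point and write an unambiguous "
        "architectural plan. Do not write code.\n\n" + seed_prompt
    )
    # Step 2: the agent sees only the plan, so it can focus on execution.
    result = implementer("Follow this plan exactly:\n\n" + plan)
    return plan, result
```

Passing the models in as plain callables keeps the sketch independent of any particular provider or SDK.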

Avoiding “Context Rot”

During the Q&A, a great point came up about the “amnesia” agents have between sessions. [20:08] Every time you interact, you have to re-establish context. This can lead to context rot or context pollution, where irrelevant tool outputs and failed attempts from earlier in a session clutter the context window and degrade the agent’s performance. [32:49]

This is why static context files like agents.md can be tricky. Eric advises keeping them extremely minimal, focusing only on timeless rules about agent behavior (e.g., “use this tool”), not project-specific knowledge that can quickly become stale. [23:45]

Repo Prompt’s approach avoids this by generating fresh, dynamic, and task-specific context for each job.

Key Takeaways for Working with AI

Remove Ambiguity Above All: Your primary job is to be the “Chief Ambiguity Remover.” Use planning phases to turn vague requirements into concrete, actionable steps. [19:08]

Break Down the Work: Do the engineering work first: break the PRD into an architectural plan, then into milestones, and finally into specific coding tasks. [41:05]

Use Version Control Aggressively: When an agent goes down the wrong path, don’t try to fix it. Roll back to a known good state and restart with a better prompt, incorporating what you learned from the failure. [34:52]

Treat the Process Like a Science Experiment: Start with a hypothesis (your prompt), run the experiment (the agent), and analyze the results. Iterate and refine. [35:26]
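The last two takeaways combine naturally into a loop: run the agent, check the result, and on failure roll back and retry with a refined prompt rather than patching the bad attempt. The sketch below is one possible shape for that loop, not a workflow from the talk. The names run_agent, passes, and refine are hypothetical callables you would supply; git_rollback uses the standard git reset --hard and git clean -fd commands, which permanently discard uncommitted work.

```python
import subprocess
from typing import Callable, Optional


def git_rollback(commit: str = "HEAD") -> None:
    """Return the working tree to `commit`.

    Warning: this discards uncommitted changes and deletes untracked files.
    """
    subprocess.run(["git", "reset", "--hard", commit], check=True)
    subprocess.run(["git", "clean", "-fd"], check=True)


def run_experiments(
    prompt: str,
    run_agent: Callable[[str], str],    # hypothetical: runs the agent, returns its output
    passes: Callable[[str], bool],      # hypothetical: e.g. runs the test suite
    refine: Callable[[str, str], str],  # hypothetical: folds lessons into a new prompt
    rollback: Callable[[], None] = git_rollback,
    max_attempts: int = 3,
) -> Optional[tuple[int, str]]:
    """Return (attempt number, winning prompt), or None if every attempt fails."""
    for attempt in range(1, max_attempts + 1):
        output = run_agent(prompt)        # run the experiment
        if passes(output):                # analyze the result
            return attempt, prompt
        rollback()                        # do not fix forward; restore known good state
        prompt = refine(prompt, output)   # revise the hypothesis
    return None
```

Committing before each run gives the rollback a known good state to return to.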