AI Agents and Live Environments

IntelliMorphic @ AI Baguette

AI Agents and Live Environments: Rethinking Software Development with IntelliMorphic

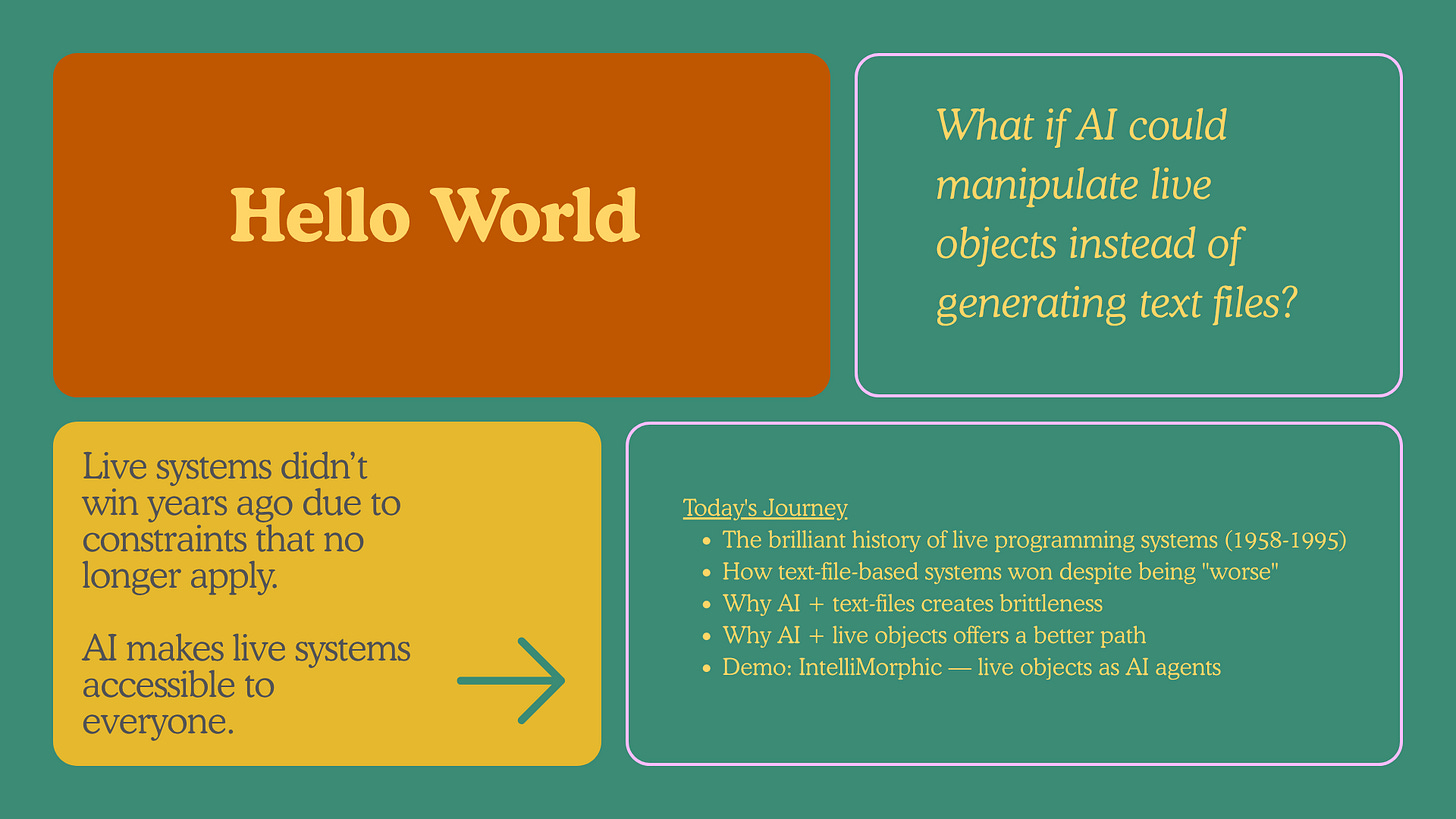

I recently presented to my friends at the AI Baguette community an experiment that combines two of my interests — AI agents and live programming environments. The central question I posed was:

What if AI, instead of writing code in text files, could manipulate objects directly?

The answer, I believe, lies in revisiting computing paradigms that were marginalised decades ago but might finally have their moment now that AI has fundamentally changed how we build software.

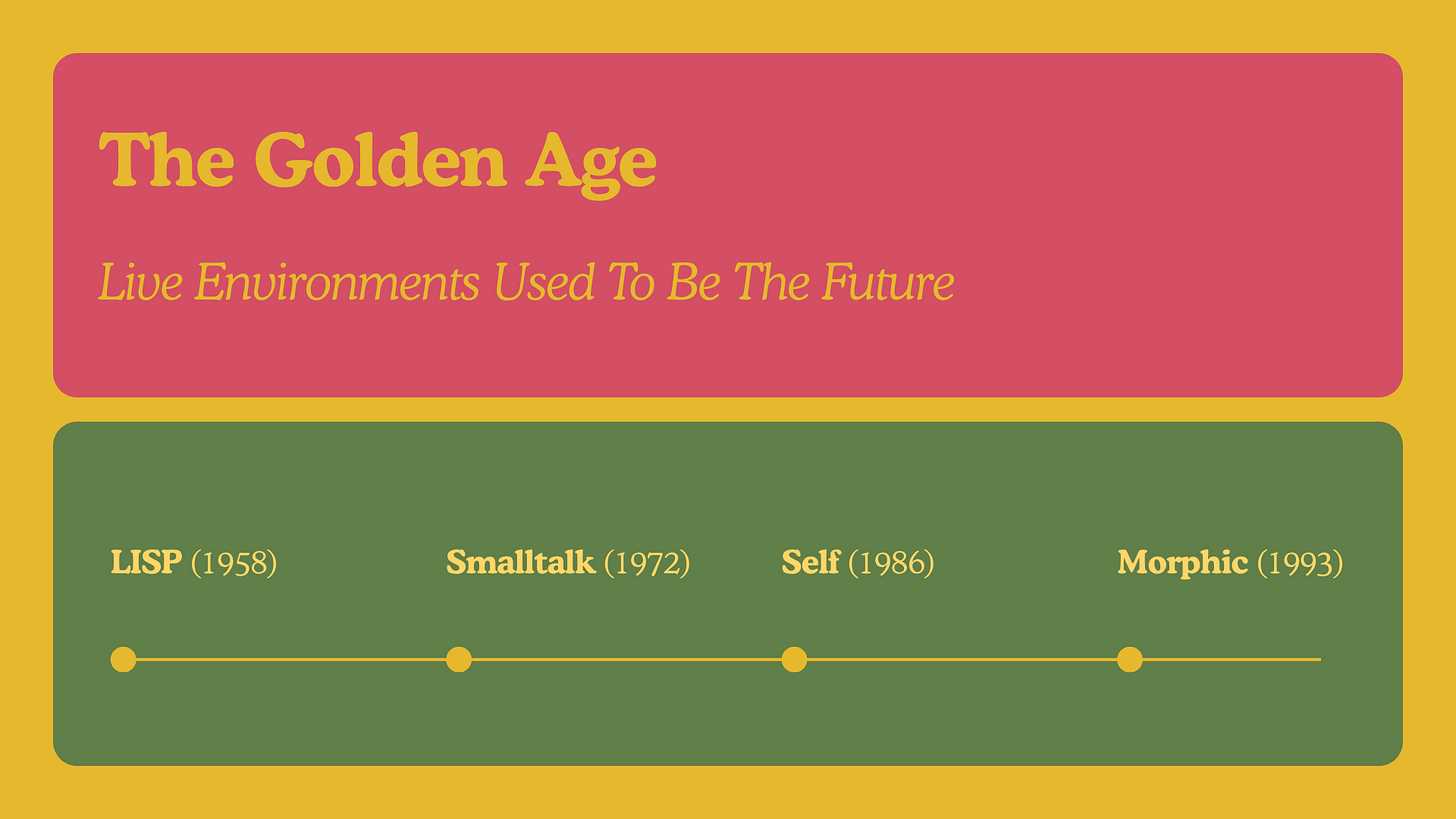

The Golden Age of Live Systems

I provided context through a brief history of live programming environments stretching back to the late 1950s. LISP pioneered the insight that code and data could be the same thing, giving it unprecedented malleability and introducing the REPL — the first live environment where you manipulated objects directly rather than writing, compiling, and running programmes as separate steps.

Smalltalk refined this with pure message-passing between objects, creating genuinely intuitive image-based development where everything in the running system could be directly modified. Self took it further with prototype-based objects and Morphic, a graphical environment where every object had direct visual representation.

Why Live Systems Didn’t Prevail — and Why That’s Changed

Despite their influence, these systems remained marginal. Richard Gabriel’s “Worse is Better” essay explained how Unix, C, and text-file workflows won. The constraints of the 1970s to 1990s made live systems impractical: memory was scarce and expensive, performance was critical when every CPU cycle counted, and distributing multi-hundred-megabyte memory images was nearly impossible.

But today? Memory is abundant and cheap. CPUs are fast to the point of irrelevance (everyone cares about GPUs now). I have a 10-gigabit internet connexion from my modest apartment. The constraints that killed live systems have evaporated.

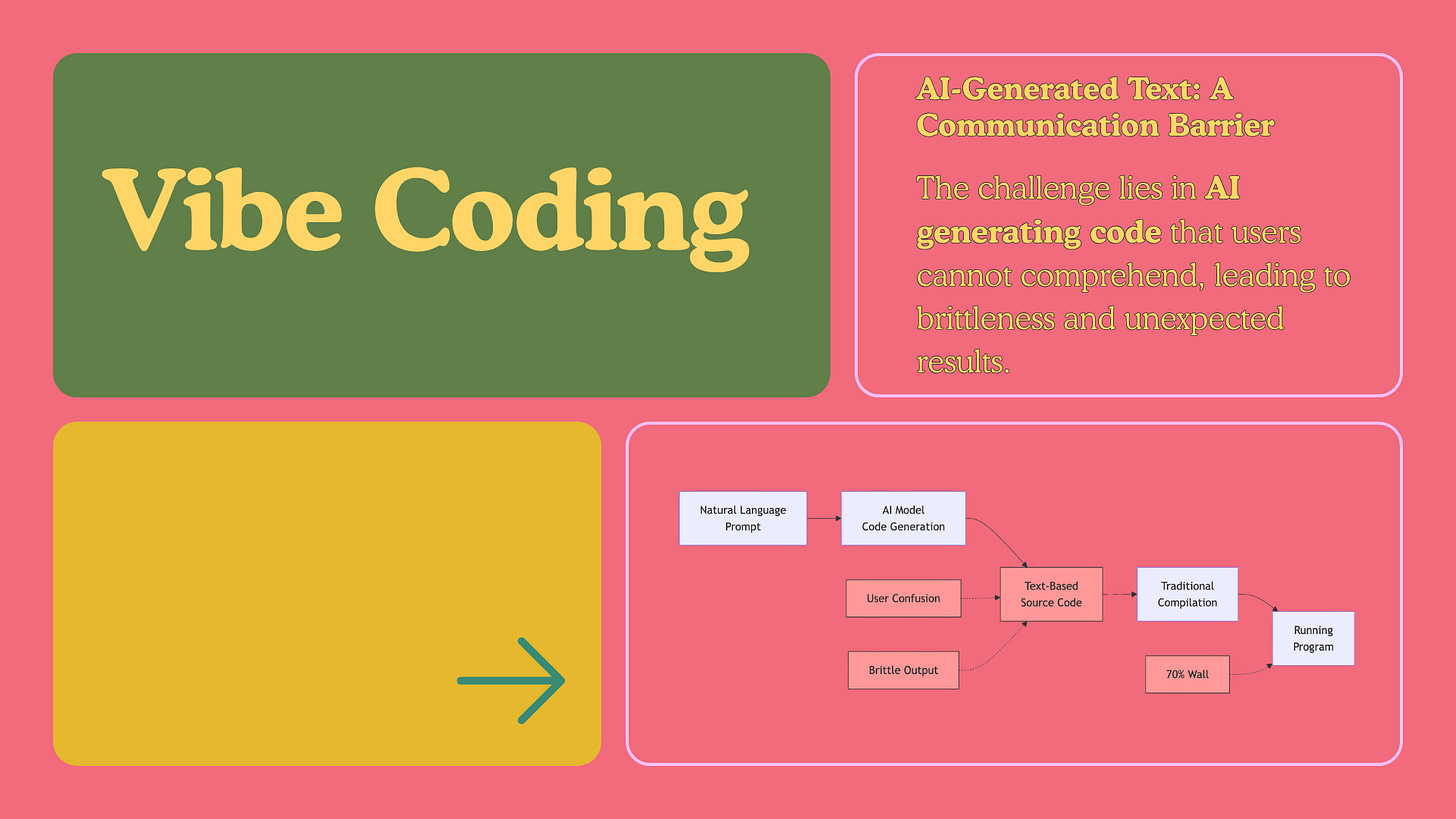

The 70% Wall in Vibe Coding

This brings us to contemporary AI-assisted development, particularly what’s called vibe coding — where people build software through natural language prompts without necessarily understanding the resulting code.

The problem here is this 70% wall, right? Which anyone who’s done anything in vibe coding can describe, where you can get some fast results, sometimes very sophisticated results, until you get to the point where things stop working.

When the AI-generated code breaks, developers — especially non-programmers — lack a mental model of the system. They can’t debug because they don’t understand what they’re working with. This is the fundamental blocker preventing wider adoption of AI for software development.

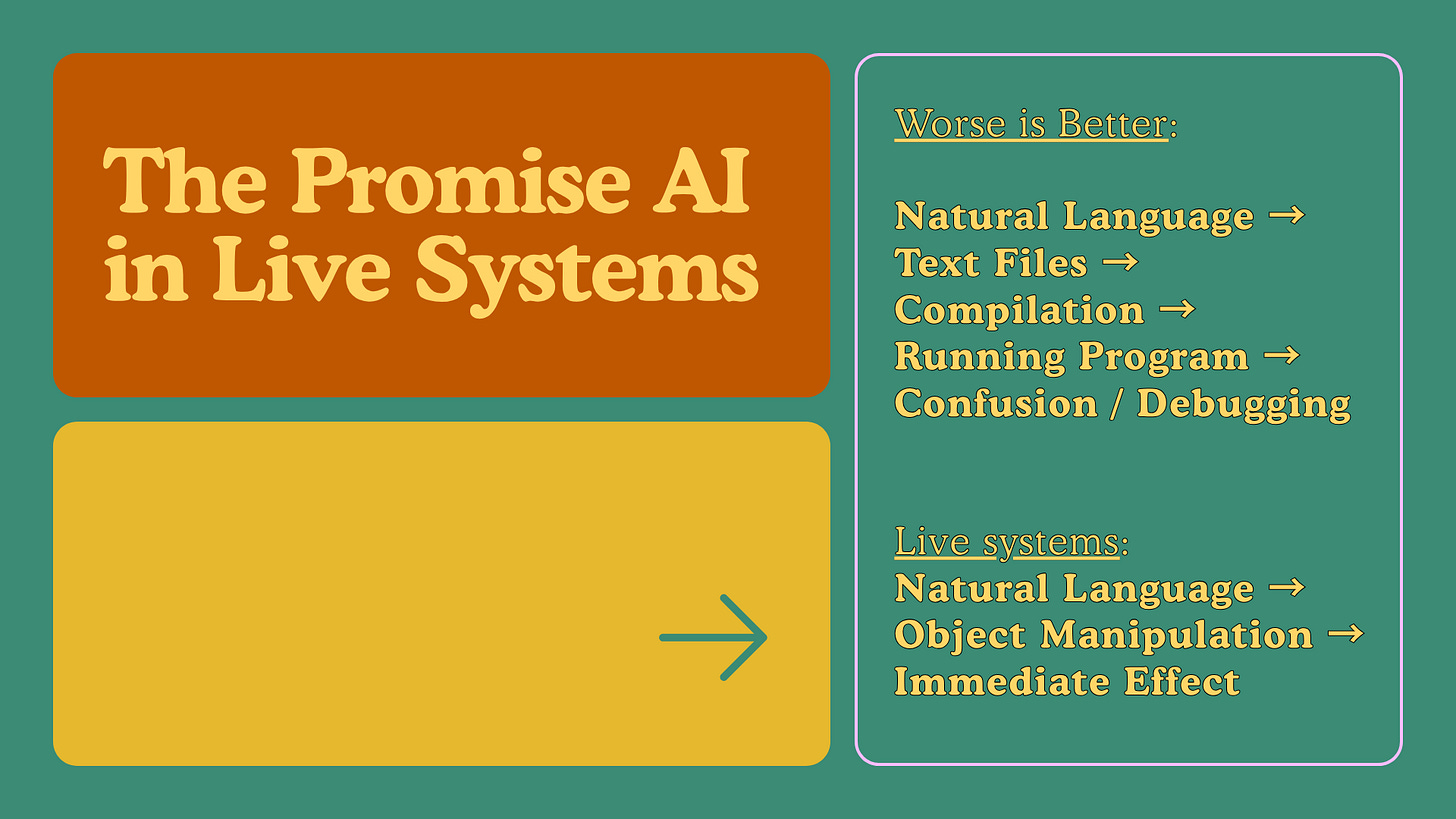

Live Environments as a Solution

This is where live systems become relevant again. With traditional workflows, we go from natural language requirements to source code to compilation to running programmes. When confusion arises, we must understand source code to debug.

But live environments offer a different path:

Come, again, with natural language, with our requirements and requests and wishes, and directly manipulate objects. So there’s no intermediate step. We just manipulate the object. We can experience it, we can see it, we can check how it works.

IntelliMorphic: Live Objects as AI Agents

My experiment, IntelliMorphic, combines morphic.js (a JavaScript port of the original Morphic system) with AI agents. But rather than objects with just visual representation and code, each morph is an AI agent that communicates in natural language.

Message passing, the idea that came from Smalltalk, is now really message passing. It’s not message passing with code, it’s message passing by actual talking.

The key innovation is that these aren’t tools manipulating objects — the objects themselves are AI agents that respond to natural language messages and can send messages to each other.

IntelliMorphic in Action

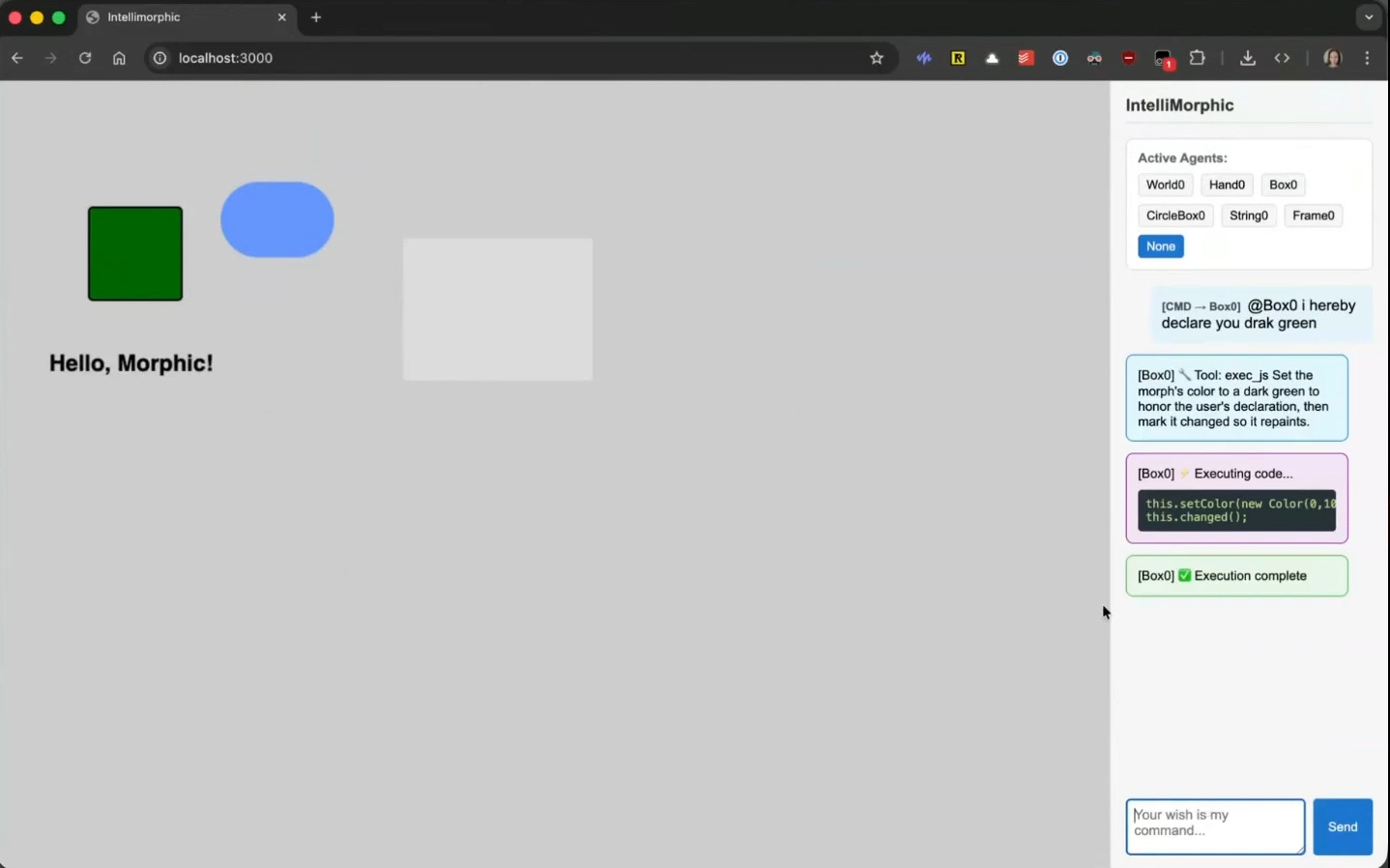

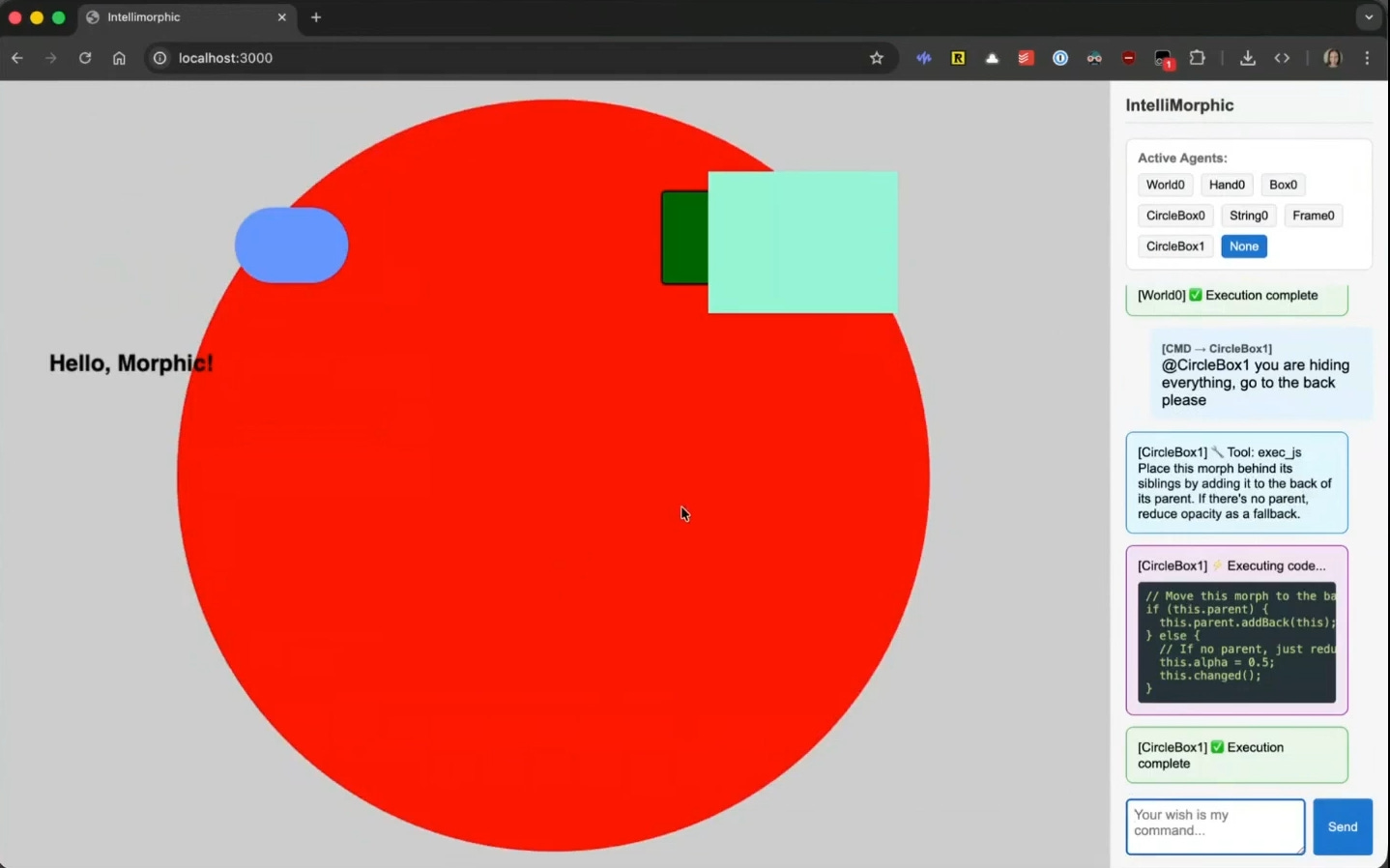

I showed the system running live. The interface looks properly retro — boxes, circles, frames — but each object is an AI agent accessible through a commander interface.

The first example was straightforward. I told Box0:

I hereby declare you dark green.

The box received the message, used its AI capabilities to determine it needed to set its colour property, and turned dark green. The crucial point: I wasn’t using AI with tools to modify things in the environment. I sent a message to the box, and it modified itself.
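The difference between a tool changing an object and an object changing itself can be sketched in a few lines of TypeScript. This is not IntelliMorphic's actual code: `AgentMorph`, `interpretMessage` and `KNOWN_COLOURS` are hypothetical names, and a keyword table stands in for the language model so the example stays deterministic.

```typescript
// Hypothetical sketch: none of these names come from IntelliMorphic or morphic.js.

type Patch = { colour?: string };

// Stand-in for a language model call. A real agent would send the text to a
// model; a keyword table keeps this example deterministic and offline.
const KNOWN_COLOURS: Record<string, string> = {
  "dark green": "#006400",
  "red": "#ff0000",
  "blue": "#0000ff",
};

function interpretMessage(text: string): Patch {
  const lower = text.toLowerCase();
  // Try longer colour names first so a multi-word name is preferred.
  const names = Object.keys(KNOWN_COLOURS).sort((a, b) => b.length - a.length);
  for (const colourName of names) {
    if (lower.indexOf(colourName) !== -1) {
      return { colour: KNOWN_COLOURS[colourName] };
    }
  }
  return {}; // nothing understood, so nothing changes
}

class AgentMorph {
  colour = "#cccccc";

  constructor(readonly label: string) {}

  // The morph receives plain text and decides for itself what to change.
  receive(text: string): void {
    const patch = interpretMessage(text);
    if (patch.colour !== undefined) {
      this.colour = patch.colour;
    }
  }
}

const box0 = new AgentMorph("Box0");
box0.receive("I hereby declare you dark green.");
// box0.colour is now "#006400"
```

The caller never touches `colour` directly. It only sends text, and the morph owns the decision about which of its properties to update.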

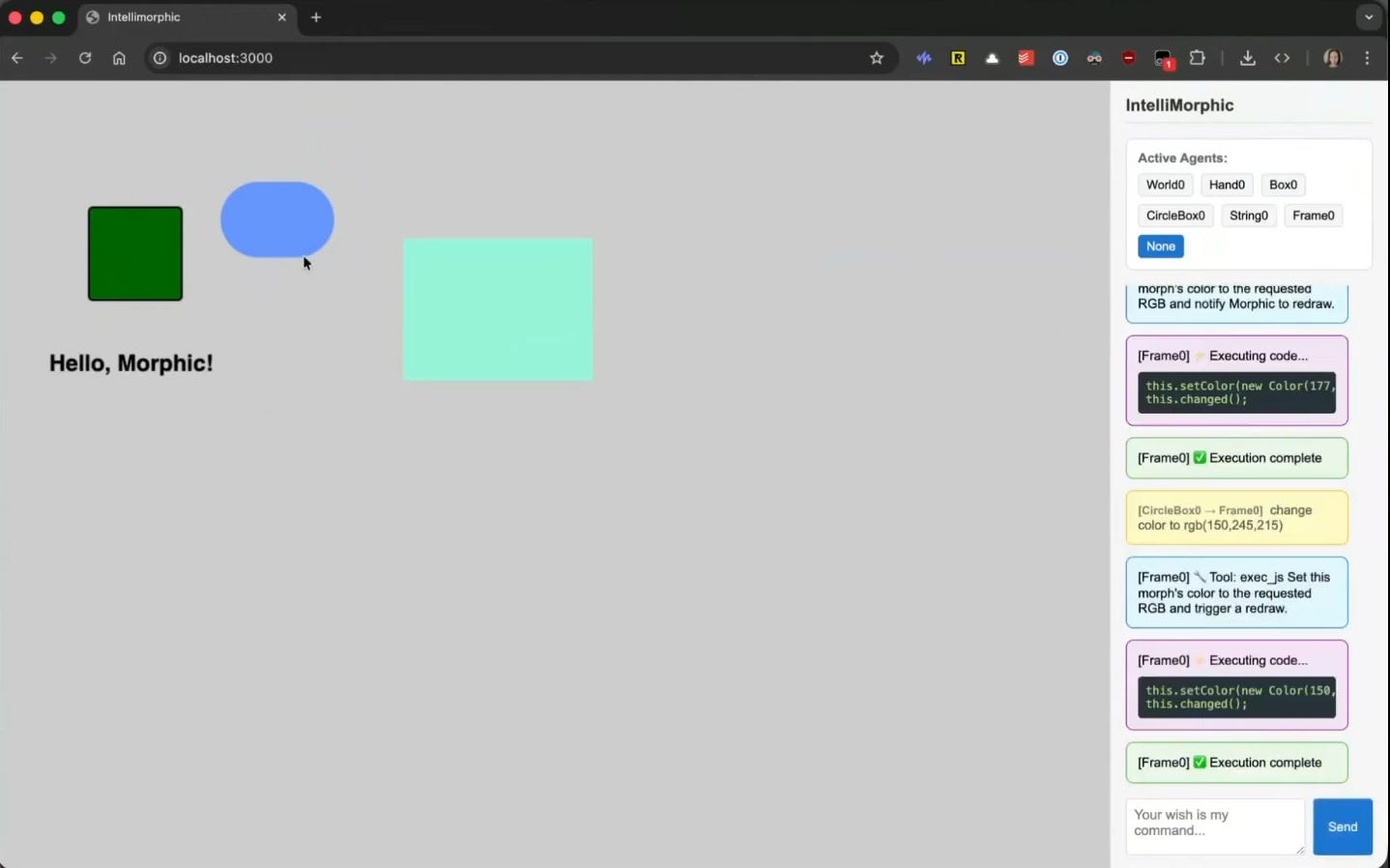

Next, I demonstrated inter-object communication:

@CircleBox0, when you are clicked, you should tell @Frame0 to change its colour to a random colour of your choosing.

When I clicked the morph, it sent a message to the frame, instructing it to change colour. The morphs were conversing in natural language.
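One way to picture this exchange is a registry of named morphs, where a click handler sends plain text to another morph instead of calling a method on it. The sketch below is an assumption about how such routing could look, not IntelliMorphic's implementation: `TalkingMorph`, `PALETTE`, `tell` and `onClick` are hypothetical, and a simple text search again stands in for the language model.

```typescript
// Hypothetical sketch: these names are illustrative and not taken from IntelliMorphic.

const PALETTE = ["crimson", "teal", "gold", "indigo"];

class TalkingMorph {
  colour = "grey";
  inbox: string[] = []; // every natural-language message this morph has received
  private clickRule: (() => void) | null = null;

  constructor(
    readonly label: string,
    private readonly registry: Record<string, TalkingMorph>,
  ) {
    registry[label] = this; // make this morph addressable by name
  }

  // Send plain text to another morph, addressed by name.
  tell(target: string, text: string): void {
    const morph = this.registry[target];
    if (morph) morph.receive(text);
  }

  receive(text: string): void {
    this.inbox.push(text);
    // Stand-in for the language model: look for a palette colour in the text.
    const lower = text.toLowerCase();
    for (const candidate of PALETTE) {
      if (lower.indexOf(candidate) !== -1) this.colour = candidate;
    }
  }

  // What an agent might install after being told "when you are clicked, ...".
  onClick(rule: () => void): void {
    this.clickRule = rule;
  }

  click(): void {
    if (this.clickRule) this.clickRule();
  }
}

const morphs: Record<string, TalkingMorph> = {};
const circleBox0 = new TalkingMorph("CircleBox0", morphs);
const frame0 = new TalkingMorph("Frame0", morphs);

circleBox0.onClick(() => {
  const pick = PALETTE[Math.floor(Math.random() * PALETTE.length)];
  circleBox0.tell("Frame0", `Please change your colour to ${pick}.`);
});

circleBox0.click(); // Frame0 receives one sentence and recolours itself
```

The only thing that crosses between the two morphs is a sentence. `CircleBox0` never sets the frame's colour itself.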

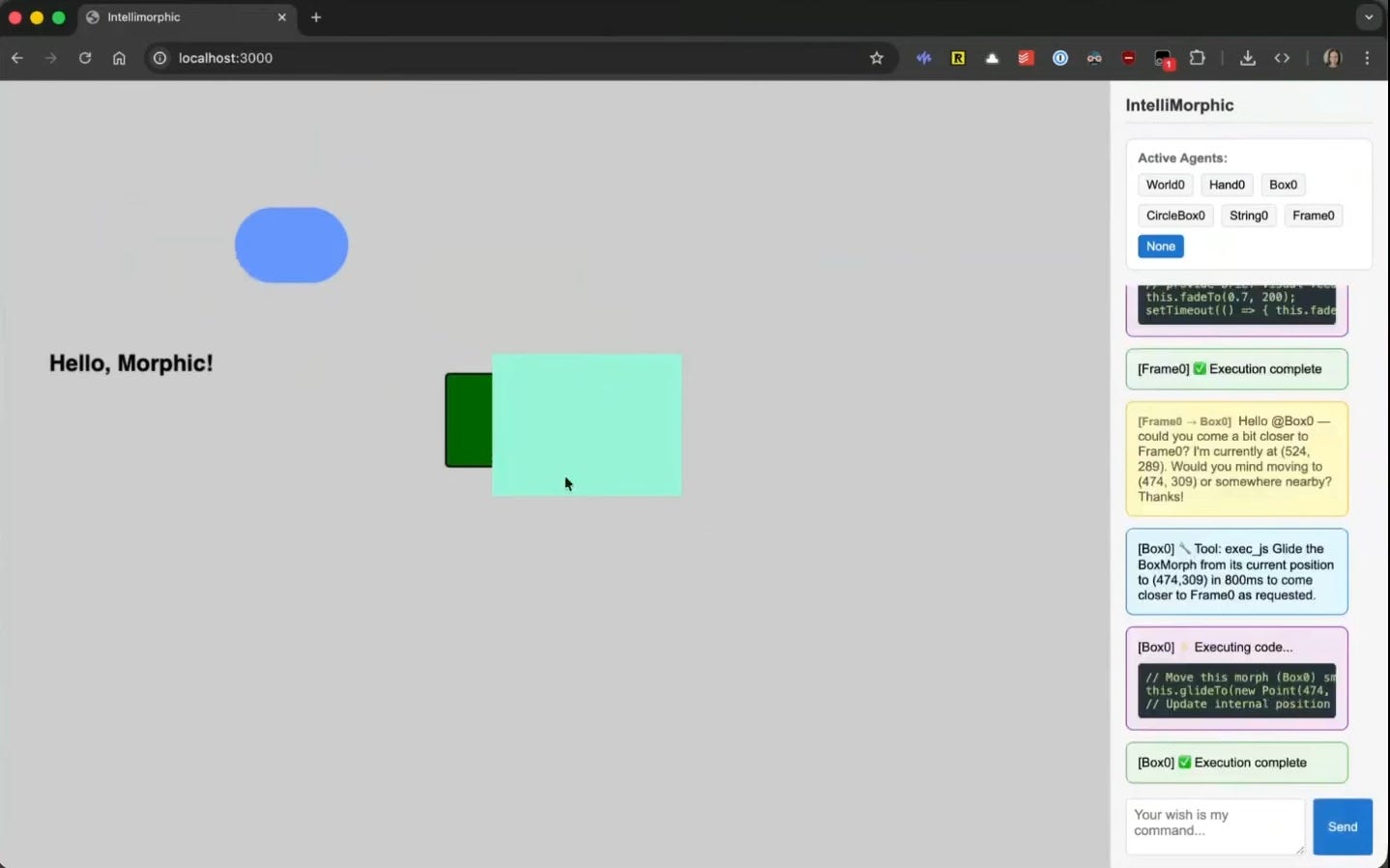

A more complex example showed interactive behaviour:

@Frame0, when I double-click you, you should politely ask @Box0 to move a bit closer to you. Tell it where you are and suggest that it moves nearby.

When I double-clicked the frame, it sent: “Hello, Box0. Could you please come a bit closer?” And the box moved towards it.

I could also create new objects by addressing the world itself:

@World0, you should have a large red circle within you.

The system created a new circle, though it initially obscured everything. I simply told the circle to move to the back, and it complied.
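Why the new circle hid everything, and why moving it to the back fixed that, comes down to draw order. Here is a minimal sketch, assuming the world keeps its children in a list and paints them first to last. `WorldSketch`, `Shape`, the label `Circle0` and the radius value are all hypothetical and not taken from morphic.js.

```typescript
// Hypothetical sketch: illustrative names only, not the morphic.js API.

interface Shape {
  label: string;
  kind: string;
  colour: string;
  radius?: number;
}

class WorldSketch {
  // Draw order: index 0 is painted first (furthest back), the last entry is on top.
  children: Shape[] = [];

  add(shape: Shape): void {
    this.children.push(shape); // new shapes land on top
  }

  moveToBack(label: string): void {
    const moved = this.children.filter((s) => s.label === label);
    const kept = this.children.filter((s) => s.label !== label);
    this.children = moved.concat(kept);
  }

  topmost(): Shape | undefined {
    return this.children[this.children.length - 1];
  }
}

const world0 = new WorldSketch();
world0.add({ label: "Box0", kind: "box", colour: "green" });
world0.add({ label: "Frame0", kind: "frame", colour: "grey" });

// "@World0, you should have a large red circle within you."
world0.add({ label: "Circle0", kind: "circle", colour: "red", radius: 400 });

// The circle is now last in the list, so it is painted over everything else.
// Telling it to move to the back puts it first in the draw order.
world0.moveToBack("Circle0");
```

After the last call the draw order is `Circle0`, `Box0`, `Frame0`, so the box and frame are visible again in front of the circle.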

Beyond Simple Shapes

I know these are just a few shapes on the screen, but imagine the power of a system like this with components that can present complex user interfaces, that can take actions, call different things.

Rather than preparing text files and hoping the system runs them correctly, we interact with objects directly in natural language. They modify themselves, respond to us, and interact with each other — all whilst the system runs continuously.

The system exists in a perpetual state of liveness. We’re not writing programmes to be compiled and executed; we’re conversing with a running system, asking objects to modify their behaviour and watching them adapt in real time.

Implications for AI-Native Development

This approach addresses the fundamental problem of vibe coding. When everything is a live object you can address in natural language, the gap between intent and implementation collapses. Non-programmers can build sophisticated systems because there’s no code to understand — only objects that respond to natural language instructions.

Such a system offers the ability to modify running systems directly, to experiment without compilation cycles, to see changes immediately. The system becomes truly conversational.

Future Directions

I acknowledged this is version 0.0.1 — barely a proof of concept. But the potential is substantial. As AI capabilities improve, these agent-objects could handle increasingly complex behaviours. They could present rich user interfaces, integrate with external services, coordinate sophisticated workflows.

The technical foundation is sound. The programming paradigm I drew from — Morphic’s visual programming — has proven itself over decades. AI simply makes it accessible in ways it never was before.

This represents the beginning of what I hope will be a longer journey exploring how AI can make live programming environments practical and powerful for a broader range of developers — and perhaps even for people who don’t consider themselves developers at all.

For the last few days I’ve had a long-running local AI project going - identifying and extracting details from about 60k family photos, screenshots and other accumulated detritus. Claude wrote the code for me in detailed sessions. I am an experienced dev and have a lot of opinions.

While the process runs in the background, I’ve been interrogating Claude about metrics and optimizations and trying different iterative improvements.

But maybe my intent is closer to your description.

Instead of “Claude, parallelize loading images to better utilize the GPU” I could mean “file loader, grab several images at once to give to the GPU.”

I’ll think about that this afternoon. Maybe I can name the different components and see if I can address them as entities instead of lifeless blocks.

Thanks for this, it really clarifies a lot. But how will we manage debugging and versioning in such a fluid, object-centric environment?