Inside AMP Code: How SourceGraph is Building AI-Powered Development

AI coding assistants are rapidly changing how developers work, and AMP Code, built by SourceGraph, is aiming to be at the forefront. This blog post dives into the approach AMP Code is taking, from its multi-model architecture to its focus on team collaboration and continuous improvement, based on an interview I did with Quinn Slack, the CEO of SourceGraph. We'll explore why SourceGraph believes AMP Code is poised to be the "very best coding agent" [04:07], how it's addressing the challenges of AI-powered development, and how my experience with Amp has compared to Claude Code.

Before we dive in, I want to highlight a couple of things about SourceGraph that we didn't talk about in the interview but that are related and wholesome.

Amp is doing some pretty cool community-building things. For example, they are giving all students in our upcoming AI coding course free credits to try Amp for the duration of the course. They are also supporting OSS creators in their OSS work.

Amp supports open-source developers. I personally receive no credits from them, but I am aware they are supporting two good friends of mine and legendary OSS developers, Danny and Audrey, as they develop a new web development framework called air that is built on FastAPI. It's great to see commercial companies that have proprietary products as part of their main business model finding other ways to support the open-source ecosystem.

Interview Introduction

I recently had the opportunity to chat with Quinn, the CEO of SourceGraph, about AMP Code. I wanted to understand what sets it apart from other AI coding tools like Claude Code, which I use frequently. I started by explaining why I began looking into Amp.

I explain in more detail in the video, but this is for people who don't want to watch the whole thing, so I'll keep it brief.

I tried Amp on an OSS task and was surprised at how smooth it was

I dove into a comparison with Claude Code to determine whether I had just gotten lucky with the task

Bryan said Amp was bad at Infra, so I used that as a use-case for comparison

I found I was getting better results with Amp and wanted to know why

So I decided to talk to Amp directly to get answers

Amp's Positioning

We started with the obvious question: why build Amp Code when there are other coding agents, and what is different about its goals?

"We built AMP Code because we felt that it needed to exist. There's nobody building anything like it that was trying to be the very best coding agent unconstrained in every way." - Quinn [04:07]

SourceGraph, initially focused on code search, has expanded into AI-powered development assistance. This evolution allows them to build AMP with a long-term vision, focusing on future-proofing development workflows and addressing the needs of serious developers. AMP Code is designed not just for today, but for how everyone will be coding 12 months from now [04:33].

Part of the reason they believe they can do that is that building the best coding agent is their sole focus, whereas other companies may have competing business pressures. For example, if GPT-5, GPT-6, or Gemini 3 ends up being the best coding model, the Claude Code team may have business reasons not to default Claude Code to non-Anthropic models.

This seems important to me because AI is changing so quickly that nobody can accurately tell you what the best model or approach will be in 12 months, so flexibility in approach seems essential.

The Multi-Model Advantage

"There's so many models being used under the hood right now, and that's only going to grow," - Quinn [31:53]

“AMP automatically selects the best model for the job, without requiring the user to choose from a clunky dropdown menu manually. The goal is simplicity for the user” - Quinn [11:26].

"For the same price, you can have it scan through a lot more of your code and then present the files that might be relevant to the main smarter model for further investigation," - Quinn [30:36]

One of AMP Code's key differentiators is its multi-model architecture. Instead of relying on a single AI model, AMP leverages several models, each optimized for specific tasks.

A notable example is the "Oracle" feature, which utilizes a more powerful model for particularly complex problems. Users can explicitly invoke the Oracle by saying, "use the Oracle to do this in AMP" [26:45]. Otherwise, AMP can switch to it automatically if it senses the task is particularly tricky [26:54]. The Oracle currently uses o3, but the team will switch to another model (possibly even the new GPT-5) if it turns out to be better [28:46].

A key point that Quinn highlighted about their approach is that even though they have been testing the new model for weeks, they are still not entirely sure it's better. They do not just add an extra option to a drop-down or swap out the provider. They change a significant amount of the code to improve model-specific behaviors. Several examples of this came up in the interview: how tool calling is exposed to the agent, relative vs absolute path adjustments that make small improvements, how URIs are or aren't exposed to the model based on its behavior, and so on. Their approach to evaluation is to be aware of leaderboards, but the bulk of their information comes from taking detailed notes of trace

This dynamic model selection allows AMP to optimize for speed, cost, and accuracy, depending on the task at hand.
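To make the routing idea concrete, here is a toy sketch in Python. It is not Amp's implementation, which was not shown in the interview. The model names, the `Task` type, the `pick_model` and `prefilter_files` functions, and the heuristics (a phrase match for explicit Oracle requests, a failure counter for automatic escalation, keyword overlap as a stand-in for the cheaper scanning model) are all my own assumptions for illustration.

```python
from dataclasses import dataclass

# Hypothetical model tiers; placeholder names, not Amp's real configuration.
MAIN_MODEL = "main-agent"
ORACLE_MODEL = "oracle"


@dataclass
class Task:
    prompt: str
    failed_attempts: int = 0  # times the main model has already struggled


def pick_model(task: Task, max_failures: int = 2) -> str:
    """Route a task to a model tier using simple, made-up heuristics."""
    text = task.prompt.lower()
    # Explicit request: the user asked for the Oracle by name.
    if "use the oracle" in text:
        return ORACLE_MODEL
    # Automatic escalation: repeated failures suggest the task is tricky.
    if task.failed_attempts >= max_failures:
        return ORACLE_MODEL
    return MAIN_MODEL


def prefilter_files(files: dict[str, str], query: str, limit: int = 3) -> list[str]:
    """Stand-in for a cheap scanning model: rank files by keyword overlap."""
    terms = set(query.lower().split())
    scored = []
    for path, content in files.items():
        score = len(terms & set(content.lower().split()))
        if score > 0:
            scored.append((score, path))
    # Highest score first; ties broken alphabetically for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [path for _, path in scored[:limit]]
```

In this sketch the cheap pre-filter narrows a large set of files down to a short list, and only that short list would be handed to the more expensive model, which is the cost trade-off Quinn describes at [30:36].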

Team Collaboration Features

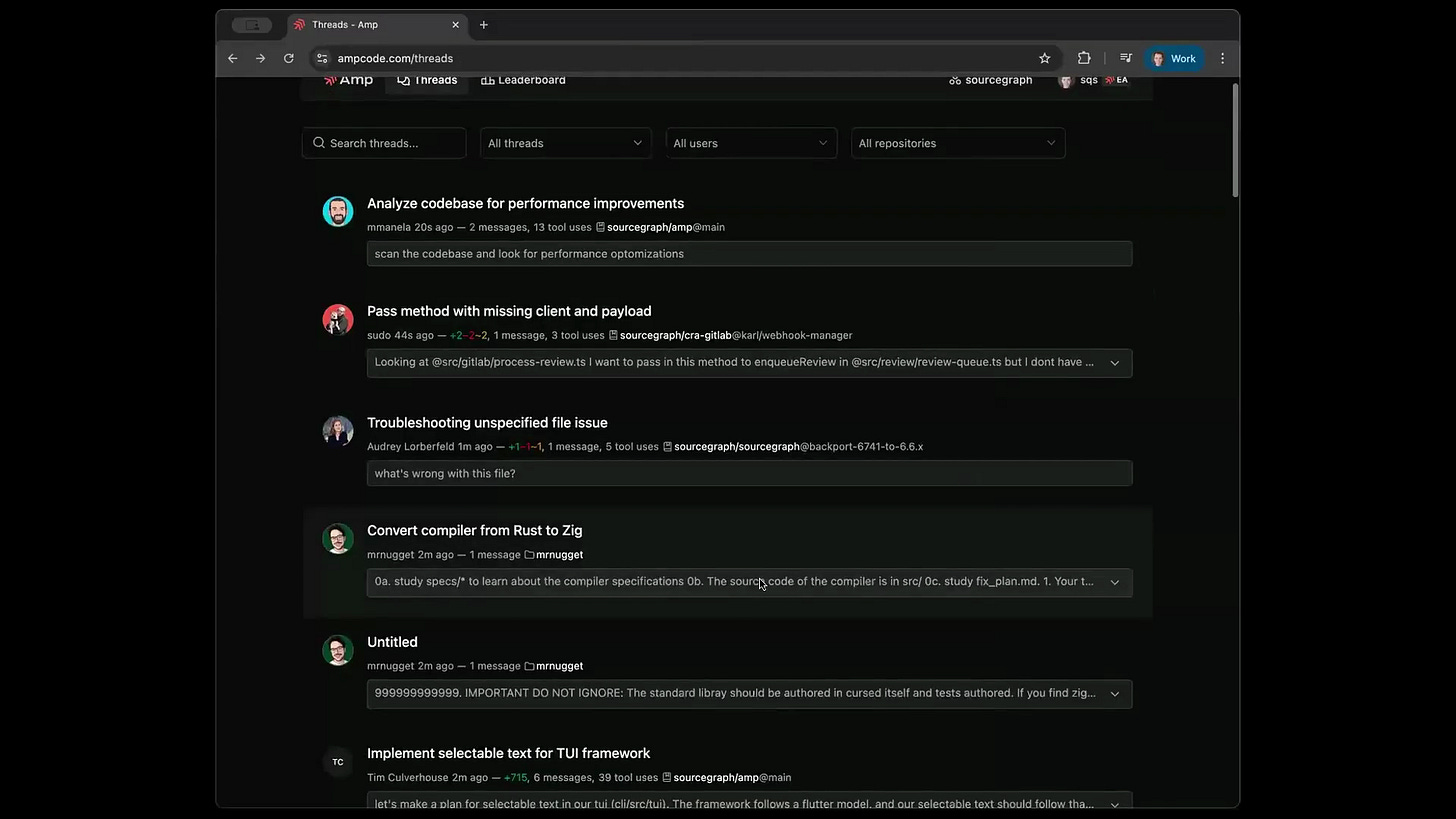

"There's nothing like getting to see how the smartest people on your team are actually using coding agents," - Quinn [09:57]

The first feature Quinn brought up unprompted was team collaboration. What a company leads with is always telling, as it speaks to its priorities and what it thinks is essential. Amp has unique "multiplayer" capabilities that allow developers to learn from each other and share best practices.

This can be useful for upskilling, onboarding, understanding the intent behind PRs and issues, and more.

Quinn highlighted that you don't get this in Cursor or Claude Code. There are bolt-on ways to get at that information (watching the JSONL files), but it's not native. Companies such as SpecStory have built tooling to address this limitation for other coding agents, but Amp is the only one I know of that builds this directly into its product.

Real-World Performance

Earlier, I mentioned I did some comparisons. I recently conducted a comparative analysis against Claude Code, using the same initial prompt to build and deploy a simple application. The goal was to assess not just functionality, but also factors like memory provisioning, health check implementation, and CI/CD integration. I showed this to Quinn to discuss.

While both tools were able to complete the task, AMP Code demonstrated several advantages. These findings suggest that AMP Code's focus on detail and optimization translates into improved developer experience.

For more details on the task, the prompt, and my thoughts, check out the post dedicated to that analysis [here](https://elite-ai-assisted-coding.dev/p/amp-vs-claude-code-for-infra).

Future Development

"Everything is changing" - Quinn [37:16]

Quinn emphasizes that "everything is changing" [37:16] and that AMP Code doesn't have a roadmap, because it's impossible to have one and stick to it given how rapidly AI is changing.

Some key areas of future development include:

The team is currently working on the best way to integrate GPT-5 [37:31] and will continue to watch new models as well [37:58].

I’m hoping for PDF support in its tools

More model integration and evaluation

Practical tips

We ended with practical tips for people who want to try it. Quinn's advice is:

There's a $10 free tier, and that can get you a long way, so start with that.

You are always in control of your spend, and auto-reloading of credits is off by default.

The thing most new users miss on their first use is the Oracle. When you have a particularly tricky task or something the model is struggling with, prompt it to use the Oracle for better reasoning.

A technique that power users rely on more than newer users is going back up the chain to edit an initial prompt with clearer instructions, rather than only continuing on in the thread.