Writing Strong Content with AI

Lightning Talk with Hamel Husain

Introduction: Writing as a Business Foundation

In a recent lightning talk, Hamel Husain demonstrated his approach to AI-assisted writing — a component that drives his independent consulting business. As Hamel explained at the outset:

"My business depends upon good writing because it's important that people get to know who you are, what you're interested in, what your expertise is... I would probably say my entire business is driven off of writing."

This writing encompasses blog posts, emails, talk notes, and social media posts — all enhanced but not entirely automated by AI tools. Hamel emphasized that while AI plays a role, the process remains "human in the loop." The session provided a detailed look at how a practitioner manages AI-assisted content creation while maintaining quality and authenticity.

The Monorepo Strategy for Writing Projects

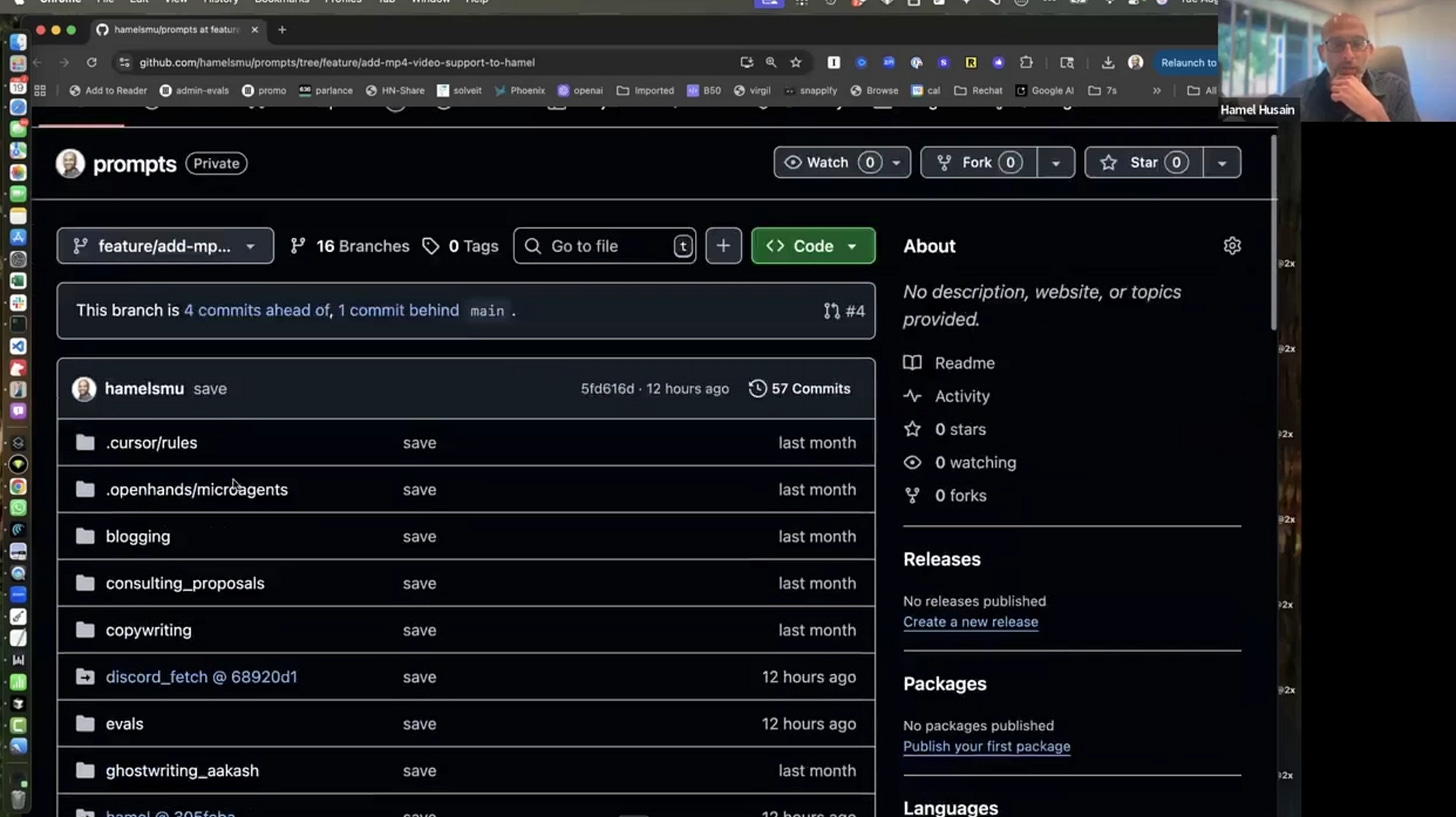

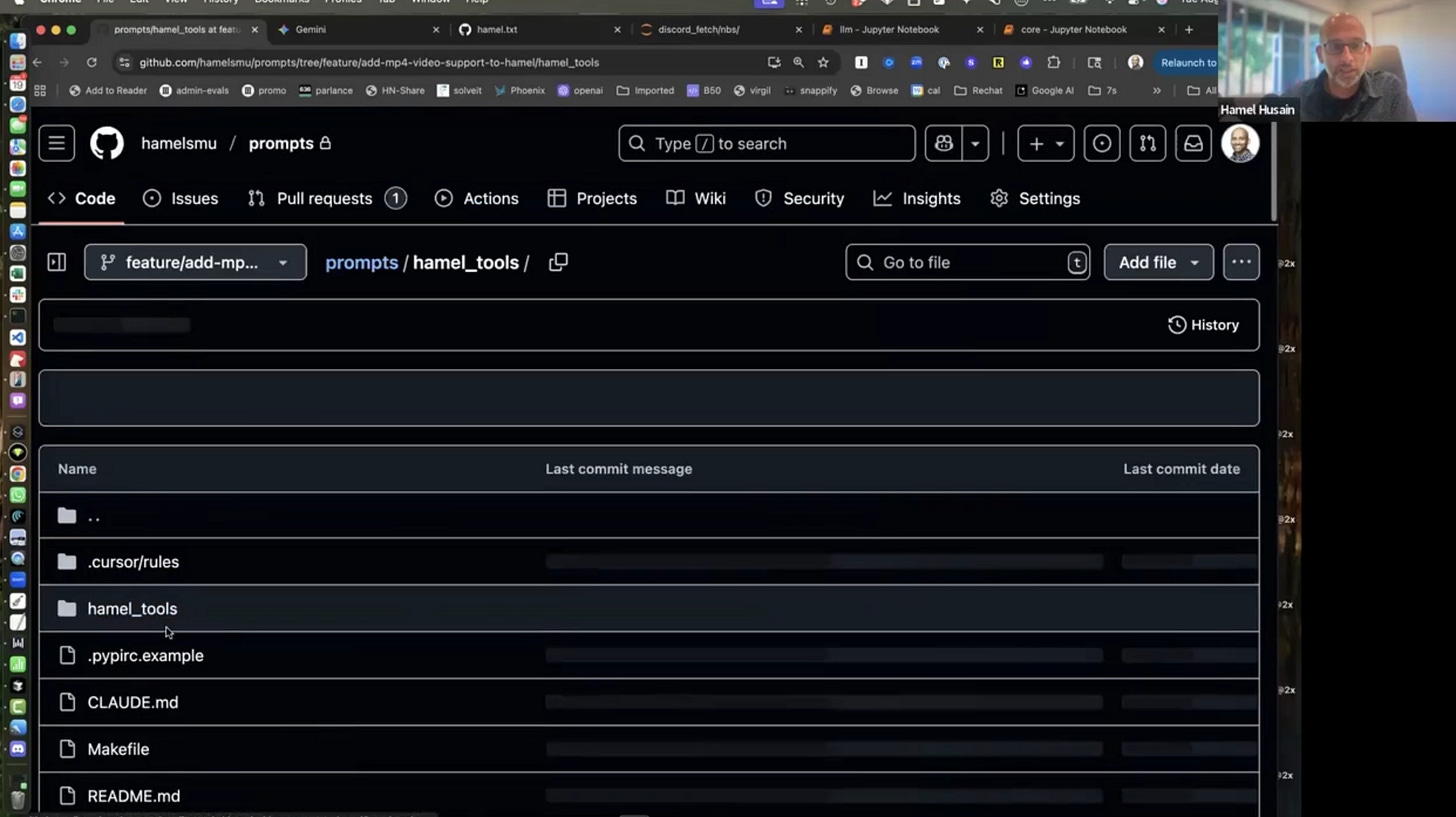

Hamel began by showcasing his monorepo approach to managing writing projects. While not typically a monorepo advocate, he found this structure necessary for writing work because content rarely exists in isolation.

"You'll find that it's hard to write in isolation. And it's really hard to stuff everything into a Claude project, honestly. Because I have consulting proposals. But some of my consulting proposals, I may want to bring in something from one of my blogs."

His monorepo contains both ad hoc projects and permanent ones, allowing him to reference previous work when needed. For instance, when writing a consulting proposal, he can pull in relevant blog posts or examples from other projects. This interconnected approach enables the AI assistant to access broader context and connect different pieces of content.
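As a rough illustration of pulling context from several projects in one repository, the sketch below concatenates markdown files from chosen folders into a single labeled string. The folder names (`blog`, `proposals`), the `gather_context` helper, and the file contents are all hypothetical; the talk does not show how Hamel's repository is laid out.

```python
import tempfile
from pathlib import Path

def gather_context(repo_root: Path, projects: list[str], pattern: str = "*.md") -> str:
    """Concatenate matching files from the given project folders, each with a path header."""
    chunks = []
    for project in projects:
        for path in sorted((repo_root / project).rglob(pattern)):
            rel = path.relative_to(repo_root).as_posix()
            chunks.append(f"--- {rel} ---\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(chunks)

# Build a tiny throwaway repo so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "blog").mkdir()
    (root / "blog" / "evals.md").write_text("Look at your data.", encoding="utf-8")
    (root / "proposals").mkdir()
    (root / "proposals" / "acme.md").write_text("Scope: eval audit.", encoding="utf-8")
    context = gather_context(root, ["proposals", "blog"])

print(context)
```

Listing projects explicitly, rather than globbing the whole repository, keeps the selection deliberate.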

The monorepo includes various rules and configurations, though Hamel admitted he hasn't yet integrated Eleanor's Ruler tool — something he noted was "high on my to-do list."

Defending Against "Slop"

A central concern in AI-assisted writing is avoiding what Hamel calls "slop" — content that immediately reads as AI-generated. He defined slop as "low information per token" but also simply as content that "looks like AI."

His solution involves using Google Gemini instead of the more common ChatGPT or Claude. His theory:

"I think a lot of people are just not using Gemini. I think most people are using ChatGPT or Claude. And so you use ChatGPT or Claude, your writing sounds like ChatGPT or Claude. Whereas you use Gemini, your writing doesn't maybe sound like Gemini... except that not many people are using Gemini relative to the other two."

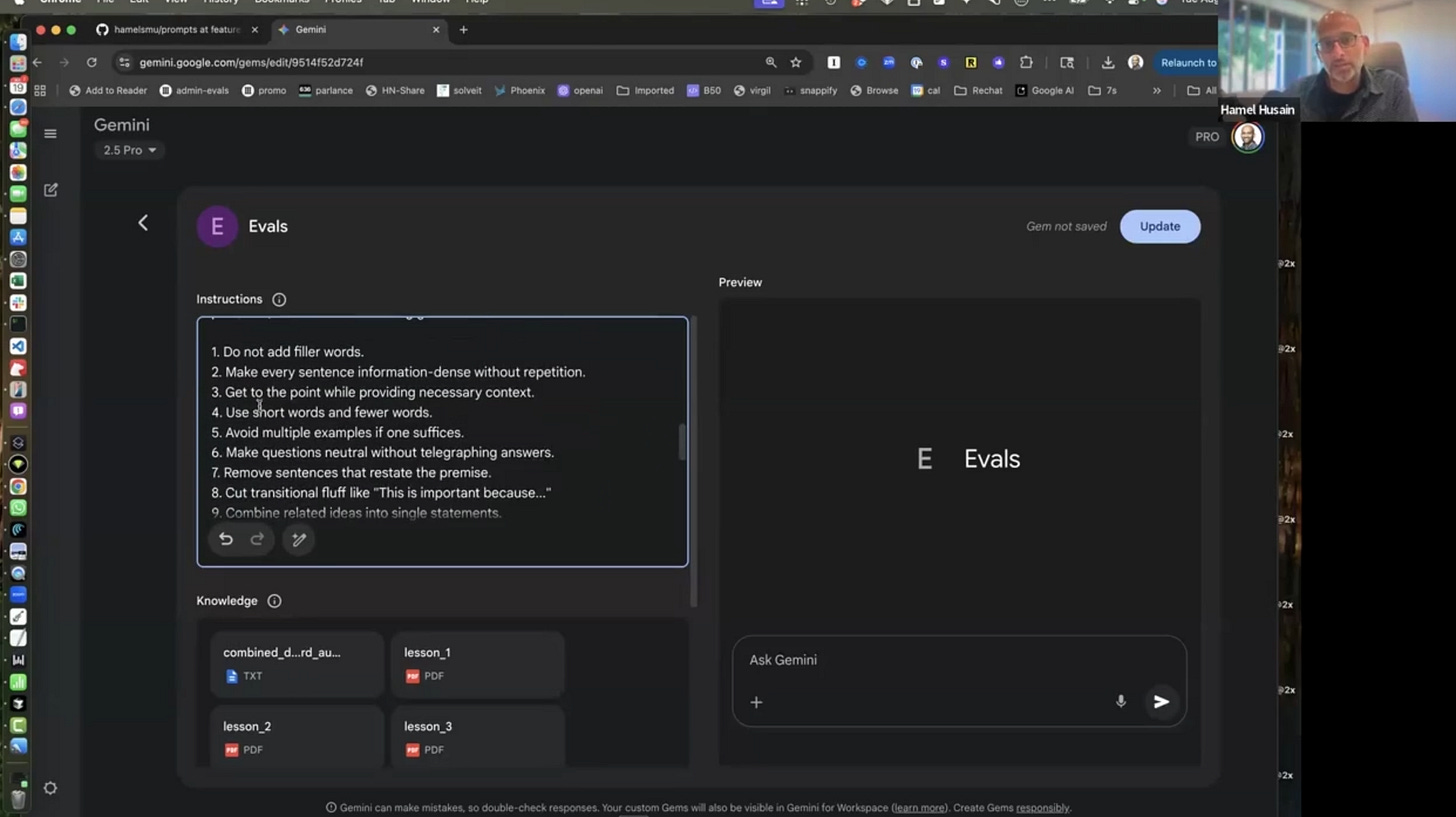

Writing Guidelines Configuration

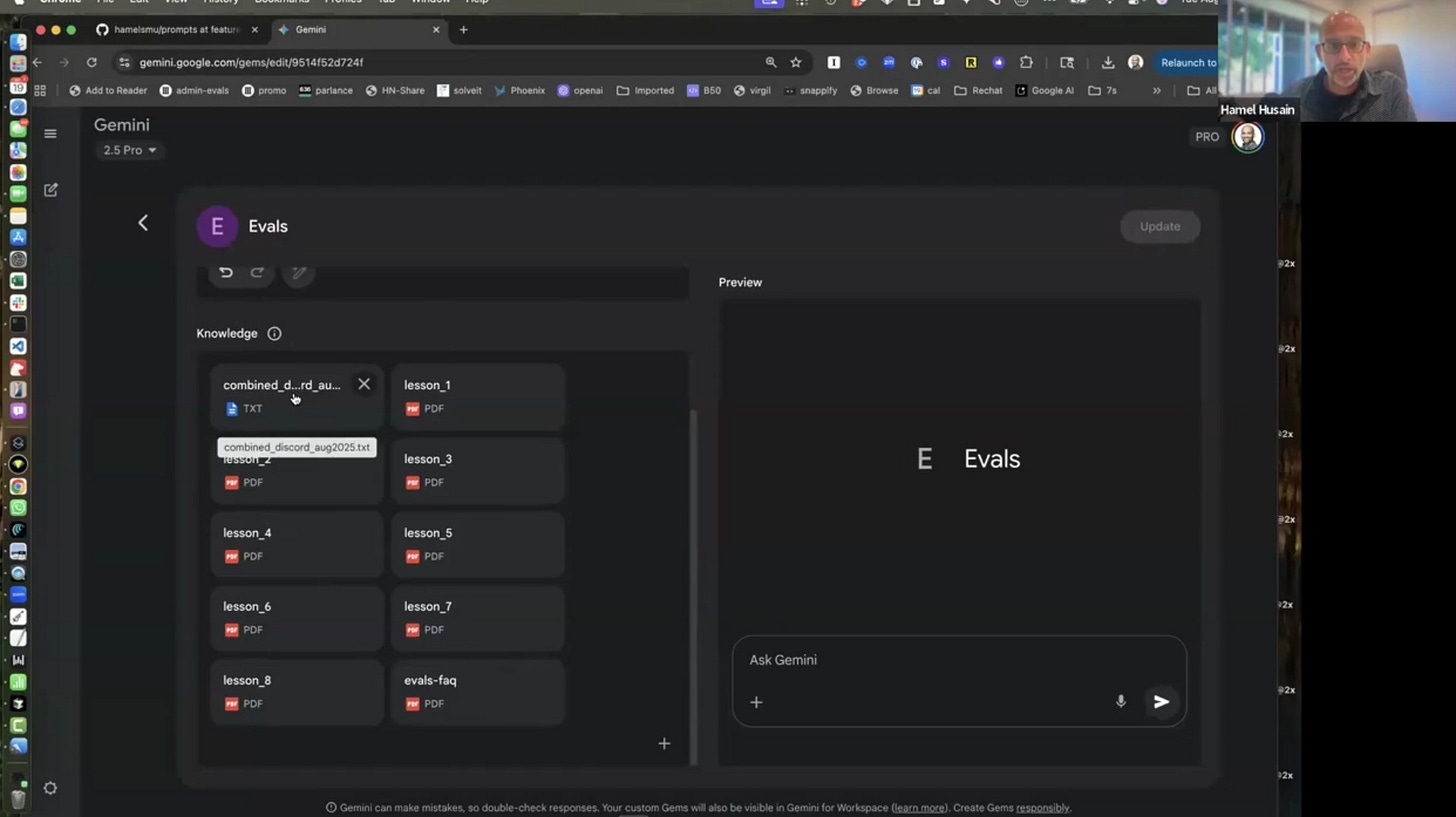

Hamel demonstrated his Gemini project configured for helping with LLM evaluations content. The project includes carefully curated context from Discord conversations, course lessons, and an evaluations FAQ. His writing guidelines prompt includes specific directives:

Do not add filler words

Make every sentence information dense

Get to the point

Use short words and fewer words

Avoid multiple examples

Don't use phrases like "it's important to note"

Avoid unnecessary transitions

As Hamel explained, these guidelines serve as a defense against AI-generated writing patterns that make content feel generic or artificial.
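Guidelines like these live in the prompt, but a draft can also be checked mechanically after the fact. The sketch below flags banned phrases by line. Only "it's important to note" comes from the guidelines above; the other phrases and the `find_banned_phrases` helper are illustrative assumptions, not part of Hamel's setup.

```python
# Only the first phrase is from the guidelines; the rest are illustrative.
BANNED_PHRASES = [
    "it's important to note",
    "it is important to note",
    "in conclusion",
]

def find_banned_phrases(text: str, phrases: list[str] = BANNED_PHRASES) -> list[tuple[int, str]]:
    """Return (line_number, phrase) for every banned phrase found, case-insensitively."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        lowered = line.lower()
        for phrase in phrases:
            if phrase in lowered:
                hits.append((line_no, phrase))
    return hits

draft = "Evals start with looking at data.\nIt's important to note that traces vary."
print(find_banned_phrases(draft))
```

A check like this catches only literal phrases; it does not replace reading the draft.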

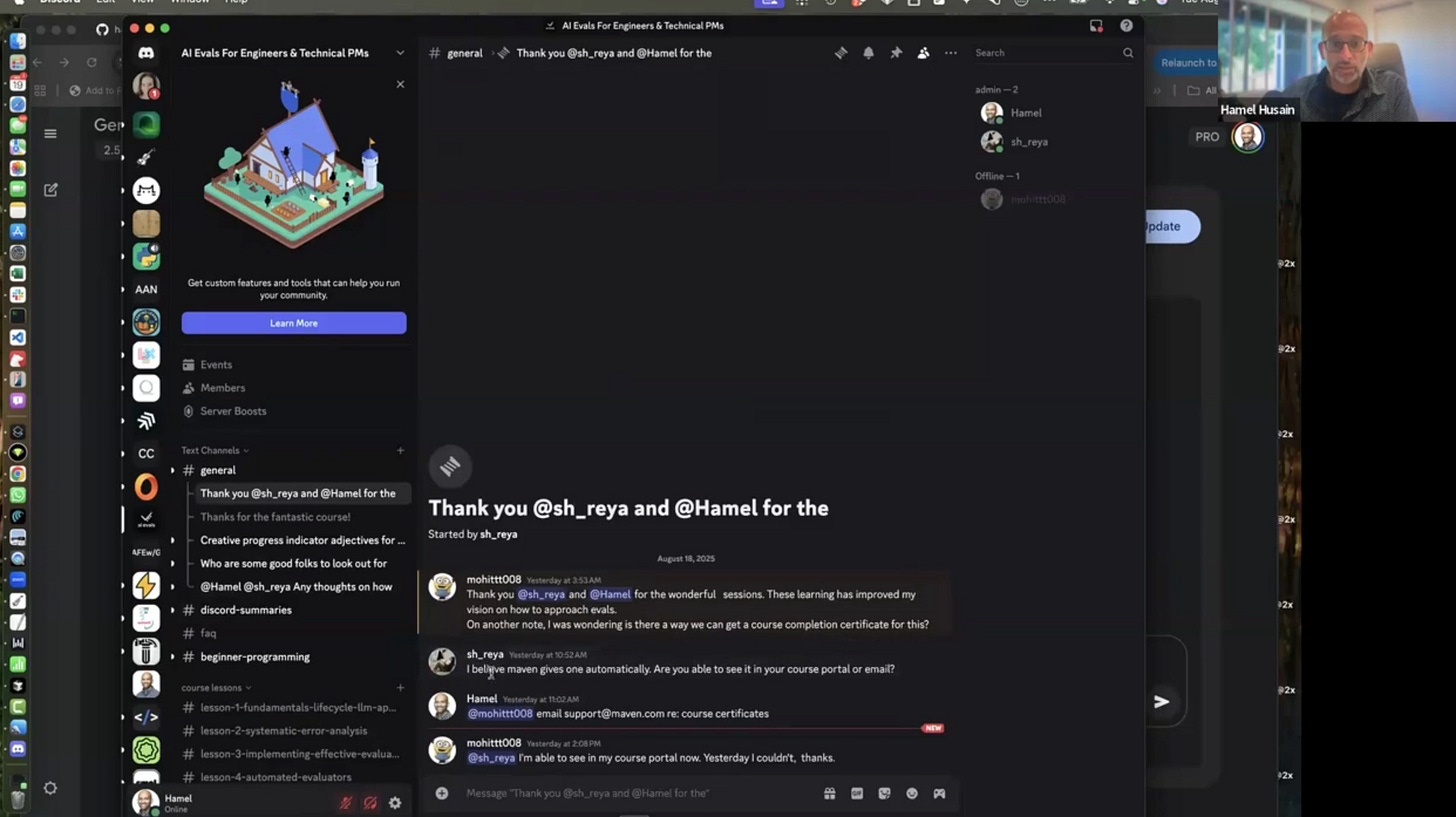

The Art of Context Curation: Discord Data Example

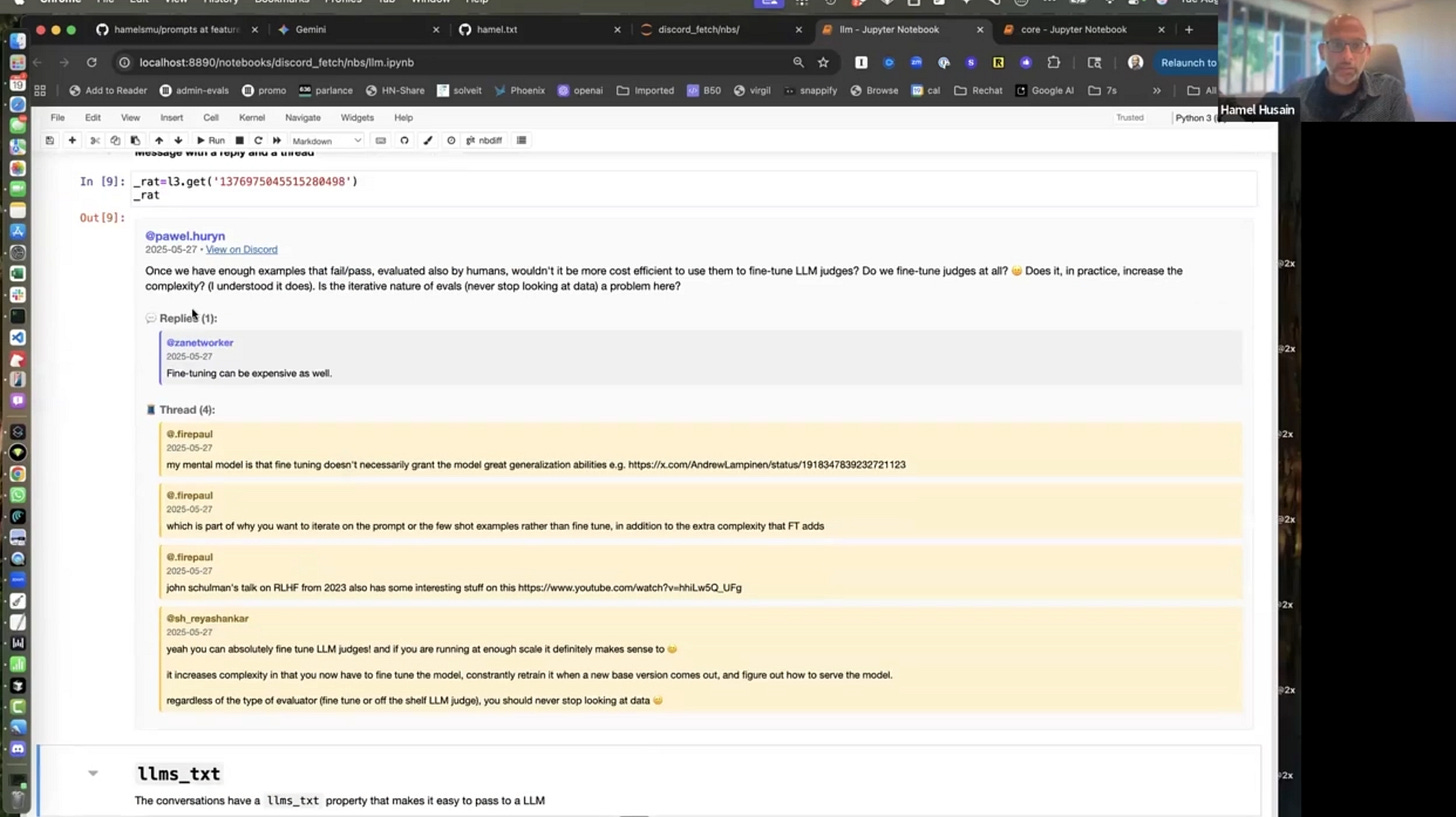

A significant portion of the talk focused on Hamel's approach to curating context from Discord channels. His AI evals course has generated tens of thousands of messages across multiple channels — valuable information that requires careful processing.

"You don't want to just dump the entire contents of this Discord into an LLM. That would be a really big mistake... everything you put into your context should be carefully curated, especially when it comes to writing."

The Data Structure and Visualization

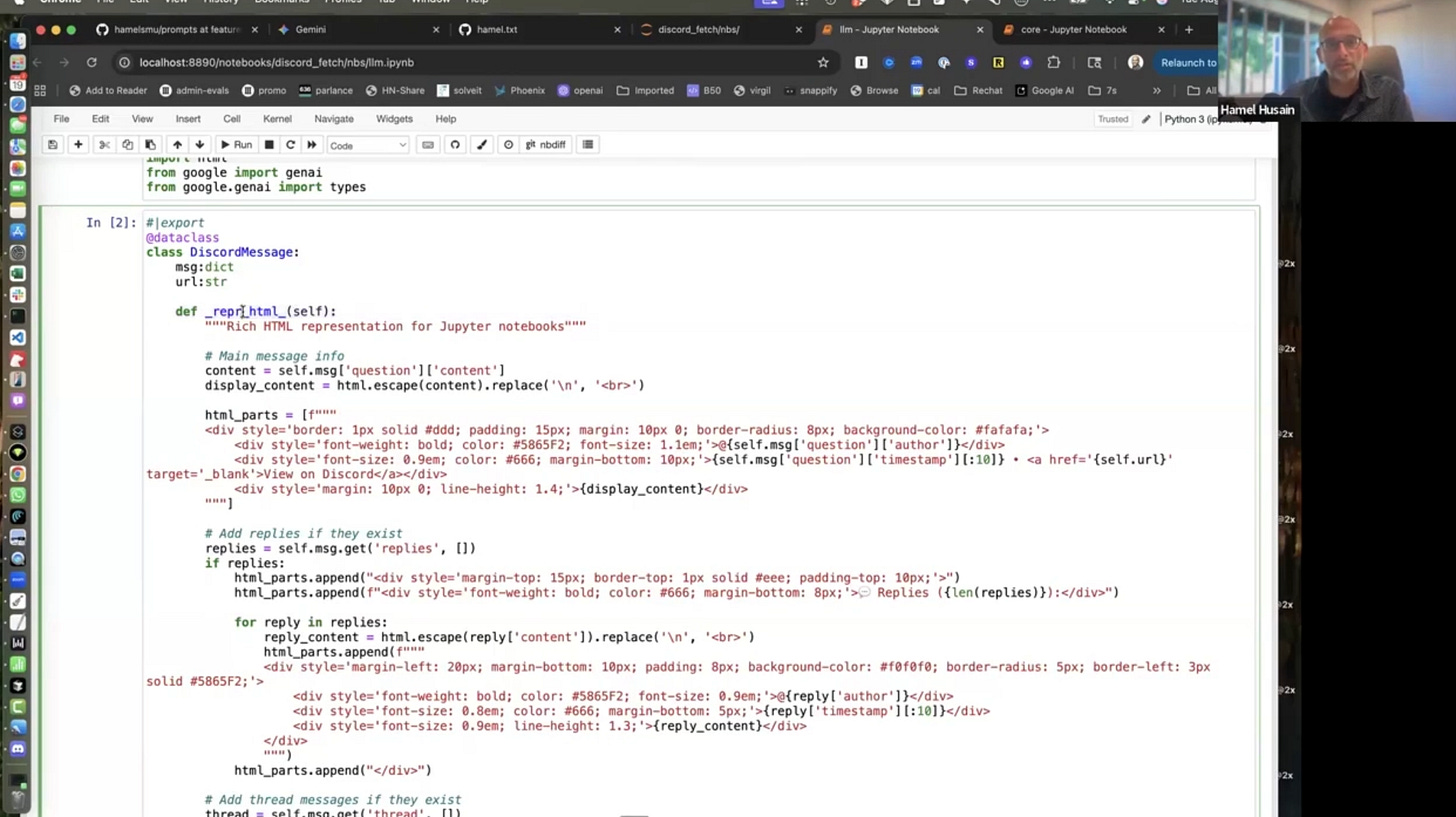

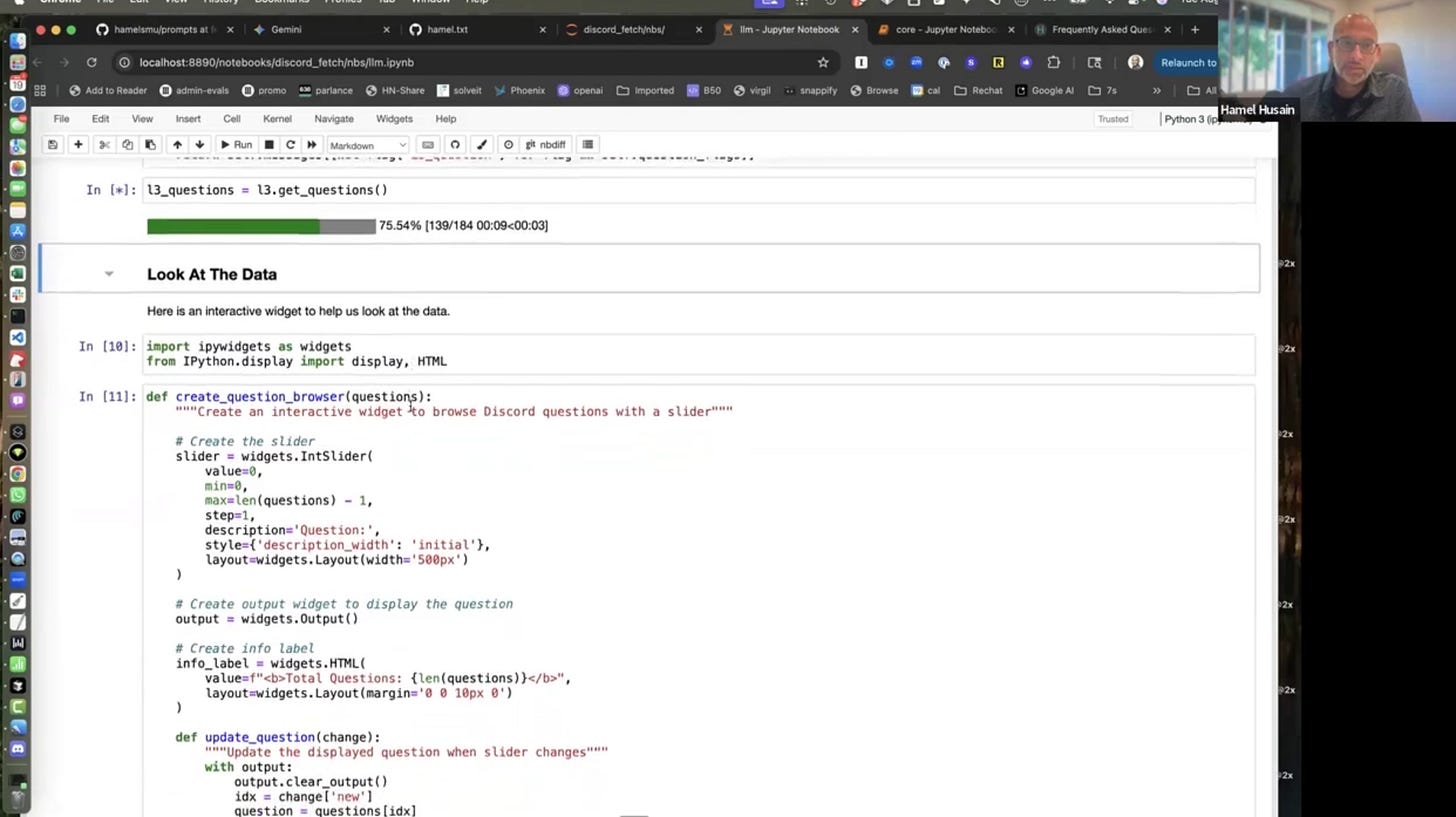

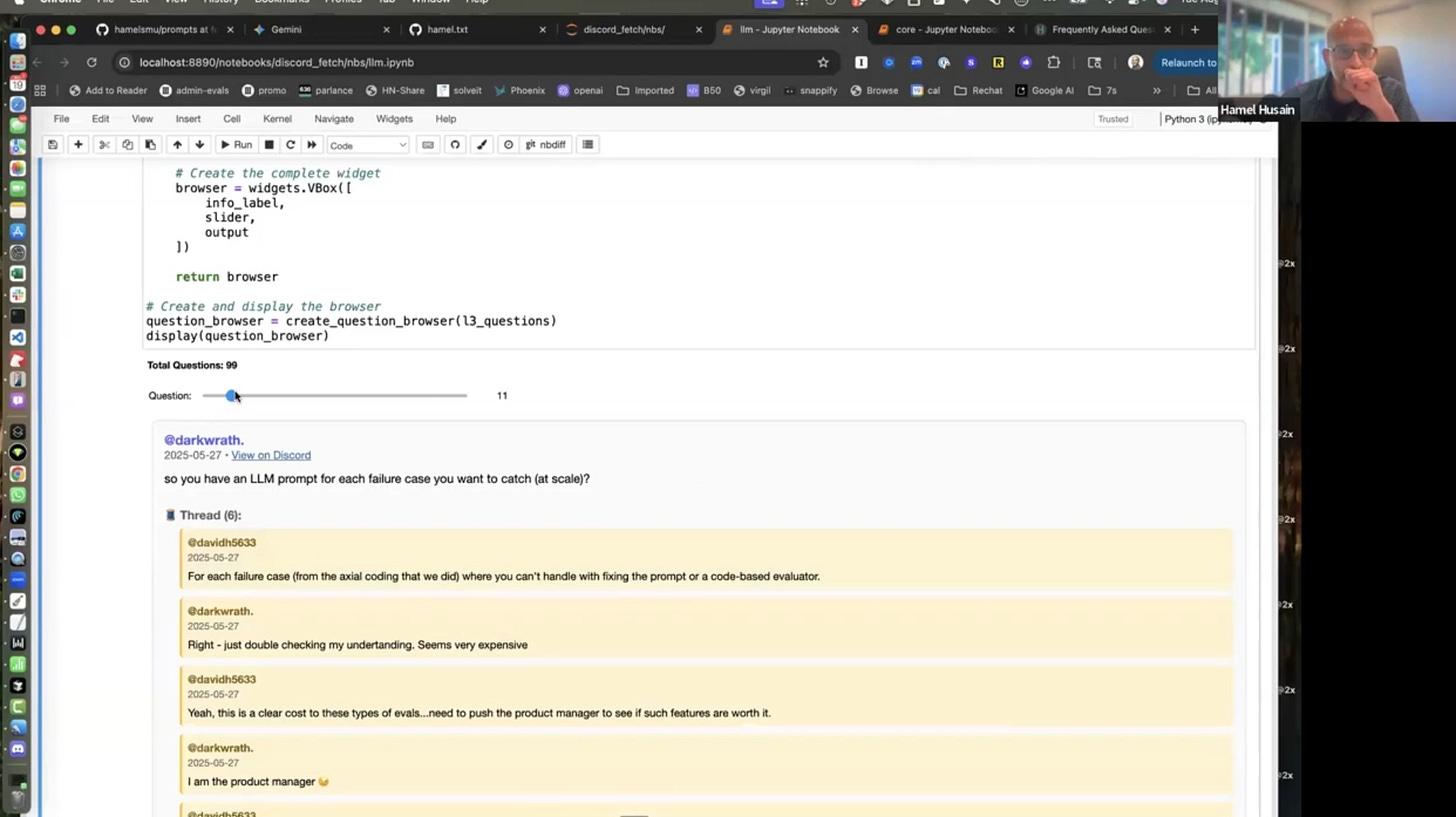

Hamel demonstrated his data processing workflow using Jupyter notebooks. When asked about the polished rendering in his notebooks, he explained:

"Jupyter has this `_repr_html_` method. And this is a special method that you can attach to any object in Jupyter and you can render data however you want... I had AI help me do this. There's no way that I would have done this myself."

This custom rendering allows him to view Discord threads in a format that resembles the actual Discord interface, making it easier to review and understand the data structure during processing.
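For readers unfamiliar with the hook, here is a minimal sketch of `_repr_html_` on a thread object. The `Message` and `Thread` classes and the inline styling are assumptions made for illustration; Hamel's actual rendering code was not shown in detail.

```python
from dataclasses import dataclass, field
from html import escape

@dataclass
class Message:
    author: str
    text: str

@dataclass
class Thread:
    parent: Message
    replies: list[Message] = field(default_factory=list)

    def _repr_html_(self) -> str:
        """Jupyter calls this to display the object as HTML in a notebook cell."""
        def row(msg: Message, indent: int) -> str:
            # Escape user text so message content cannot inject markup.
            return (f'<div style="margin-left:{indent}px">'
                    f'<b>{escape(msg.author)}</b>: {escape(msg.text)}</div>')
        rows = [row(self.parent, 0)] + [row(r, 24) for r in self.replies]
        return "\n".join(rows)

thread = Thread(Message("alice", "How many traces should I review?"),
                [Message("bob", "Start with <100> and look for patterns.")])
html = thread._repr_html_()
print(html)
```

In a notebook, leaving `thread` as the last expression in a cell is enough for the HTML to render; outside a notebook the method is just a regular function returning a string.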

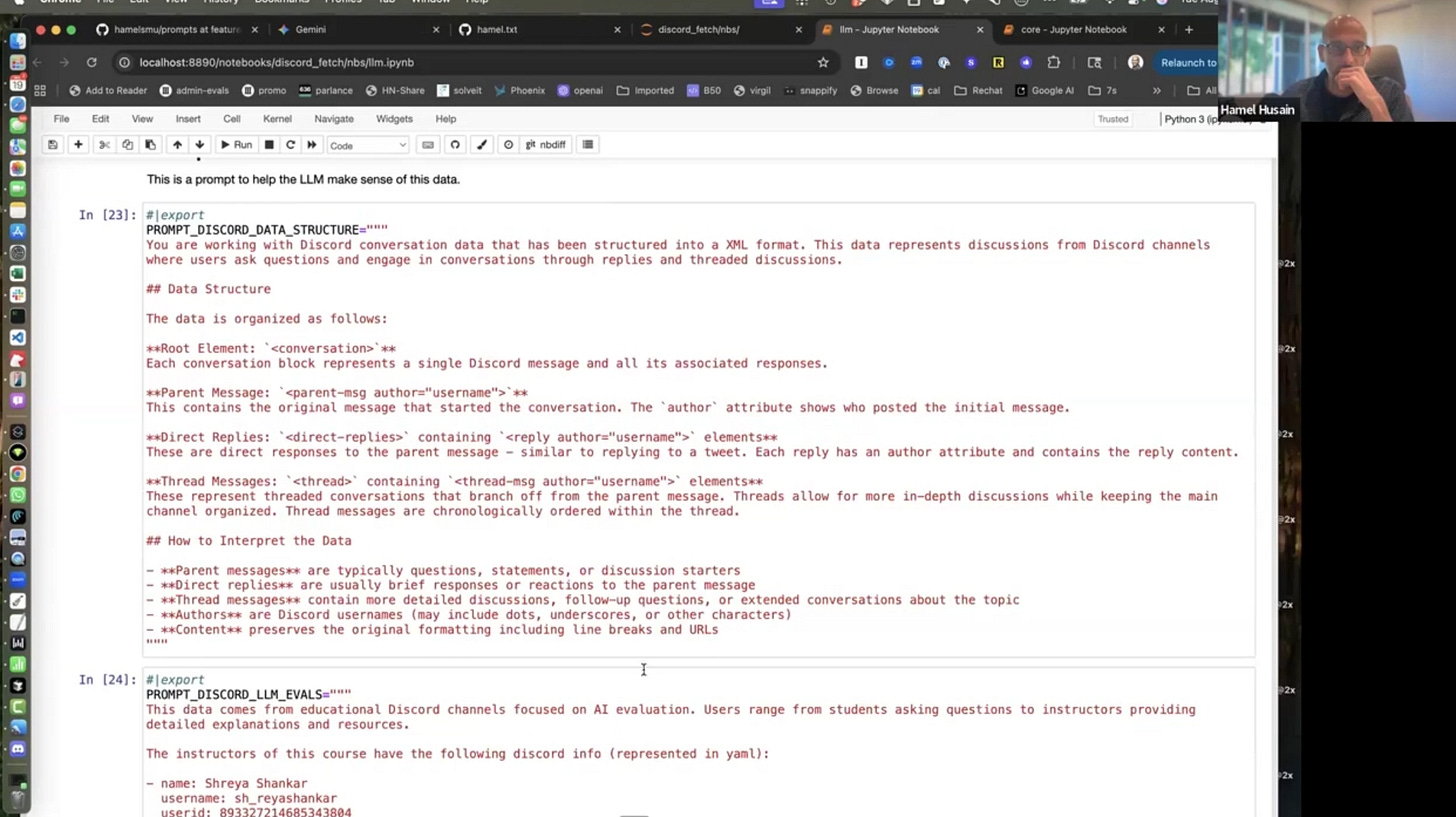

The Classification Prompt

For filtering relevant content, Hamel uses a detailed classification prompt that includes:

"Classify the following conversation as containing a question or discussion about LLM evals, machine learning, data science... The purpose is to filter conversations that can serve as useful context for an FAQ."

The prompt specifically excludes:

Reflective statements or personal takeaways

Pure social banter

Course logistics

Thank you messages
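The criteria above could be assembled into a prompt along these lines. This is a sketch under assumptions: the `Conversation` class, the YES/NO answer format, and the prompt wording are hypothetical paraphrases, not Hamel's actual prompt. The conversation fields follow the structure he describes to the model (parent message, direct replies, thread messages).

```python
from dataclasses import dataclass, field

@dataclass
class Conversation:
    """Hypothetical container with a parent message, direct replies, and thread messages."""
    parent_message: str
    direct_replies: list[str] = field(default_factory=list)
    thread_messages: list[str] = field(default_factory=list)

# Exclusion categories taken from the list above.
EXCLUDED = [
    "Reflective statements or personal takeaways",
    "Pure social banter",
    "Course logistics",
    "Thank you messages",
]

def build_classification_prompt(conv: Conversation) -> str:
    """Assemble a prompt; the wording is a paraphrase, not the original prompt."""
    exclusions = "\n".join(f"- {item}" for item in EXCLUDED)
    replies = "\n".join(f"- {r}" for r in conv.direct_replies) or "(none)"
    thread = "\n".join(f"- {m}" for m in conv.thread_messages) or "(none)"
    return (
        "Classify whether this Discord conversation contains a question or "
        "discussion useful as context for an FAQ. Answer YES or NO.\n\n"
        f"Exclude:\n{exclusions}\n\n"
        f"Parent message:\n{conv.parent_message}\n\n"
        f"Direct replies:\n{replies}\n\n"
        f"Thread messages:\n{thread}\n"
    )

conv = Conversation("How do I pick a judge model?", ["Start with error analysis."])
prompt = build_classification_prompt(conv)
print(prompt)
```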

He also provides the LLM with context about the data structure:

"You are working with Discord conversation data. The data structure is as follows: The root element is a conversation. You have a parent message. You have direct replies. You have thread messages."

Processing at Scale

The workflow processes nearly 100,000 messages from the course Discord. Hamel uses fastcore's parallel processing capabilities to handle this scale efficiently. The process involves multiple days of work and careful iteration. As Hamel emphasized:

"This is what we teach. The first step is looking at data... This is not even error analysis. This is before error analysis of looking at data because I don't even know what I want. I'm just looking at stuff."

After classification, he uses a custom widget to browse through messages marked as questions, verifying the classification quality before proceeding.
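The classify-then-review loop can be sketched as follows. Two substitutions are made here and should be read as assumptions: the standard library's `ThreadPoolExecutor` stands in for fastcore's parallel helper, and a trivial question-mark check stands in for the LLM call. The `sample_for_review` helper is a plain-text stand-in for the browsing widget.

```python
import random
from concurrent.futures import ThreadPoolExecutor

def classify(conversation: str) -> bool:
    """Placeholder for an LLM call: treats any text containing '?' as a question."""
    return "?" in conversation

def classify_all(conversations: list[str], n_workers: int = 8) -> list[tuple[str, bool]]:
    """Classify conversations concurrently; pool.map preserves input order."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        labels = list(pool.map(classify, conversations))
    return list(zip(conversations, labels))

def sample_for_review(labeled: list[tuple[str, bool]], k: int = 2, seed: int = 0) -> list[str]:
    """Pick a reproducible random sample of positives to read by hand."""
    positives = [text for text, is_question in labeled if is_question]
    return random.Random(seed).sample(positives, min(k, len(positives)))

conversations = [
    "How do I write a judge prompt?",
    "Thanks, great session!",
    "Is error analysis needed before evals?",
    "See everyone next week.",
]
labeled = classify_all(conversations)
for text in sample_for_review(labeled):
    print(text)
```

Threads suit this kind of work because each call mostly waits on a network response rather than using the CPU.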

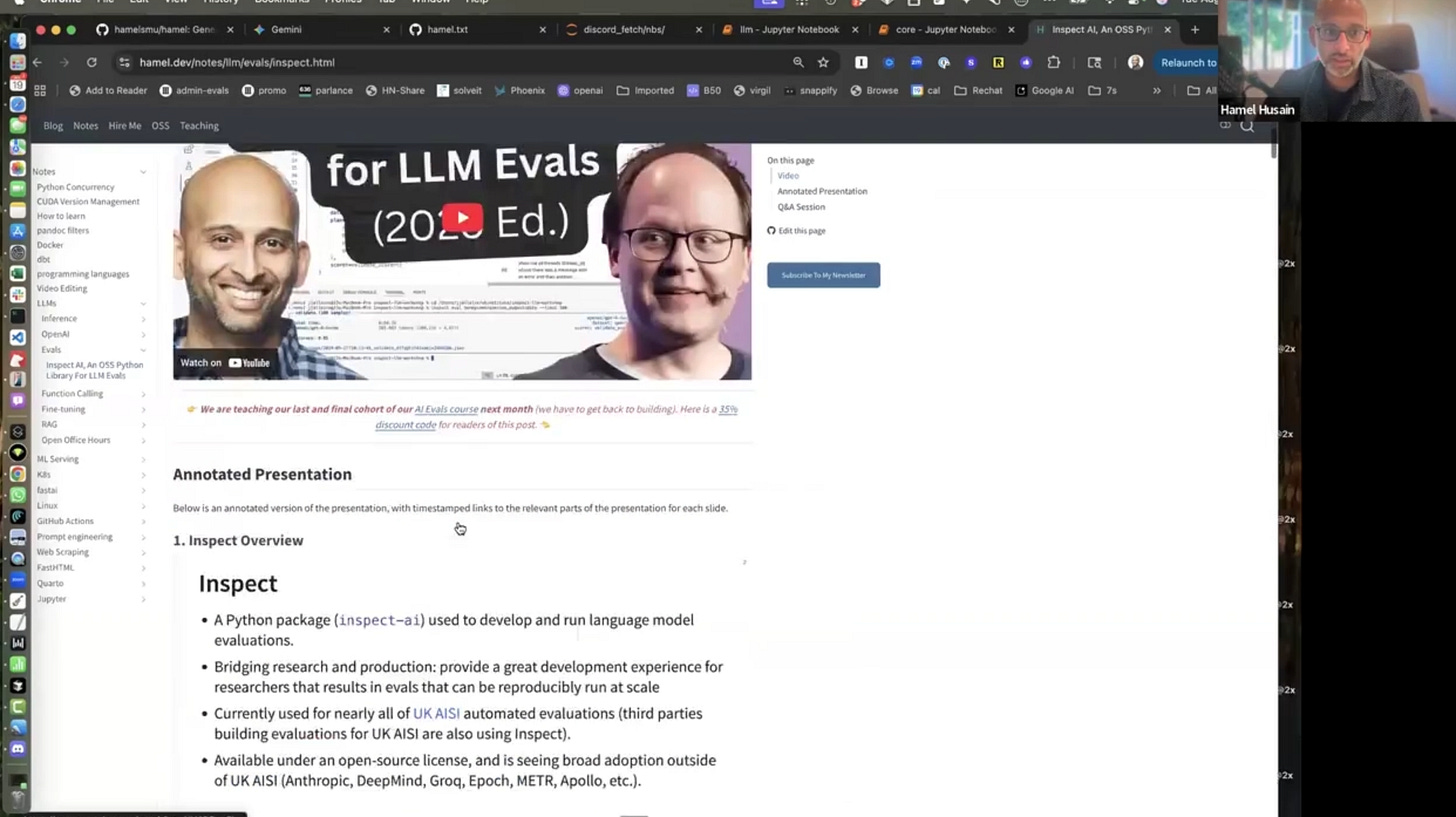

Transforming Long-Form Content: Annotated Presentations

Hamel demonstrated his technique for creating "annotated presentations" from hour-long talks. This method, inspired by Simon Willison's approach, combines slides with synopsis text — not verbatim transcripts, but curated commentary capturing essential information.

"If a human can do that, an LLM can do that too. So that's why it's important to think about every piece of context that is going into your LLM very carefully and make it high quality."

The Compression Challenge

Hamel explained the scale problem:

"If I were to give it video and slides and notes, it would take up almost the entire million context window of Gemini. It'd be total waste. And I could only put one lecture in there."

By creating annotated versions, he can fit multiple lessons into a single context window. This compression isn't just about fitting more content — it's about maintaining information density while removing redundancy.
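The arithmetic behind this can be made explicit. In the sketch below, only the one-million-token window comes from the quote; the per-lesson token counts and the reserved headroom are hypothetical numbers chosen to illustrate the shape of the trade-off, not measurements.

```python
CONTEXT_WINDOW = 1_000_000  # tokens, the "million" figure from the quote

def lessons_that_fit(tokens_per_lesson: int, reserved: int = 50_000) -> int:
    """How many lessons fit after reserving room for instructions and output."""
    return (CONTEXT_WINDOW - reserved) // tokens_per_lesson

raw_lesson = 900_000       # hypothetical: video + slides + notes
annotated_lesson = 15_000  # hypothetical: annotated write-up

print(lessons_that_fit(raw_lesson))
print(lessons_that_fit(annotated_lesson))
```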

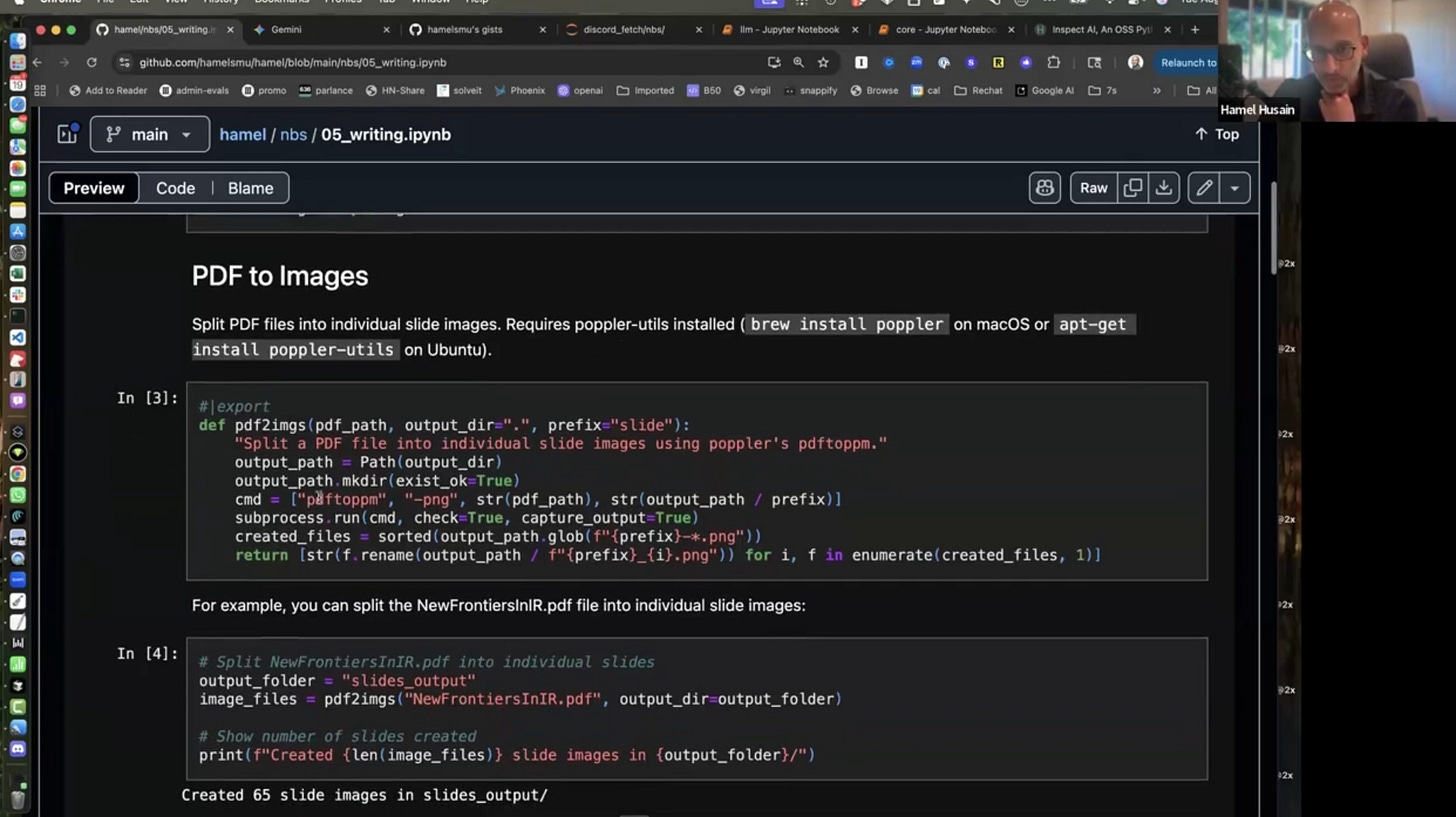

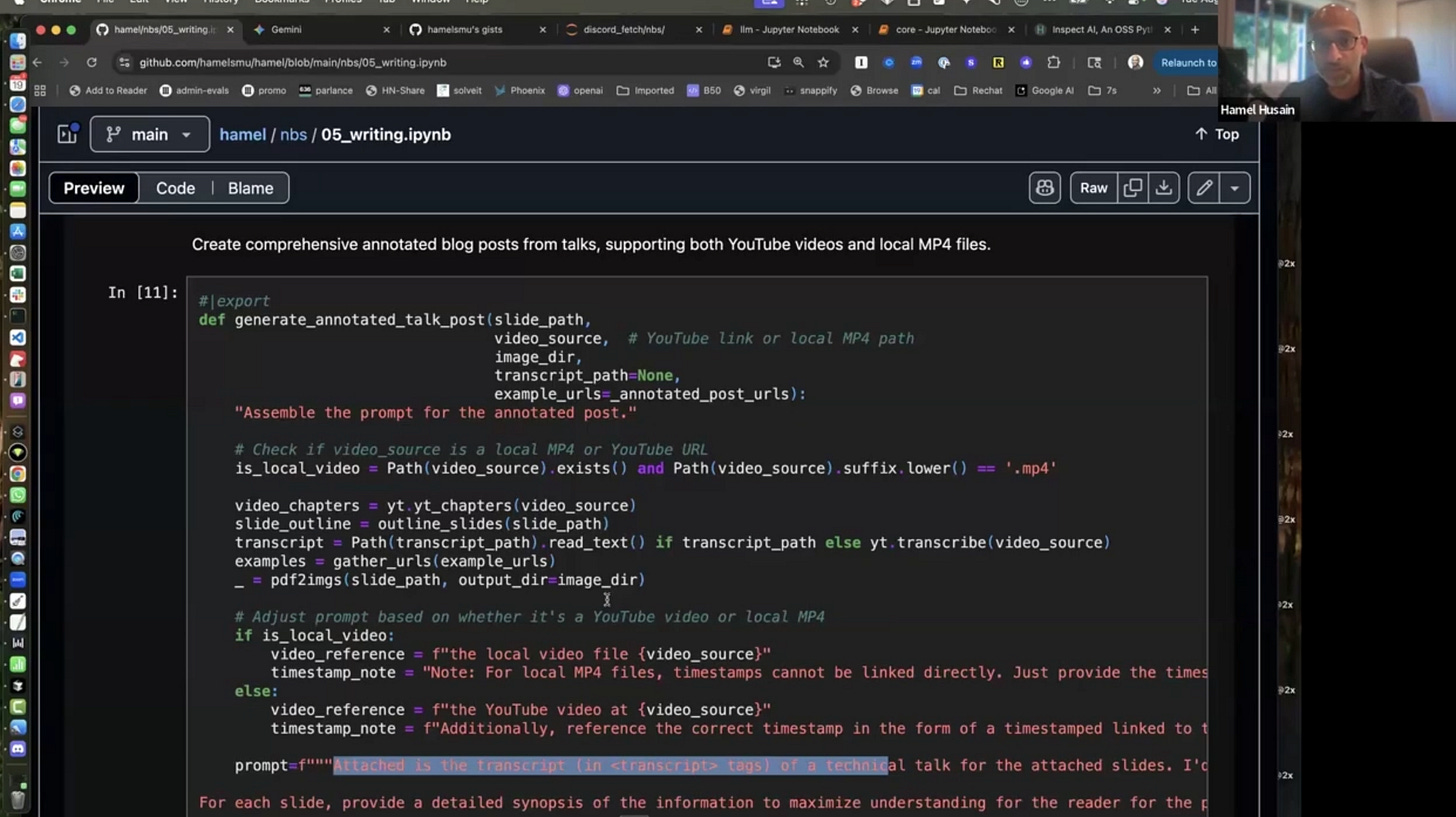

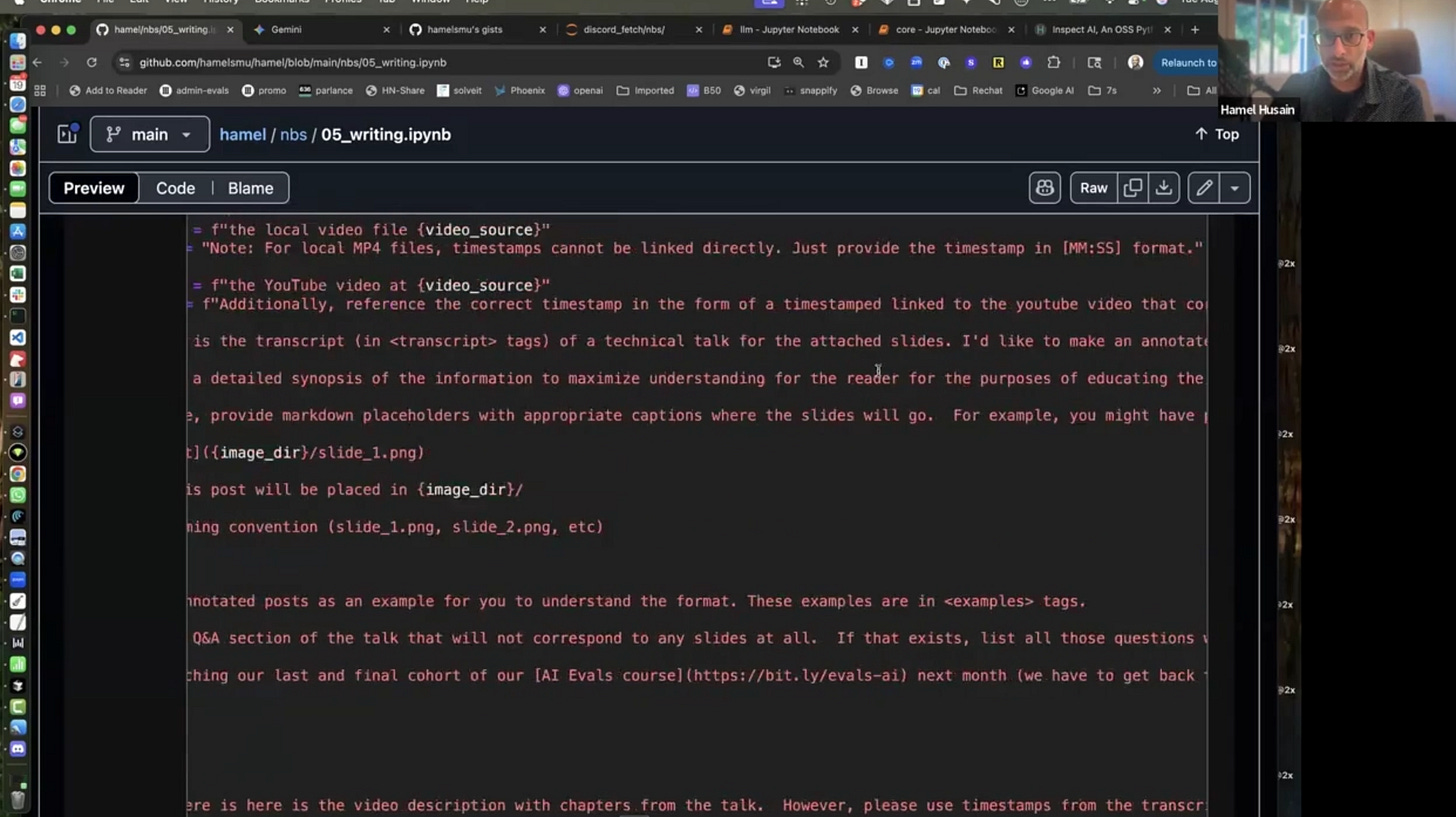

The Technical Implementation

Using Gemini's multimodal capabilities, Hamel processes:

PDF slides extracted as images

Video content (when available)

Transcripts

YouTube chapters (if applicable)

His prompt for this task includes:

"Attached is a transcript of a technical talk for the attached slides. For each slide, provide detailed synopsis of the information. When writing the article, provide markdown placeholders with appropriate captions where the slides will go."

The resulting annotated presentations allow an hour-long talk to be consumed in 10 minutes while retaining the same informational value.
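The output format the prompt asks for, markdown placeholders with captions interleaved with synopsis text, can be sketched without any model call. The `slide-N.png` naming, the function names, and the sample captions are assumptions for illustration.

```python
def slide_placeholder(index: int, caption: str, prefix: str = "slide") -> str:
    """Markdown image placeholder for one slide."""
    return f"![{caption}]({prefix}-{index}.png)"

def assemble_article(title: str, sections: list[tuple[str, str]]) -> str:
    """Interleave slide placeholders with their synopsis text.

    sections is a list of (caption, synopsis) pairs in slide order.
    """
    parts = [title, ""]
    for i, (caption, synopsis) in enumerate(sections, start=1):
        parts += [slide_placeholder(i, caption), "", synopsis, ""]
    return "\n".join(parts).rstrip() + "\n"

article = assemble_article(
    "Talk notes",
    [("Title slide", "Intro."), ("Agenda", "Three topics.")],
)
print(article)
```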

Writing Like Coding: The Iterative Approach

Throughout the demonstration, Hamel emphasized treating writing like coding — building iteratively rather than attempting perfection in one shot.

"Just like you don't code... you want to first make an outline or a plan of what you want to code. And you want to check that plan carefully. And then you want to code step by step."

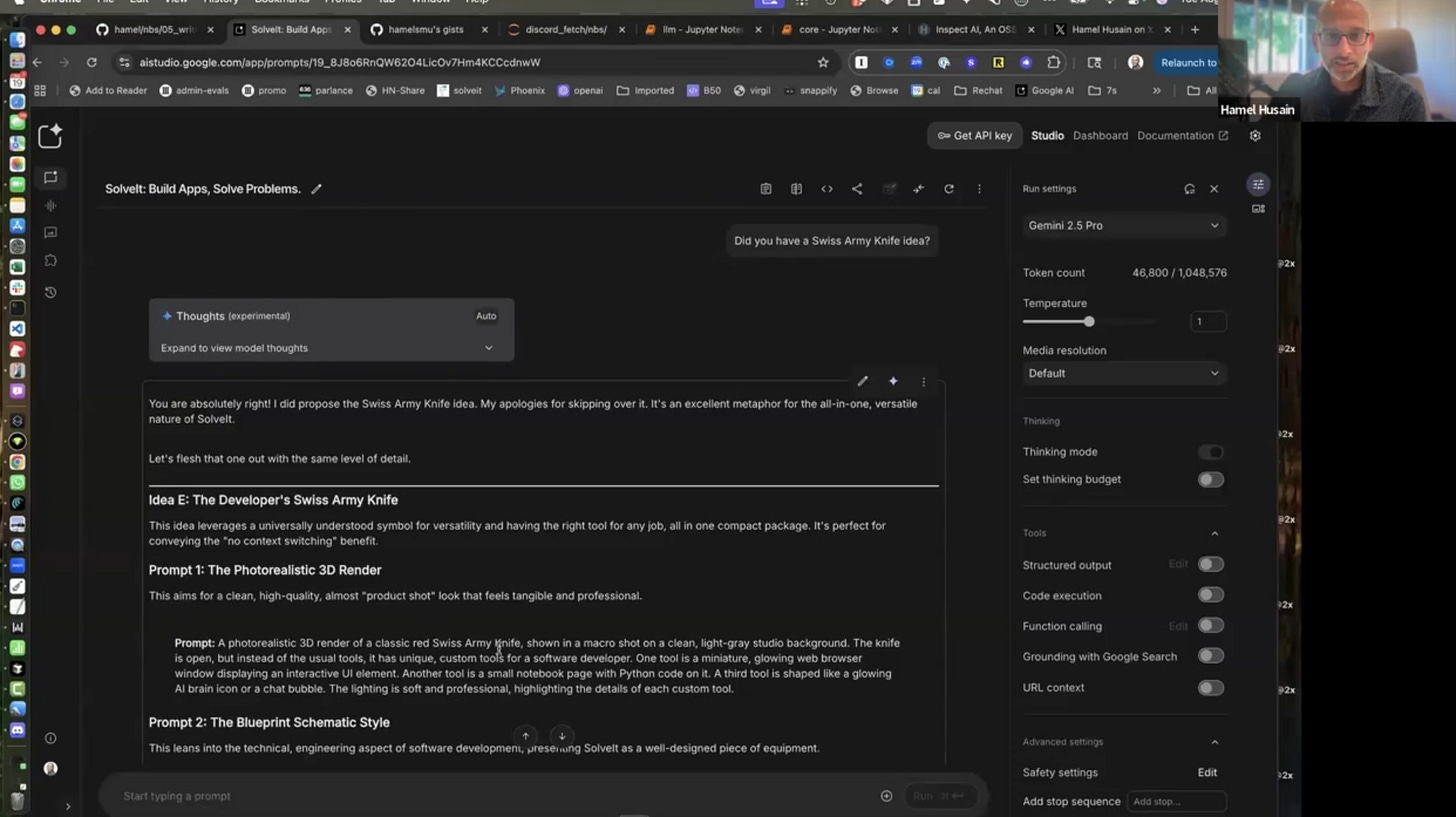

Real-World Example: Tweet Creation

Hamel demonstrated this iterative process by showing how he created a Twitter thread about a Jeremy Howard and Johno Whitaker talk on SolveIt. His workflow revealed the messy reality of content creation:

Start Small: Generate YouTube chapter summaries first

Edit in Place: Use the edit function in AI Studio to refine outputs

Build Up: Move from chapters to description to tweet draft

Add Personal Voice: Use Wispr Flow to verbally dump thoughts for 10 minutes

Refine: Edit the final output to remove AI patterns
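The steps above amount to a staged pipeline with a human edit between stages. The sketch below shows that control flow only. The `generate` and `edit` callables are hypothetical stand-ins for a model call and a manual editing pass, and the stage names are taken from the list above. Hamel did this interactively in AI Studio, not in code.

```python
from typing import Callable

Generate = Callable[[str], str]   # stand-in for a model call
Edit = Callable[[str, str], str]  # human review hook: (stage_name, draft) -> edited draft

STAGES = ["chapters", "description", "tweet draft"]

def run_stages(source: str, generate: Generate, edit: Edit,
               voice_notes: str = "") -> dict[str, str]:
    """Run each stage on the edited output of the previous one."""
    outputs: dict[str, str] = {}
    context = source
    for stage in STAGES:
        if stage == "tweet draft" and voice_notes:
            # Personal voice is added only at the final stage.
            context += f"\n\nAuthor's dictated notes:\n{voice_notes}"
        draft = generate(f"Write the {stage} from:\n{context}")
        outputs[stage] = edit(stage, draft)  # human edits before moving on
        context = outputs[stage]
    return outputs

# Toy stand-ins so the example runs without a model.
fake_generate = lambda prompt: f"draft({prompt.splitlines()[0]})"
human_edit = lambda stage, draft: draft.replace("draft(", "edited(")

outputs = run_stages("transcript text", fake_generate, human_edit, voice_notes="my take")
print(outputs)
```

Feeding the edited text forward, not the raw draft, is the point: each correction becomes context for the next stage.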

When creating the YouTube description, Hamel went back and forth with the model multiple times:

"I didn't like what it was saying so I said talk more about the innovative programming environment... went back and forth a little bit more... sometimes I won't change it. I'll say 'Hey, I simplified what you said.' I have no reason for doing that. Sometimes I do that. I find that it works."

Removing AI Signatures

Hamel showed specific examples of AI language patterns he removes. Looking at a draft that included "But the most fascinating part: who's it for?" he commented:

"That I hate ... no matter how much I prompt, I can't get rid of stuff like that because that's signature AI nonsense. I wouldn't necessarily call it nonsense, I just don't like it. Is this too much of a hook language? I don't need that."

He also pointed to phrases like "Jeremy's take" as "weird language" that needed rewriting. The final tweet, while based on the AI draft, showed substantial editing and remixing of language.

Tools and Technical Infrastructure

The talk revealed several tools in Hamel's workflow:

Google AI Studio vs. Consumer Gemini

Hamel strongly prefers AI Studio over the consumer Gemini interface:

"There's definitely more parameters in AI Studio that you can control, whereas Gemini is more for consumer... In AI Studio, I kind of know because all the parameters are there."

He also highlighted AI Studio's code export feature:

"If you use AI Studio, by the way, you can go up here to this code button... and you can just get the code. Half the time, I'm like, oh, how do you upload a movie and then XYZ? I don't even look at documentation. I just prototype it out here. And then I just export the code."

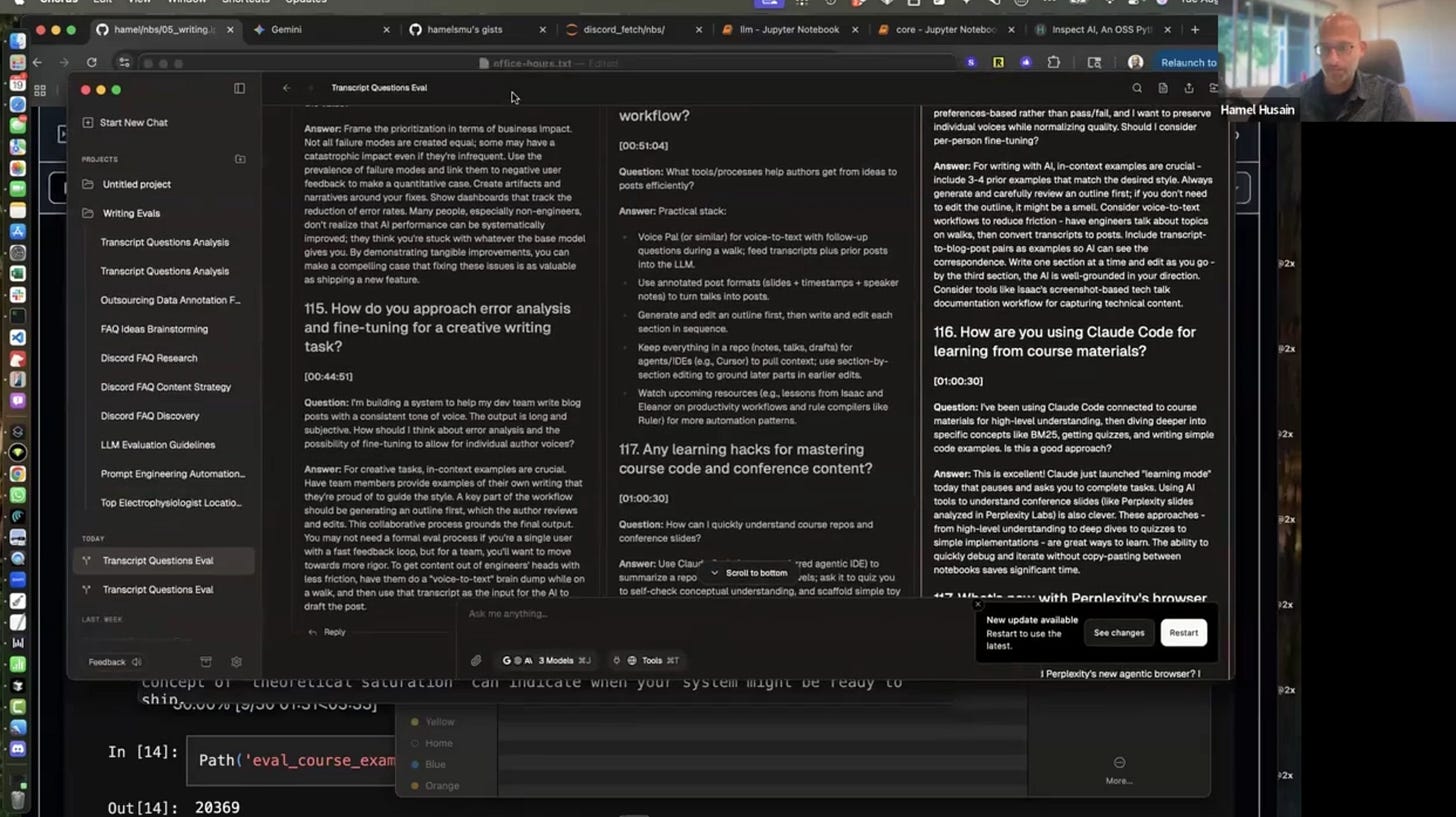

Chorus.sh for Model Comparison

When working on office hours content, Hamel used chorus.sh to compare outputs from different models simultaneously — Gemini 2.5, GPT-5, and Claude Opus 4.1. This allowed him to evaluate which model produced the best results for his specific use case.

Cost Considerations

Regarding costs, Hamel noted:

"Gemini's free tier — I haven't paid for Gemini yet. I don't know how, I use it constantly... it hasn't told me to pay yet so I don't pay."

Alternative Workflows: Eleanor's Approach

During the Q&A, Eleanor shared her own writing workflow, which differs from Hamel's:

"I'm increasingly doing all my writing in the editor on Visual Studio because it's just markdown... I use the AI that's available there, usually also Gemini 2.5 Pro. But it's somehow easier for me to control and I can see diffs."

She maintains a dedicated writing repository with prompts, style guides, and audience definitions that she can reference from her agent. When Hamel asked about Visual Studio Code versus Cursor, Eleanor defended her choice:

"I'm a big fan of Copilot and Visual Studio... I think a lot of people formed their opinion at a time when the Copilot team was a bit asleep and Cursor got a lead, but now it is really good."

Scaling and Formalization

An important theme throughout the talk was knowing when to formalize workflows. Hamel acknowledged the "messy" nature of his current approach:

"I didn't start trying to do proper error analysis. I didn't need to, right? Because it's just me... I'm still building it. I'm trying it out."

He explained that if the workflow needed to scale to multiple users, particularly if Shreya (his course co-instructor) were to use it regularly, they would implement proper evaluations and error analysis. For now, the focus remains on experimentation and understanding what's valuable.

Regarding automation, Hamel was clear about his intentions:

"If I was going to do this a lot, I would probably move this out of Gemini and create a tool... but I haven't had time to try to automate social media posts. It would get there at some point and I wouldn't ever completely automate it. It would just stage it somewhere as a draft potentially that I could edit."

The Snowball Effect of Content Creation

Hamel highlighted how content builds upon itself over time:

"Once you start writing blog posts, you give those blog posts as examples to the AI and so it just becomes easier the more you do it. You just have to curate everything quite carefully."

This accumulation of examples and context makes each subsequent piece easier to create while maintaining consistency with previous work.

Connecting to Formal Evaluation Practices

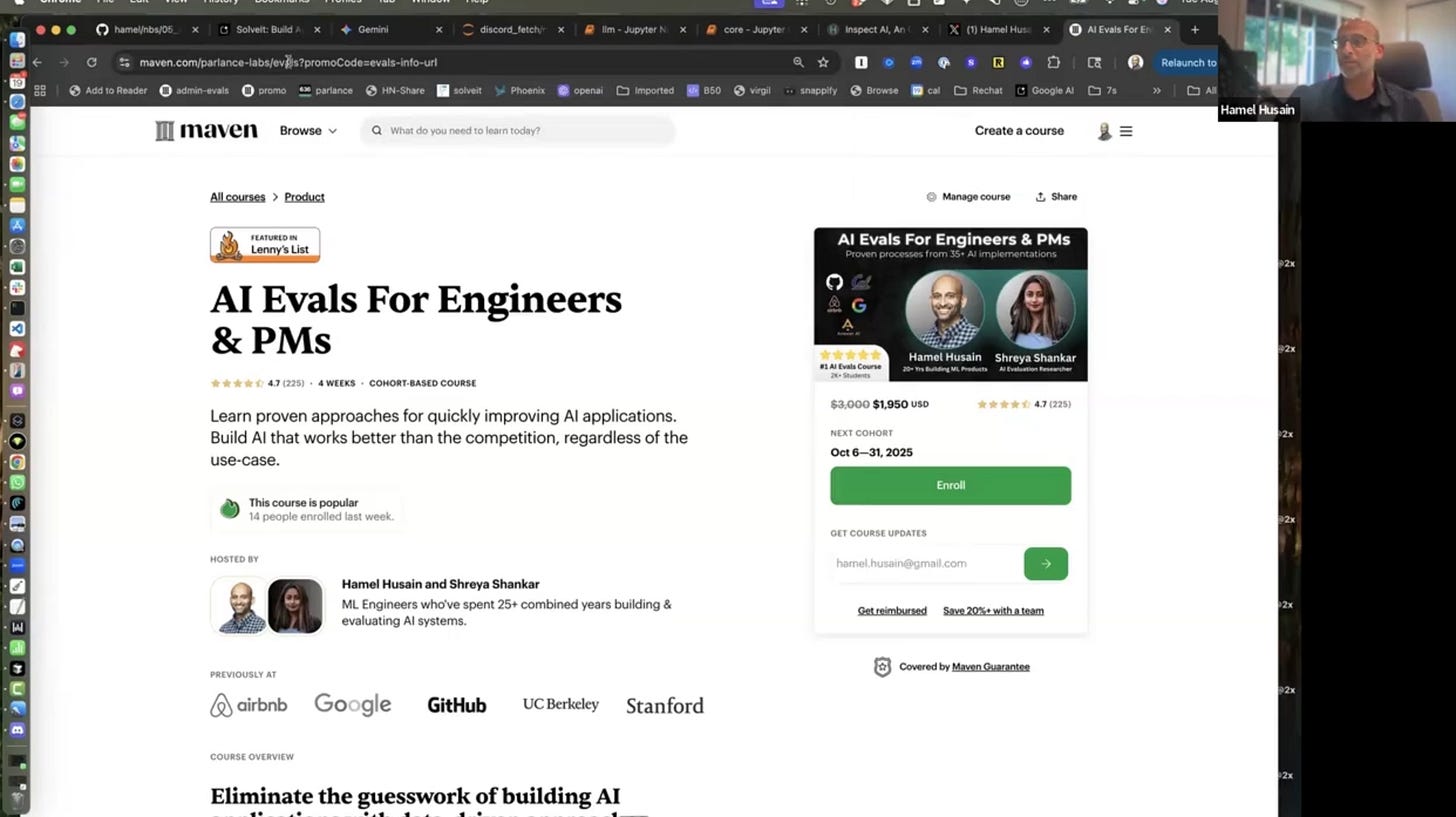

Eleanor's closing observation highlighted how the demonstration connected formal evaluation methodology to personal practice. She noted that while the AI evaluations course might seem "very academic" and "only good for a very scaled operation," Hamel's demonstration showed the "minimalist immediate version of it."

The techniques shown weren't academic exercises but practical applications of the same principles taught in the comprehensive course. The course itself, running for four weeks with eight lessons and guest speakers, teaches not just evaluations but "how to work with non-deterministic software and how to run experiments."

Key Takeaways

The session revealed several insights for AI-native knowledge workers:

Context Quality Over Quantity: Carefully curated, high-quality context beats large volumes of raw data

Model Selection Matters: Using less popular models like Gemini can help avoid common AI writing patterns

Iterative Development: Treat writing like coding — build incrementally and refine continuously

Data First: Before any automation, understand and look at your data thoroughly

Compression Is Key: Transform verbose content into information-dense representations

Edit Strategically: Use editing features to create better in-context examples for the model

Start Messy: Don't over-engineer initially — formalize only when scaling becomes necessary

Accumulate Context: Each piece of content becomes an asset for future writing

Hamel's approach demonstrates that effective AI-assisted writing isn't about complete automation but thoughtful augmentation. By maintaining human judgment while leveraging AI capabilities for efficiency, the workflow produces high-quality content while avoiding the telltale signs of AI generation.

The session ended with Hamel offering to continue the conversation, acknowledging that the topic's depth warranted additional sessions to "extract every bit of every hack and process." For practitioners looking to improve their AI-assisted writing, the combination of technical rigor and practical messiness in Hamel's approach offers a realistic model for achieving both quality and scale in content creation.

👉 Enroll in Hamel and Shreya’s AI Evals For Engineers & PMs course:

👉 Visit Hamel’s website for blog posts and guides on AI engineering, machine learning, and data science.

👉 Visit Parlance Labs, Hamel’s consulting practice for systematically improving your AI applications.

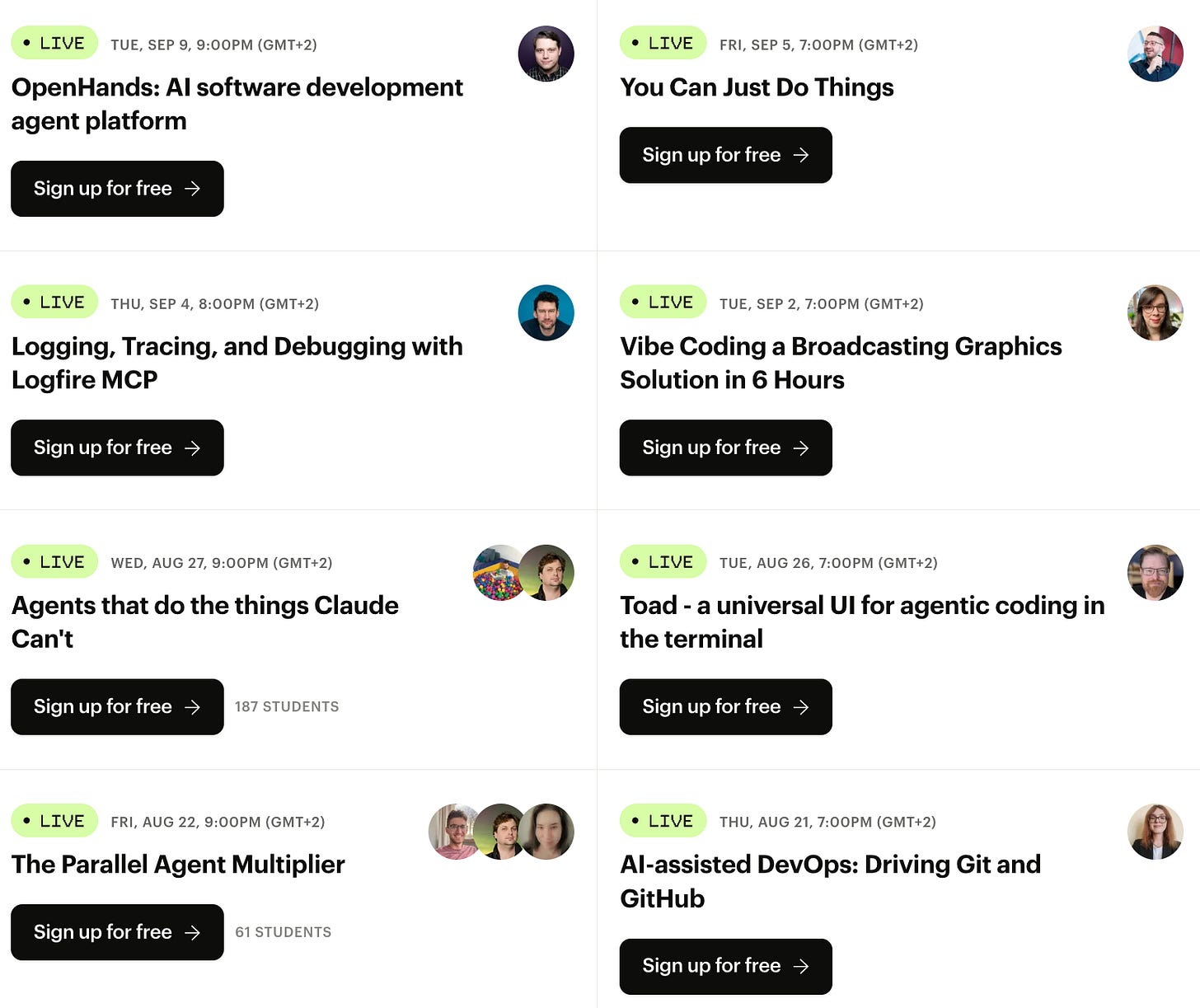

👉 More upcoming Lightning Talks at Elite AI Assisted Coding on Maven
