Boosting Claude: Faster, Clearer Code Analysis with mgrep

I ran an experiment to see how a powerful search tool could improve an LLM’s ability to understand a codebase. By giving Claude a simple instruction to use mgrep, a new semantic search tool by mixedbread, I saw a dramatic increase in speed, efficiency, and quality of its analysis.

Note: Mixedbread does a lot more than traditional single-vector hybrid search plus a reranker; it is a multi-vector, multi-modal search. This is not the same semantic search that fell out of favor for agentic search.

I took a complicated feature I’m currently building and asked Claude to explain it. I ran the same prompt twice. The first time, I let Claude work on its own. The second time, I added a single instruction about mgrep. Here’s a side-by-side video of one run. In this post we’ll break down what mgrep is and how using it improves Claude’s performance.

What does mgrep do?

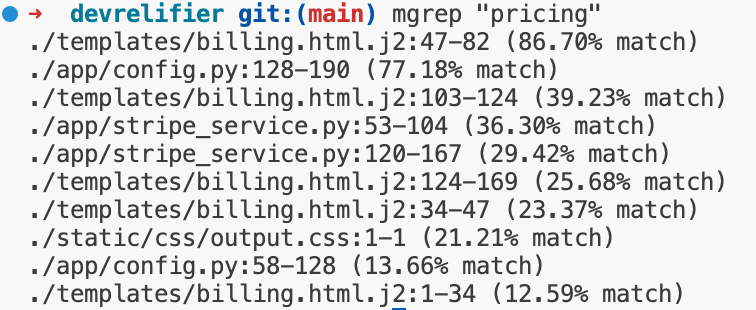

mgrep is a grep-like tool that uses semantic search. The basic usage is mgrep followed by a natural-language query in quotes.

In a search about pricing, it didn’t just pull in files or line numbers containing the string “pricing”; it grabbed chunks that were semantically related to pricing.
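As a minimal sketch, the basic usage could be scripted from Python like this. It assumes mgrep is installed, on your PATH, and already set up for the repository; the only invocation form used is the quoted-query one shown in this post, and the helper names `build_mgrep_command` and `run_mgrep` are hypothetical.

```python
import shlex
import subprocess


def build_mgrep_command(query: str) -> list:
    """Return the argv for `mgrep "<query>"`, the only form shown in this post."""
    if not query.strip():
        raise ValueError("query must not be empty")
    return ["mgrep", query]


def run_mgrep(query: str) -> str:
    """Run mgrep and return its stdout (assumes mgrep is installed and on PATH)."""
    result = subprocess.run(
        build_mgrep_command(query),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# Show the shell-equivalent command without actually running mgrep.
print(shlex.join(build_mgrep_command("how is pricing calculated")))
```

Passing the query as a single list element avoids shell quoting problems when the query contains spaces or punctuation.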

The A/B Test Setup

My goal was to have the AI explain the image handling, user experience (UX), and editor architecture in my application.

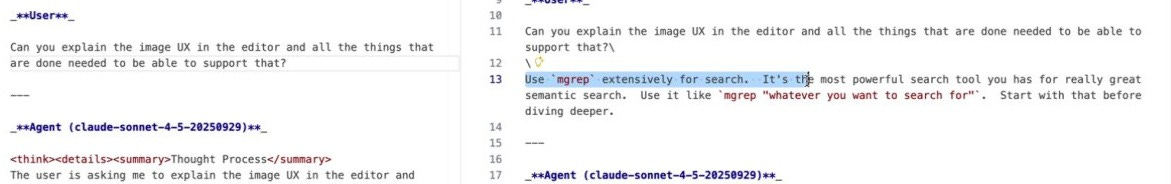

Prompt A (Standard Claude):

Can you explain the image, UX, and the editor, and all the things that are done in order to be able to support that?

Prompt B (Claude + mgrep):

This was the exact same prompt as above, with one addition:

Use mgrep extensively for search. It’s the most powerful search tool and really great for semantic search. Use it like mgrep “whatever you want to search for?”. Start with that before diving deeper.

I didn’t use any special plugin, MCP server, skill, etc. This was just a hint in the prompt telling the model to lean on a specific tool for information gathering.
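To make the A/B setup concrete, here is a small sketch of how the two prompts relate. The prompt text is taken from this post; the `build_prompt` helper and the variable names are hypothetical and only illustrate that Prompt B is Prompt A plus one appended hint.

```python
from typing import Optional

BASE_PROMPT = (
    "Can you explain the image, UX, and the editor, and all the things "
    "that are done in order to be able to support that?"
)

MGREP_HINT = (
    "Use mgrep extensively for search. It's the most powerful search tool "
    "and really great for semantic search. Use it like "
    'mgrep "whatever you want to search for?". '
    "Start with that before diving deeper."
)


def build_prompt(base: str, hint: Optional[str] = None) -> str:
    """Return the base prompt, optionally followed by a tool hint."""
    return base if hint is None else f"{base}\n\n{hint}"


prompt_a = build_prompt(BASE_PROMPT)              # Standard Claude
prompt_b = build_prompt(BASE_PROMPT, MGREP_HINT)  # Claude + mgrep

print(prompt_b)
```

Keeping the base prompt identical in both variants means the mgrep hint is the only difference between the two runs.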

The Numbers: Faster and More Efficient

The quantitative results were clear. Guiding the model toward a better search tool made the entire process faster and required far less work from the model. Since the process isn’t fully deterministic, I did three runs of each.

Speed:

Standard Claude runs:

1 minute, 58 seconds

2 minutes, 28 seconds

4 minutes, 7 seconds

Claude + mgrep runs:

1 minute, 6 seconds (56% of standard time)

1 minute, 48 seconds (73% of standard time)

1 minute, 48 seconds (44% of standard time)

The mgrep version was nearly twice as fast.
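The percentages and the "nearly twice as fast" claim can be checked with a few lines of arithmetic. This sketch uses only the run times reported above, pairs the runs in the order listed, and uses hypothetical helper and variable names.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes/seconds duration to total seconds."""
    return minutes * 60 + seconds


# Run times as reported in this post, in the order listed.
standard_runs = [to_seconds(1, 58), to_seconds(2, 28), to_seconds(4, 7)]
mgrep_runs = [to_seconds(1, 6), to_seconds(1, 48), to_seconds(1, 48)]

# mgrep time as a percentage of the matching standard run.
per_run_percent = [
    round(100 * fast / slow) for slow, fast in zip(standard_runs, mgrep_runs)
]

# Overall speedup across all three runs combined.
overall_speedup = round(sum(standard_runs) / sum(mgrep_runs), 2)

print(per_run_percent)   # [56, 73, 44]
print(overall_speedup)   # 1.82
```

Summed across the three runs, the standard setup took 513 seconds against 282 seconds with mgrep, which is where the roughly 1.8x figure comes from.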

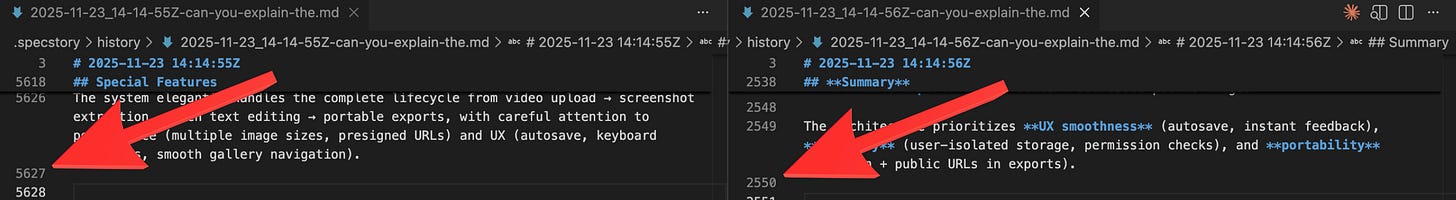

Efficiency (Agent History File):

Standard Claude:

4,984 lines

4,868 lines

5,626 lines

Claude + mgrep:

2,061 lines (41% of standard lines)

1,948 lines (40% of standard lines)

2,549 lines (45% of standard lines)

The mgrep-assisted run used less than half the context to arrive at its answer. This translates to fewer tokens, less processing, and a more focused analysis.
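The post doesn't say how the history files were measured. One simple way, assuming each agent history is a plain-text file, is to count its lines and compare. The file used below is a throwaway demo, not a real history file, and the helper names are hypothetical; the comparison itself uses the line counts reported above.

```python
import tempfile
from pathlib import Path


def count_lines(path: Path) -> int:
    """Count lines in a text file without loading it all into memory."""
    with path.open("r", encoding="utf-8", errors="replace") as handle:
        return sum(1 for _ in handle)


def percent_of(baseline: int, value: int) -> int:
    """Return value as a whole-number percentage of baseline."""
    return round(100 * value / baseline)


# Demo of count_lines on a temporary stand-in file.
with tempfile.TemporaryDirectory() as tmp:
    demo_file = Path(tmp) / "history.txt"
    demo_file.write_text("one\ntwo\nthree\n", encoding="utf-8")
    demo_count = count_lines(demo_file)

# Line counts as reported in this post, in the order listed.
reported_standard = [4984, 4868, 5626]
reported_mgrep = [2061, 1948, 2549]

per_run = [percent_of(s, m) for s, m in zip(reported_standard, reported_mgrep)]
overall = percent_of(sum(reported_standard), sum(reported_mgrep))

print(demo_count, per_run, overall)  # 3 [41, 40, 45] 42
```

Line count is only a rough proxy for tokens, since line lengths vary, but it is cheap to measure and consistent across runs.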

But speed and efficiency are meaningless if the quality of the answer suffers. Let’s look.

The Analysis: Better Insight, Accuracy, and Structure

The mgrep-powered response was not only faster but also more insightful, accurate, and logically structured. I compared the first run of each, because that’s the one I would have used in practice.

High-Level Insight from the Start

The mgrep response showed a better understanding of the feature.

Standard Claude started with a generic description: “the editor supports images through multi-layered architecture.”

Claude + mgrep was specific and immediately useful: it identified the Tiptap React editor and, more importantly, the two core operational modes of the gallery component: a “selection mode” and a “gallery change mode.”

This second description is far more valuable to me. It gets straight to how the feature actually works.

Improved Technical Accuracy

Deeper in the analysis, standard Claude made a subtle but significant error. It described two different ways to enter the full-screen gallery view and presented them as separate functionalities. The mgrep version correctly understood that these were just two different triggers for the same action.

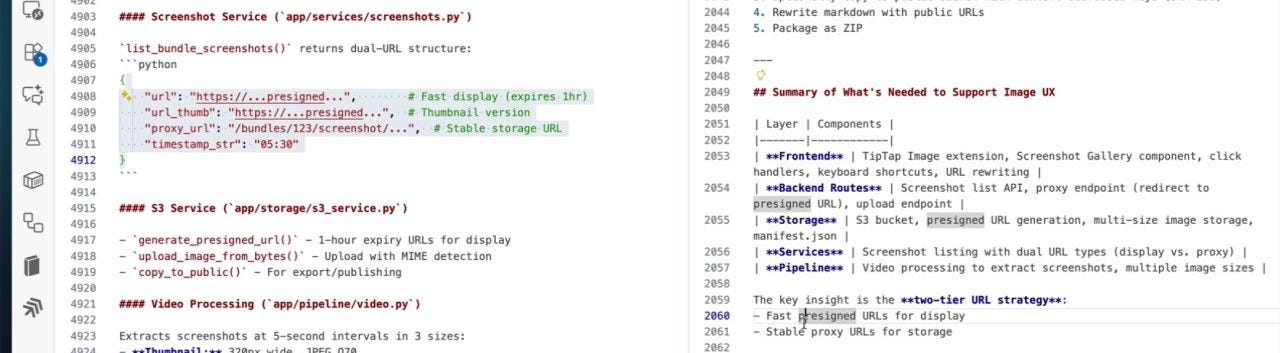

A More Logical Flow

The structure of the final reports also revealed a major difference.

The mgrep response was organized logically. It started with the front-end UX, then moved systematically through the architecture: back-end routes, the storage layer, and finally markdown handling.

The standard Claude response was more scattered. It jumped between front-end UX, back-end details, and then back to another front-end component, making it harder to follow.

For example, my app uses a two-tier URL strategy for images. The mgrep response explained the intent behind this: using fast, pre-signed URLs for thumbnails and a stable proxy for permanent images. Standard Claude presented the raw JSON structure, which is less helpful for what I was asking.
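To illustrate the intent behind a two-tier URL strategy, here is a rough sketch. It is not the app's actual code: the hosts, the signing key, the URL layout, and the function names are all hypothetical, and the HMAC signing is a stand-in for whatever pre-signing the storage provider offers.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"demo-secret"                       # hypothetical signing key
STORAGE_BASE = "https://storage.example.com"  # hypothetical storage host
APP_BASE = "https://app.example.com"          # hypothetical app host


def thumbnail_url(key: str, ttl_seconds: int = 300, now: Optional[int] = None) -> str:
    """Tier 1: short-lived signed URL straight to storage (fast, but it expires)."""
    issued_at = int(time.time()) if now is None else now
    expires = issued_at + ttl_seconds
    payload = f"{key}:{expires}".encode()
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return f"{STORAGE_BASE}/{key}?expires={expires}&sig={signature}"


def permanent_url(image_id: str) -> str:
    """Tier 2: stable proxy URL; the server resolves the image at request time."""
    return f"{APP_BASE}/images/{image_id}"


print(thumbnail_url("thumbs/cat.png", now=1_000))
print(permanent_url("img_123"))
```

The trade-off is that the signed URL skips the app server but stops working after its expiry, while the proxy URL stays valid indefinitely at the cost of an extra hop, which is why it suits links saved into a document.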

Key Takeaways

The tools we give our AI assistants matter immensely.

Better Tools, Better Results: A powerful semantic search tool like mgrep results in a faster, more efficient, and higher-quality analysis.

Efficiency is a Quality Signal: The mgrep version used less than half the context. This wasn’t a shortcut; it was a sign of a more direct path to the answer.
